In 2014, Guillaume Rocheron talked to us about MPC’s work on GODZILLA. Today he discusses his work on BATMAN V SUPERMAN, his third collaboration with director Zack Snyder and VFX Supervisor John “DJ” Des Jardin.

How did director Zack Snyder approach the VFX on this show?

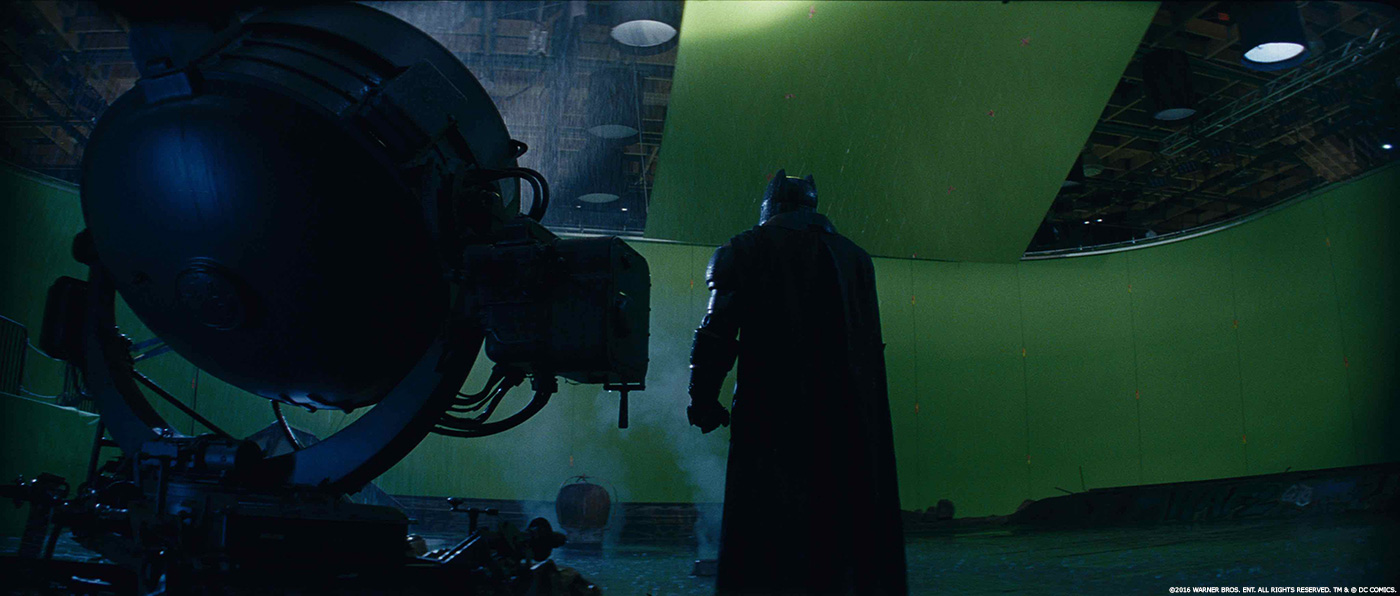

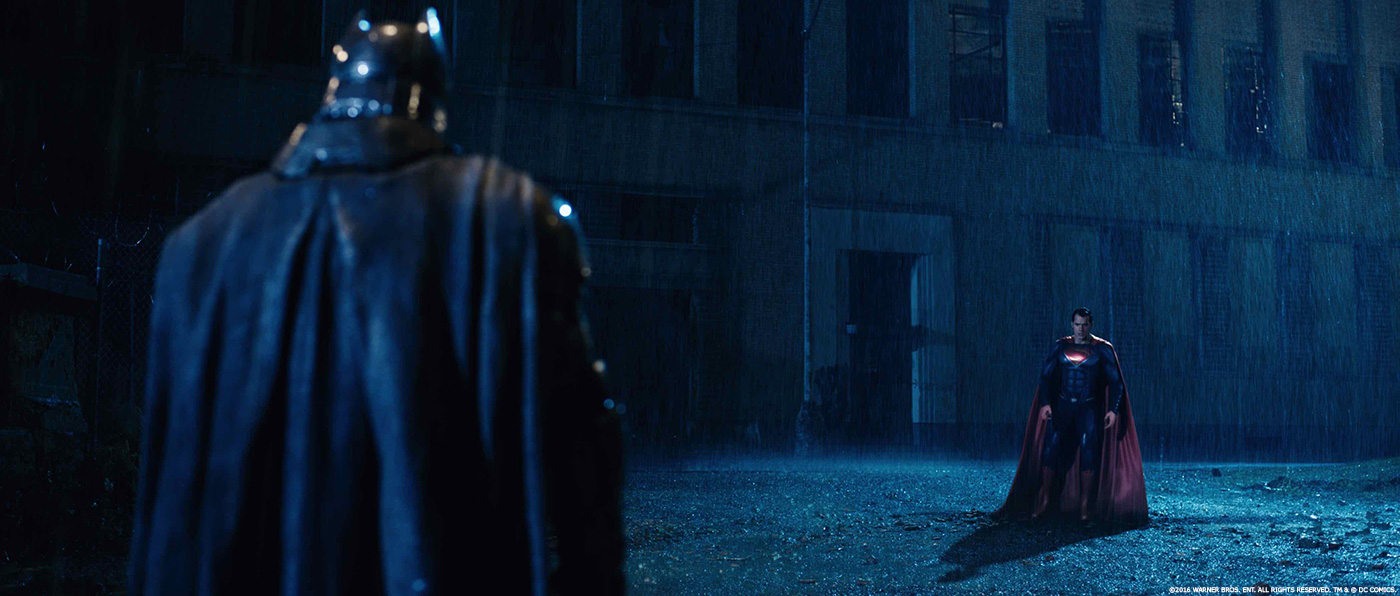

This movie and universe are grounded in the real world, so Zack captured as much as possible practically, probably more than he ever had before. There were a lot of sets, stunts, locations and practical effects. In order to create the largest possible canvas, Zack shot on 35mm film and, for some of the key sequences, on IMAX. It was an exciting opportunity to create such iconic imagery on the best large format available. Zack is very well versed in VFX, and his relationship with his long-term visual effects supervisor, DJ Des Jardin, allowed him to trust and embrace the process. After all, this is Batman v Superman, so VFX plays a central role in telling the story of these characters.

What was it like to work with VFX Supervisor John Des Jardin?

This was the 3rd movie I worked on with DJ, who is a longtime collaborator of Zack’s. Our relationship was fluid and collaborative, and DJ’s guidance helped us realize Zack’s distinct vision of the characters and universe. DJ and I started to discuss the project a few weeks after MAN OF STEEL was released, and we got involved as the script was being shaped in the following months.

Which sequences did MPC work on?

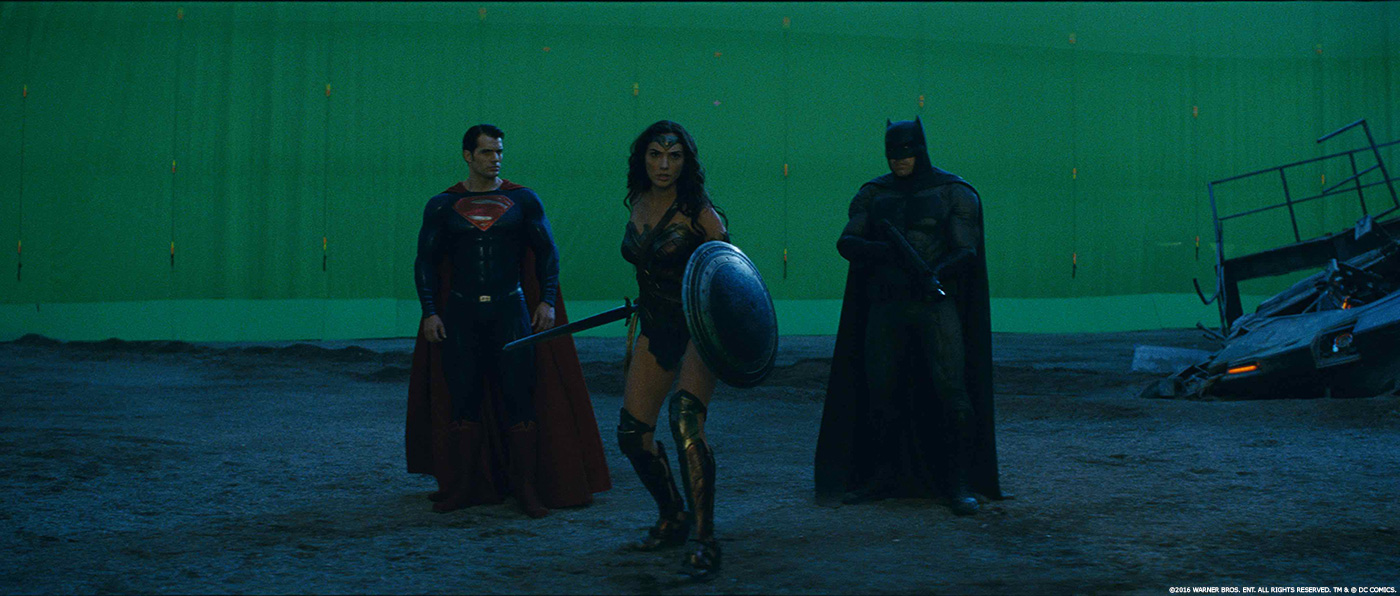

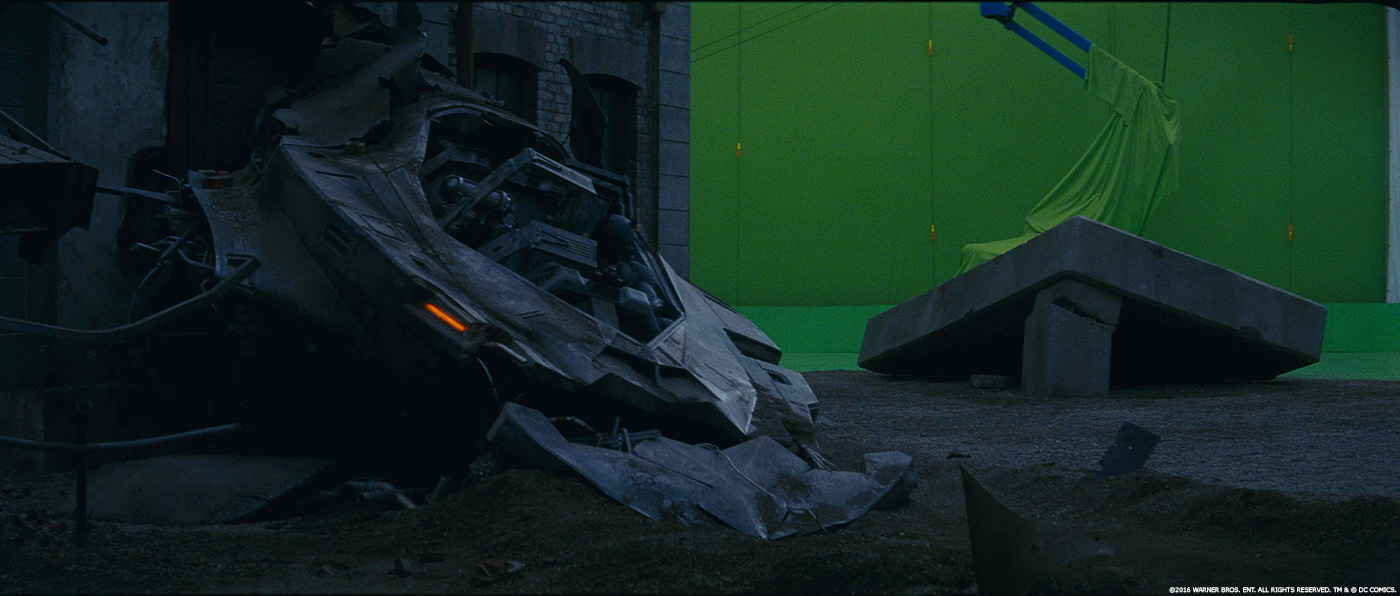

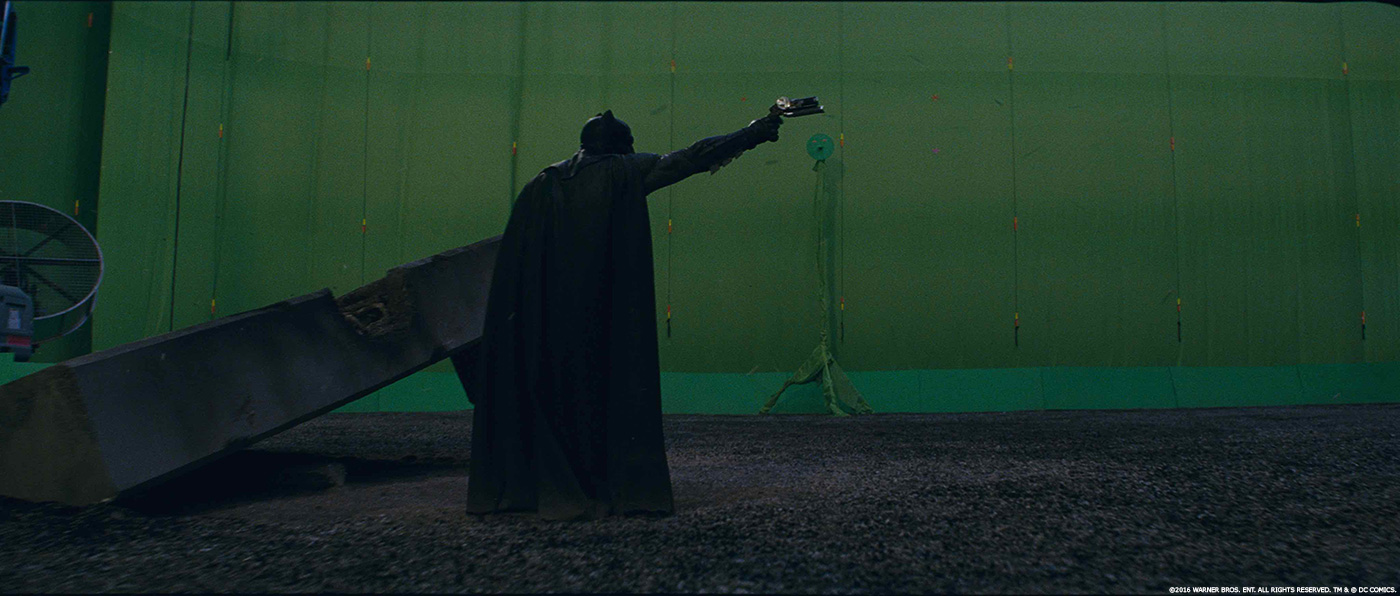

Our biggest assignments were the actual fight between Batman and Superman in the 2nd act and the Gotham Battle, featuring Batman, Superman, Wonder Woman and Doomsday, in the 3rd and final act. The IMAX resolution required that we push the technology and quality of our digital doubles further than we ever had before, which was an exciting challenge. We also created the new Gotham City, a vast, complex digital environment that required months of planning and detailed mapping. We also had the fun project of introducing Flash and Cyborg in brief sequences.

How did you upgrade the Superman and Batman digital doubles?

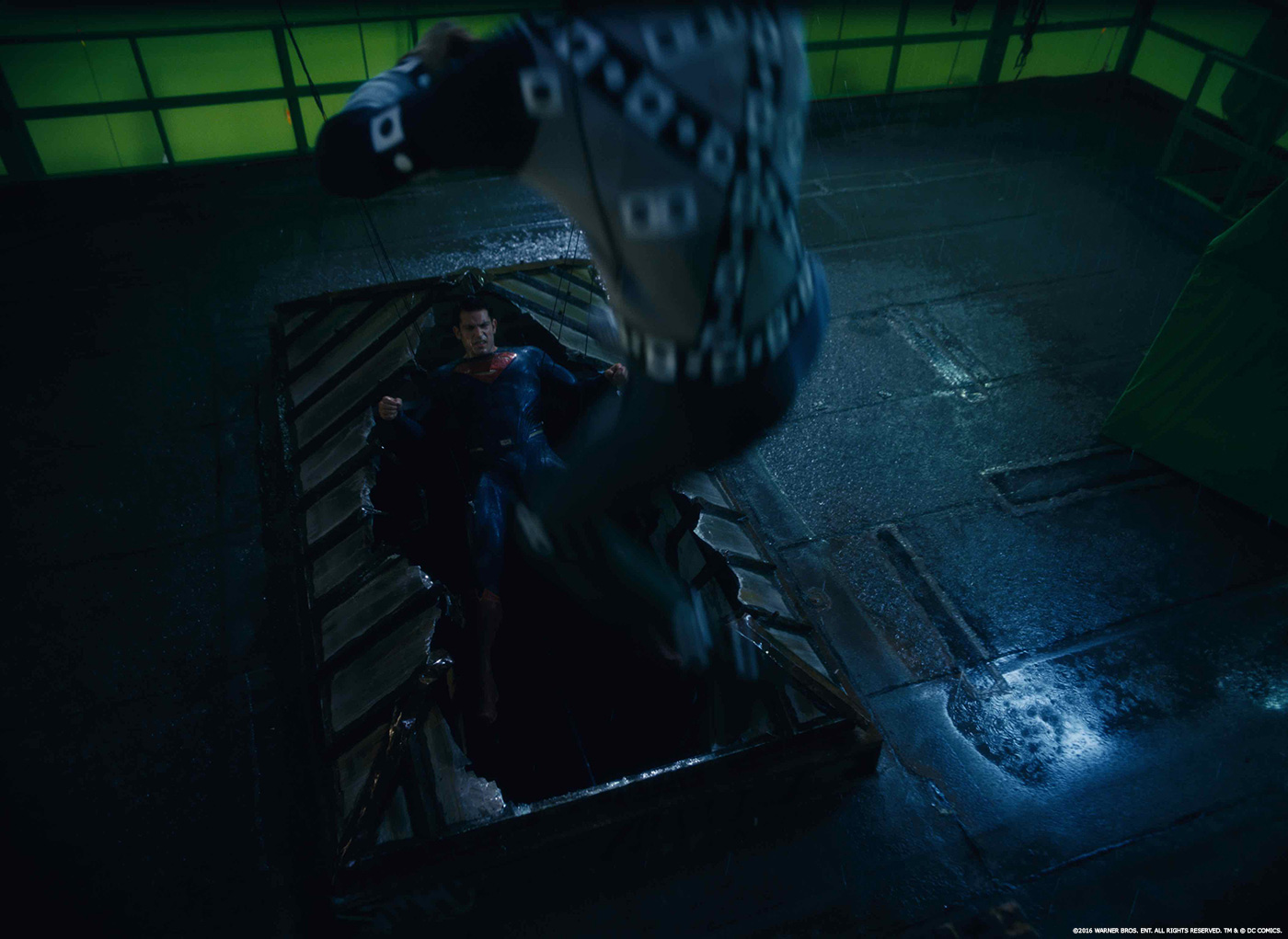

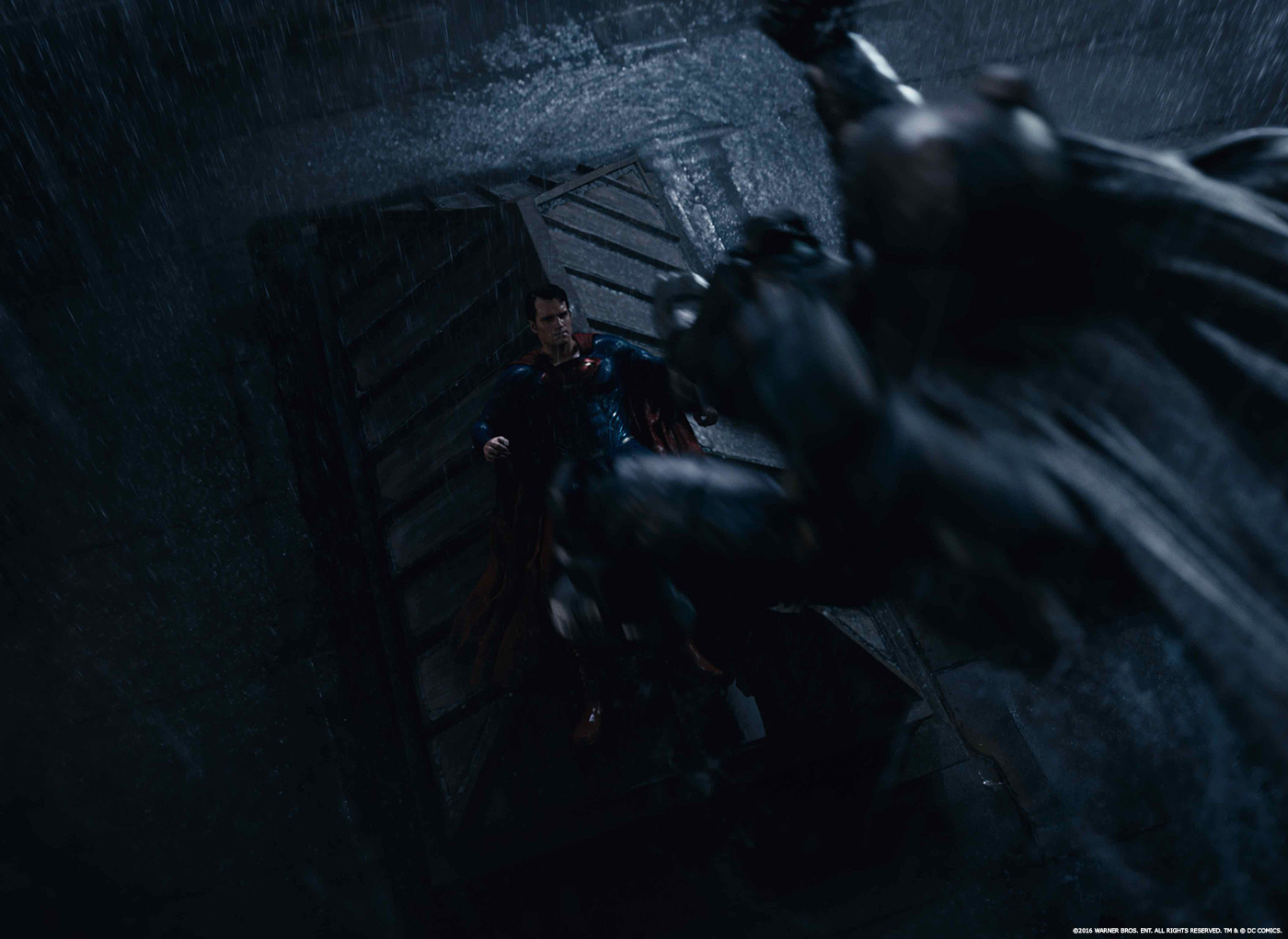

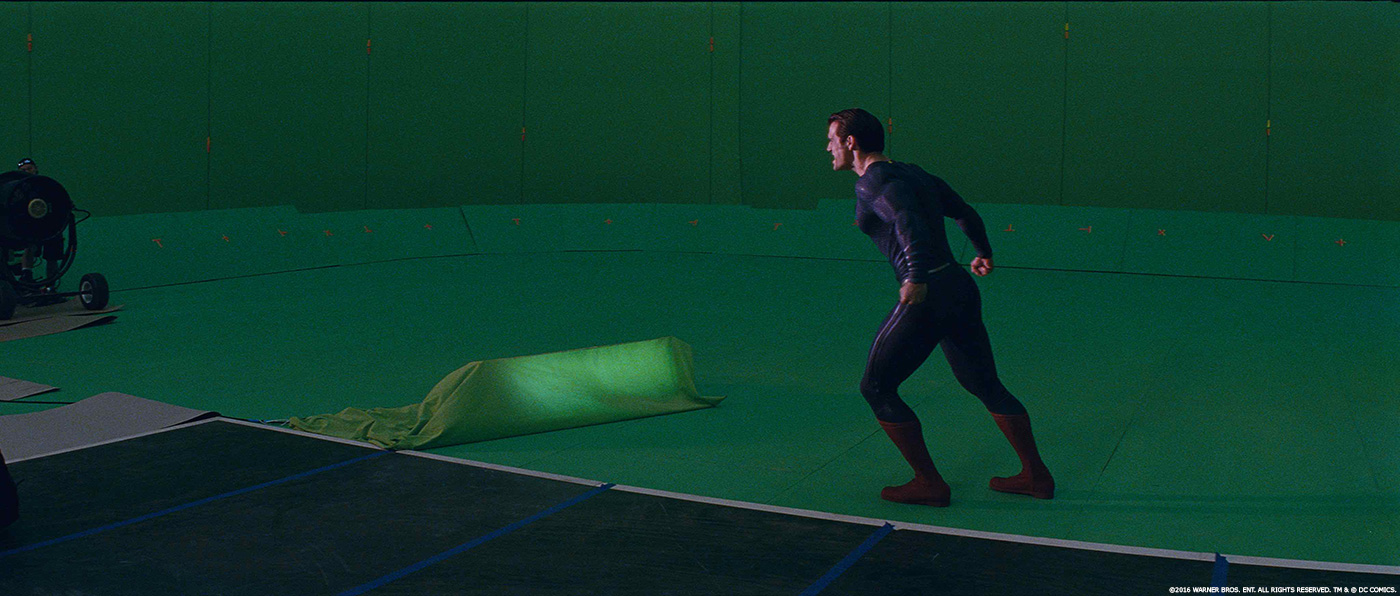

Batman, in his Mech-Suit, and Superman were complex digital characters to create because they both had to hold up perfectly at IMAX resolution during their title fight sequence. We used both digi-doubles extensively, at times up to full frame on their faces. We went to great lengths to capture as much information as possible for each actor. Gentle Giant gave us the ability to make multiple Light Stage facial expression scans at very high resolution. Mova scans were captured for additional blendshapes and transitions. We also used what we called the ShandyCam, a custom-made portable rig of 6 DSLRs. It was a fast and efficient way to grab synchronized photos of the actors on a shot-by-shot basis to feed our expression library.
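For readers unfamiliar with blendshapes, here is a minimal sketch of the general idea: a face pose is the neutral mesh plus a weighted sum of offsets toward scanned expression targets. This is a generic textbook formulation, not MPC's rigging tools; the function name `blend_face` and the tiny two-vertex "mesh" are made up for illustration.

```python
def blend_face(neutral, targets, weights):
    """Return the neutral mesh offset toward each expression target.

    neutral: list of (x, y, z) vertices for the relaxed face.
    targets: dict of expression name -> list of (x, y, z) vertices.
    weights: dict of expression name -> blend weight (0.0 to 1.0).
    All names here are hypothetical; this is not a production rig.
    """
    result = []
    for i, base in enumerate(neutral):
        vertex = list(base)
        for name, weight in weights.items():
            target = targets[name][i]
            for axis in range(3):
                # Add the weighted delta between target and neutral.
                vertex[axis] += weight * (target[axis] - base[axis])
        result.append(tuple(vertex))
    return result


# Toy example: two vertices, one "smile" target raised by 1 unit in Y.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {"smile": [(0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]}
half_smile = blend_face(neutral, targets, {"smile": 0.5})
```

In this toy case a weight of 0.5 moves each vertex halfway toward the smile target. More scanned expressions simply mean more entries in `targets`, which is one reason a large expression library helps preserve an actor's likeness.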

For some all-CG scenes, we recorded the actors’ performances with a helmet-mounted camera and tracking markers on their faces. Dan Zelcs and his rigging team developed new tools and techniques allowing us to maximize the use of all the real-world data we had captured. It was the key not only to making good-looking digital characters, but also to maintaining the likeness to the actors at all times. We updated our renderer to RenderMan RIS, which dramatically improved the quality and accuracy of our shading and rendering.

The fight scenes constantly switch from real to digital actors, sometimes transitioning back and forth multiple times within the same shot. The new rendering system gave us the opportunity to accurately simulate and replicate Larry Fong’s lighting on skin, hair, eyes and costumes.

Can you explain in detail the design and creation of Doomsday?

We started from a clay maquette that Patrick Tatopoulos and Jordu Schell created for Zack. Because in the film Doomsday is created out of the Genesis Chamber from Zod’s body, the idea was to explore how the gene transformation is all about efficiency. It expands the body to extreme physical abilities, with little regard to the resulting aesthetic. This resulted in a pretty anamorphous creature with every bone, tendon and muscle expanded to its maximum capability. MPC’s art department then created high-resolution conceptual drawings and 3D models based on the original maquette, in order to refine the transformation of each body part but still maintain some aspects of the original comic book design.

We built 2 versions of Doomsday: the newborn version was smaller and not fully developed, while the fully formed version, seen as Doomsday builds up its strength in the film, was much bigger, with every inch of its body developed beyond its maximum capabilities.

Doomsday, in its final form, had a body that expanded so much that its skin was cracked open in places, exposing tendons and muscles, which presented a real challenge for us. Generally a CG character is only built at high resolution on the outside, and proxy muscles, tendons and fat layers are used for simulation purposes only. In this case, we had to build everything down to the skeleton in a renderable way because the cracked skin and extruded bones exposed a lot of the underlying anatomy.

Can you tell us more about its animation?

Ryan Watson, the fight choreographer from Damon Caro’s stunt team, played Doomsday for us. He performed every shot in a performance capture suit so we could recreate the body language and fighting style he had developed. Even though Doomsday is not a talking character, we captured his facial performance at the same time so we could always base the animation on Ryan’s interpretation of the character. As we did for the digital doubles, we scanned a full library of facial expressions that we could then remap onto our Doomsday face.

The fight involves a lot of FX and destruction. Can you tell us more about that, especially the use of Kali?

Early on in the final battle, Doomsday unleashes a giant burst of energy over the Gotham port, transforming the terrain within a 2-mile radius into black streaky rocks, inspired by the dry lava fields in Hawaii, covered in debris, smoke and fire. This new environment was a tremendous layout and rendering challenge, because each shot contained hundreds of ground sections and destroyed street props along with volumetric fire and smoke fluid caches.

In order to create photo-real images, the key for me was to be able to produce coherently lit shots, with each fire source producing spatially accurate illumination on the environment and characters, with the correct intensity, falloff and color. This meant we needed all the burning fire and smoke to be 3D fluid simulations so we could use them as volumetric light sources instead of just using 2D elements. Because of their speed, most of our characters could cover a lot of terrain in a single shot, so having all the lighting move correctly over them as they moved through space worked to great effect.
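To give a feel for why 3D fire sources matter, here is a minimal sketch that treats each fire cell as a small point emitter and sums its contribution at a surface point with inverse-square falloff. This is a simplification for illustration only, not how RenderMan or MPC's pipeline evaluates volumetric lights; the `Emitter` class, the `illumination` function and the `min_dist` clamp are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Emitter:
    """One fire cell approximated as a point light (illustrative only)."""
    position: tuple   # (x, y, z) in meters
    color: tuple      # linear RGB, e.g. an orange flame
    intensity: float  # arbitrary radiometric units


def illumination(point, emitters, min_dist=0.1):
    """Sum RGB light arriving at `point` from every emitter.

    Uses inverse-square falloff; `min_dist` clamps the distance so a
    point sitting inside a flame does not divide by zero.
    """
    total = [0.0, 0.0, 0.0]
    for e in emitters:
        dist_sq = sum((p - q) ** 2 for p, q in zip(point, e.position))
        dist_sq = max(dist_sq, min_dist ** 2)
        scale = e.intensity / dist_sq
        for channel in range(3):
            total[channel] += scale * e.color[channel]
    return tuple(total)


fire = [Emitter(position=(0.0, 0.0, 0.0), color=(1.0, 0.5, 0.0), intensity=4.0)]
near = illumination((2.0, 0.0, 0.0), fire)  # character 2 m from the fire
far = illumination((4.0, 0.0, 0.0), fire)   # same character 4 m away
```

Doubling the distance from 2 m to 4 m cuts the received light to a quarter, so a fast-moving character picks up a visibly changing warm light as it passes each fire. A flat 2D fire element carries no position in space, so it cannot drive this kind of falloff.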

Our asset team created a kit of tileable, 10-meter-wide ground rock sections, with different spike sizes and orientations, that were assembled to compose the ground coverage for each shot. We also generated a large library of crushed street props, cars, debris and building chunks that was then distributed over the ground to add chaos and complexity. We developed new tools so our layout artists could place and distribute the fire and smoke fluid caches in the same way we did with standard 3D assets. It was crucial that we could design the environments in each shot with composition and lighting in mind, as everything was tightly integrated.
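The tiling approach described here can be sketched as a simple grid layout: cover the area a shot needs with 10-meter sections, choosing a variant and a rotation for each so the repetition does not read. This is an assumption-laden toy, not MPC's layout tool; `layout_ground`, the variant names and the 90-degree rotation steps are invented for the example.

```python
import math
import random

TILE_SIZE_M = 10.0  # tile width taken from the interview


def layout_ground(width_m, depth_m, variants, seed=0):
    """Cover a width x depth area with randomly chosen ground tiles.

    Returns one placement dict per tile. The seed keeps a shot's
    layout reproducible between runs. All names are hypothetical.
    """
    rng = random.Random(seed)
    cols = math.ceil(width_m / TILE_SIZE_M)
    rows = math.ceil(depth_m / TILE_SIZE_M)
    placements = []
    for row in range(rows):
        for col in range(cols):
            placements.append({
                "variant": rng.choice(variants),
                "x": col * TILE_SIZE_M,
                "z": row * TILE_SIZE_M,
                # Quarter-turn rotations keep square tile edges aligned.
                "rotation_deg": rng.choice((0, 90, 180, 270)),
            })
    return placements


tiles = layout_ground(25.0, 10.0, ["small_spikes", "large_spikes"], seed=7)
```

A 25 m by 10 m patch needs three tiles in a single row. Fire and smoke caches could be scattered the same way, as placements with a position and an asset name, which is the spirit of treating them "in the same way we did with standard 3D assets".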

On top of that, we had to render the undestroyed part of Gotham, the characters and the effects… Again, I don’t think we could have rendered such complex shots in such an integrated way even a few months before we started the project, so it was a really great breakthrough for our team and for the look of the shots.

How did you create the huge environment of Gotham?

The beauty and the challenge of urban development is that each city is the product of centuries of development and progress. We approached Gotham with this complexity in mind. The risk in creating Gotham from scratch was that it would feel too generic and over-designed for a city supposedly charged with history. I suggested that it would be more convincing to create the base of Gotham using large sections of existing cities that had complementary architecture and layouts, and to build our own features on top.

We scouted locations in Detroit and New York, and eventually came up with a cohesive design that maintained their organic features and layout. As a first step, we used Google Earth to measure and define the assembly of the various pieces. We then went on helicopter and street-level shoots and captured thousands of photos of Manhattan’s Hell’s Kitchen and of Detroit’s oil refinery, abandoned city areas, train station and port. A lot of the existing architecture was modified to make it into Gotham City, but we kept the footprint of the city.

We took those thousands of photos and put them through our photogrammetry pipeline to create massive amounts of geometry and textures for each city section. This technique ensured that each building, each street and each city feature was completely unique, without repetition. This approach was only made possible by the update to RenderMan RIS, which is capable of rendering massive amounts of data and lights instead of relying on repeated assets. This all required a tight collaboration between our assets, environment, layout and lighting teams in order to support the thousands of assets that we rendered in each shot.

On MAN OF STEEL, you created the Envirocam. Have you enhanced this process?

For the Batman v Superman fight, we made extensive use of the Envirocam technique that we originally developed for MAN OF STEEL to capture the real locations in the correct lighting and create complete pre-lit 2.5D environments. With those sets re-created, we had complete freedom to position and move our virtual camera in order to create all-CG shots or transitions from real shots to CG environments and characters. Compared to the previous films we used the technique on, the IMAX resolution required major improvements in the precision of the photo calibration and of the geometric details we were re-creating to support the photo projections.
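To illustrate why calibration precision matters for photo projection, here is a minimal pinhole-camera sketch that maps a 3D point on the set geometry to a pixel in a calibrated photo. It is a standard textbook model, not the Envirocam implementation; `project_point`, the camera-space convention (+Z forward, +Y up) and the sample numbers are assumptions for the example.

```python
def project_point(point, focal_px, cx, cy):
    """Project a camera-space 3D point to pixel coordinates.

    point: (x, y, z) with +Z pointing away from the camera, +Y up.
    focal_px: focal length expressed in pixels.
    cx, cy: principal point (image center) in pixels.
    Returns (u, v) with v increasing downward, or None if the point
    is behind the camera. Hypothetical helper for illustration.
    """
    x, y, z = point
    if z <= 0:
        return None
    u = cx + focal_px * x / z
    v = cy - focal_px * y / z
    return (u, v)


# A point 1 m right, 0.5 m up and 2 m in front of the camera.
pixel = project_point((1.0, 0.5, 2.0), 1000.0, 960.0, 540.0)

# The same point with a 1% error in the calibrated focal length.
pixel_off = project_point((1.0, 0.5, 2.0), 1010.0, 960.0, 540.0)
shift_px = pixel_off[0] - pixel[0]
```

With these sample values, a 1% focal length error shifts the projected texture by 5 pixels horizontally. Because the shift grows with focal length in pixels, the same relative error produces a larger misalignment on a higher-resolution image, which is consistent with the need for tighter calibration at IMAX resolution.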

What was the main challenge on this show?

As described above, the hero digital doubles at IMAX resolution and the end battle environment were our biggest challenges on the project.

What will you take away from this experience?

A movie with Batman and Superman together for the first time, it doesn’t really get much better than that in terms of having a chance to create iconic comic book imagery. Zack is an incredibly talented visual director, which made it always challenging and rewarding at the same time.

Was there a shot or sequence that kept you from sleeping?

I didn’t lose sleep over anything really but I will admit I slept much better after we successfully rendered our first full frame digital Superman shot and our first complete shot of the end battle!

How long did you work on this film?

The shoot and pre-production took about 6 months for us and post-production took 9 months, so 15 months in total.

How many shots did you do?

Around 450.

What is your next project?

GHOST IN THE SHELL.

A big thanks for your time.

// WANT TO KNOW MORE?

– MPC: Dedicated page about BATMAN V SUPERMAN on MPC website.

© Vincent Frei – The Art of VFX – 2016
