In 2018, Jedediah Smith explained the work of Method Studios on PACIFIC RIM: UPRISING. He then worked on THE OA and talks today about AD ASTRA.

How did you and Method Studios get involved on this show?

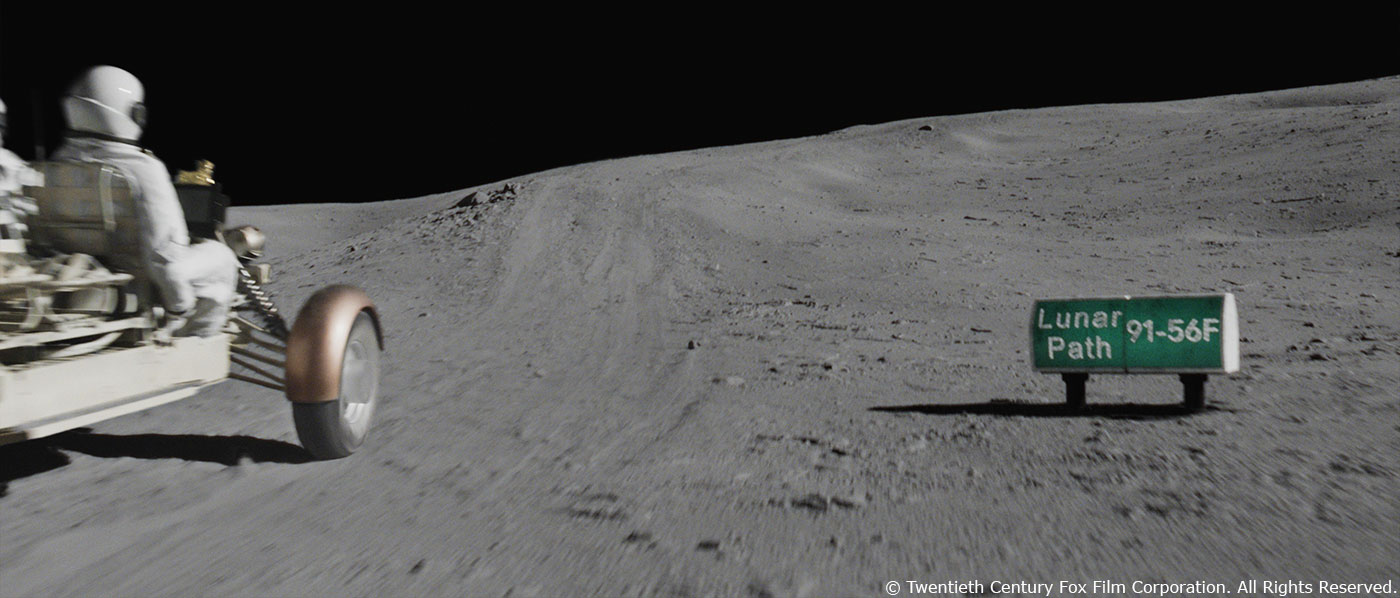

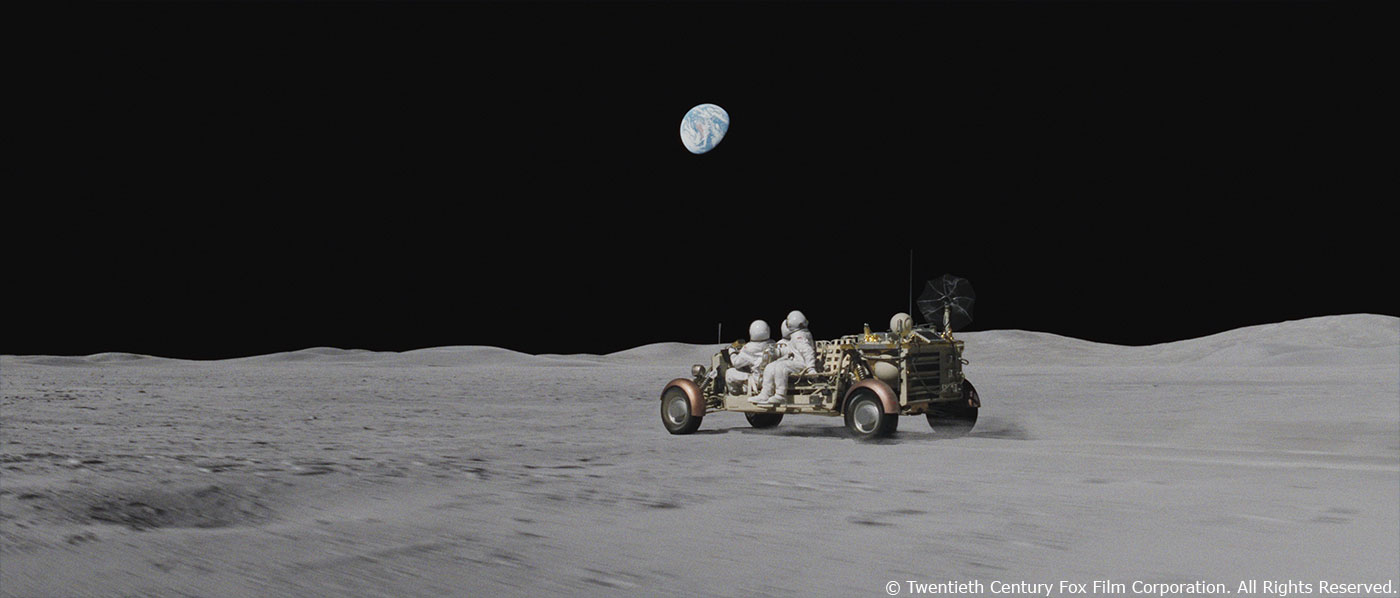

We actually got involved quite early during pre-production for AD ASTRA. Our animation department supervisor, Marc Chu, and his team created previs for the lunar rover battle sequence. This work helped refine the action sequences prior to principal photography.

Our VFX Supervisor, Aidan Fraser, oversaw the 2nd unit shoot in the blazing heat of the Mojave Desert in Southern California. I started as DFX supervisor on the show under Ryan Tudhope. Mid-way through the show, I became the VFX Supervisor and saw AD ASTRA through to completion.

What are the sequences made by Method Studios?

The majority of the work that we created was for the Lunar Rover Battle sequence on the moon. We also did a few shots for the Vesta Space Walk sequence where they stop off to investigate a distress signal, and a few shots in the tunnels on Mars.

How was the collaboration with director James Gray and Overall VFX Supervisor Allen Maris?

James had a specific vision in mind for the moon sequence. We worked in close collaboration with Allen to achieve that vision. It was a great experience. We had a lot of flexibility and creative freedom to suggest ideas and build great looking shots together. In VFX I’ve always found that the best work is created when there’s an open creative environment where the best ideas get put into the shots no matter the source.

What were their expectations and approach about the visual effects?

Realism and scientific accuracy were very important to James and Allen. The visual effects work had to feel grounded in reality and couldn’t take the viewer out of the film.

The lighting, the look of the moon surface, the simulation of the FX, all had to work within the constraints of what it would actually be like on the moon. Some cheats had to be made for the story, but overall I would say that sequence is pretty accurate.

The look of the moon sequence was really inspired by the look of the 1970s Apollo NASA missions, from the design of the rovers and the look of the space suits to the gritty aesthetic. We spent a lot of time looking at all the reference we could get our hands on. All the work we did was guided by these images.

Can you tell us more about the filming of the rover battle sequence?

The location photography for the rover battle sequence was unlike anything I’ve seen before! The cinematographer, Hoyte van Hoytema, had a really unique idea for this sequence. Most of the movie was shot on film. For this sequence they decided to shoot with an infrared-modified Arri Alexa XT and an Arriflex film camera on a stereo rig.

The practical footage for the rover battle sequence was shot on location in the Dumont Dunes near the Mojave Desert. This location definitely looks like an alien planet, but there are still things you recognize as being Earth-like, such as blue sky, clouds, plants, wind and water erosion patterns in the desert. With the infrared footage, the sky would become almost black, and the desert would get this bright, washed out, ethereal look reminiscent of the moon reference.

So what we did for many of the wide establishing shots with the rovers in frame was to do the reverse of a normal stereo photography workflow. Instead of using both “eyes” to create a stereo image, we used the infrared Alexa footage as our master. We equalized the color in both plates. Then we used Foundry’s Ocula toolset to warp the film footage to align perfectly to our master. We used the luminance from the infrared plate, and the color from the film plate for the rovers and astronauts. We ended up replacing most of the terrain with our CG moon surface, but the look of that photography really helped inspire the aesthetic of the sequence.
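The luminance/color merge described above can be sketched per pixel. This is only an illustration, not Method's actual Nuke or Ocula setup: it assumes the two plates are already aligned, that values are linear-light RGB in the 0..1 range, and that Rec. 709 luma weights are an acceptable stand-in for whatever weighting the team really used. The names `luminance` and `merge_pixel` are hypothetical.

```python
# Assumption: Rec. 709 luma weights on linear-light RGB values (0..1).
REC709 = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    """Weighted sum of the R, G and B channels."""
    return sum(w * c for w, c in zip(REC709, rgb))


def merge_pixel(ir_luma, film_rgb, eps=1e-6):
    """Keep the film pixel's color ratios, but rescale it so that its
    luminance matches the infrared plate's luminance."""
    film_luma = luminance(film_rgb)
    if film_luma < eps:
        # The film pixel is black, so there is no color to keep:
        # fall back to a neutral grey at the infrared luminance.
        return (ir_luma, ir_luma, ir_luma)
    gain = ir_luma / film_luma
    return tuple(c * gain for c in film_rgb)


# Hypothetical sample: a bright infrared value and a warm, darker film pixel.
merged = merge_pixel(0.8, (0.30, 0.20, 0.10))
print(merged, luminance(merged))
```

The result keeps the warm hue of the film pixel while taking on the brightness of the infrared plate, which is the general idea behind using one plate for luminance and the other for color.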

The sequence also contained many closeups of the actors performing behind their spacesuit visors. These were shot on film, on a stage over black. They tried to mimic the lighting with a single distant light source and very little fill.

When you’re creating CG worlds, having a photographed image as a reference point helps so much. One of the cool things about this sequence is that nearly every single shot had a plate that was shot. Even shots that ended up becoming full CG shots in the end had a plate that we started from. I think this is one of the big things that really contributed to the visceral realism of the sequence.

Can you explain in detail about the moon creation?

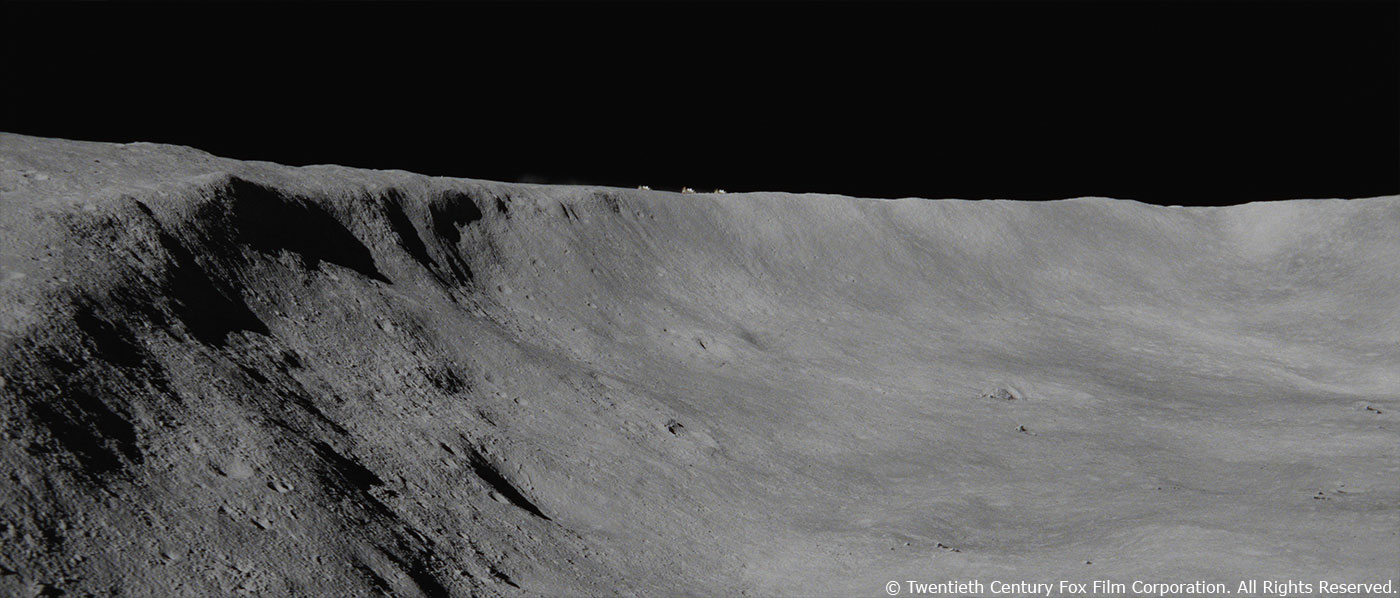

The first big challenge was layout. Since the action in the sequence takes place over a huge swath of moon terrain, we started by sketching out the geometry of the terrain.

We knew that they would start their journey at the moon port, and end at the military base where they board the Cepheus and start the next leg of their journey. Along the way, they drive through some hilly terrain, past some craters, encounter some electrostatically levitating moon dust, travel through a big flat basin where they get attacked, pass a solar power plant, spin into a giant crater and then travel past the terminator line onto the dark side of the moon.

We built a low detail model for the whole environment. As we got the cameras and visors tracked in 3D, we would place them into our virtual terrain. We knew roughly where the shot was in our narrative, and we could go location scouting and find a good-looking background for each of the shots. Our layout lead, Robin Lamontagne, was instrumental in seeing this work through.

The next big challenge was creating a photoreal moon surface. The NASA Apollo Archives were a great source of reference. We were able to download and color balance those film scans and use them as color, lighting, and terrain reference. We had a crew screening at the studio of the “For All Mankind” documentary. We really immersed ourselves in the moon and tried our best to figure out what makes it look like it does.

The first big indicator is lighting. Since there is no air on the moon, it is missing some things we are familiar with on Earth. First, the sky is black. There is no atmosphere to scatter the light from the sun, so there is no fill light. The only light source is harsh direct sunlight, and indirect bounce light. Since there is no air, light doesn’t scatter in the air. This means there is no atmospheric perspective to give us distance cues. A shadow 1m away is the same density as a shadow 1km away!

The terrain appearance is also key. On Earth, we are used to a terrain that has been formed by wind, rain, plants, volcanic and geologic forces. On the moon, the only thing that formed the landscape is millions of years of meteor impacts. The terrain is formed from craters within craters, resulting in hills pockmarked by tiny craters, and rocks and debris scattered from meteor impacts. Another thing that is subtle but really throws you off is the curvature of the horizon. On the Earth, the horizon is around 5km away, and on the moon, it’s only 2.4km away, which means everything feels closer – like you’re standing on a hill and you can’t see over the edge.
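The horizon figures quoted above follow from simple geometry: for a smooth sphere of radius R and an eye height h, the distance to the horizon is sqrt(2Rh + h²). Here is a quick sketch of that calculation. The 1.7 m eye height is an assumption, and the radii are the commonly quoted mean values for the Earth and the moon.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean radius of the Earth
MOON_RADIUS_M = 1_737_400   # mean radius of the moon
EYE_HEIGHT_M = 1.7          # assumed standing eye height


def horizon_distance_m(radius_m, eye_height_m):
    """Straight-line distance from the eye to the horizon on a smooth sphere."""
    return math.sqrt(2 * radius_m * eye_height_m + eye_height_m ** 2)


for name, radius in (("Earth", EARTH_RADIUS_M), ("Moon", MOON_RADIUS_M)):
    km = horizon_distance_m(radius, EYE_HEIGHT_M) / 1000
    print(f"{name}: horizon at about {km:.1f} km")
```

With these assumptions the Earth horizon lands a little under 5 km away and the lunar horizon at roughly 2.4 km, in line with the numbers in the interview.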

Inspired by this terrain, we created a system to mimic it. We used Houdini to process high-res displacement geometry and as a tool to scatter instanced rock geometry in strategic places. We used Substance Designer to create believable patterns of craters and varying textural looks for the moon surface. This set of tileable displacement maps and textures was piped into our Katana / PRMan pipeline for lookdev and lighting. My CG supervisor, Remy Normand, was key in developing this system and getting shots created with it!

How did you handle the specific moon lighting challenge?

The lighting on the moon is not exactly flattering. It’s harsh direct light with no fill light except indirect illumination from reflecting surfaces.

In the reference photographs of the moon, one of the things that you notice is how different the moon looks in different lighting conditions. When the sun is at a low angle, you see all the textural detail of the moon surface, and it looks really alien and interesting. When the sun is behind you, everything gets really bright and washed out. Since we couldn’t really cheat to make the lighting soft and beautiful, we ended up cheating the light direction very carefully in each shot to try to get an interesting cinematic look. In some cases, we added bounce cards to get some fill light, although in many cases the moon surface itself actually did this for us!

How did you create the rovers and the digital doubles?

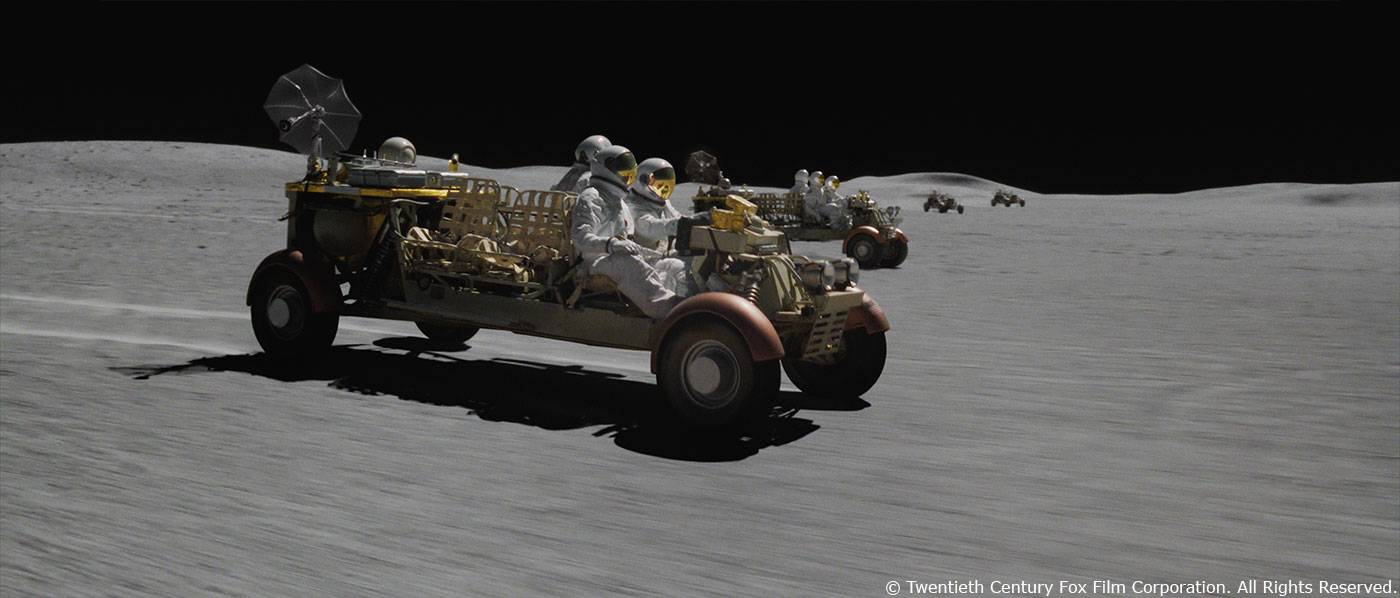

On location they had a fully functioning “American” rover and two fully functional pirate rovers. In the story, there are two additional support rovers that accompany our heroes on the main rover. These were created by us and added in CG, along with their astronaut drivers and passengers.

The process of creation was pretty standard: we received reference photographs and modeled, textured, and lookdeved these assets to match their real-world counterparts.

For our digital astronauts, these are seen mostly in mid-ground and distant rovers, and in reflections. The shot where the driver’s body is thrown out of the rover is also a full CG digital astronaut.

Can you tell us more about the environment creation?

Halfway through post-production the client realized that they needed some wide establishing shots to set the scene for the action and help the edit make sense. We created several wide “space drone” shots where we had a wider view of the moon surface and the rovers as they drove. These were fully CG shots and a great opportunity for us to show off our great-looking moon surface! We animated rovers swerving between craters, kicking up dust, as the pirates chased after the hero rover.

We also designed and created a solar power station that one of the pirate rovers smashes into.

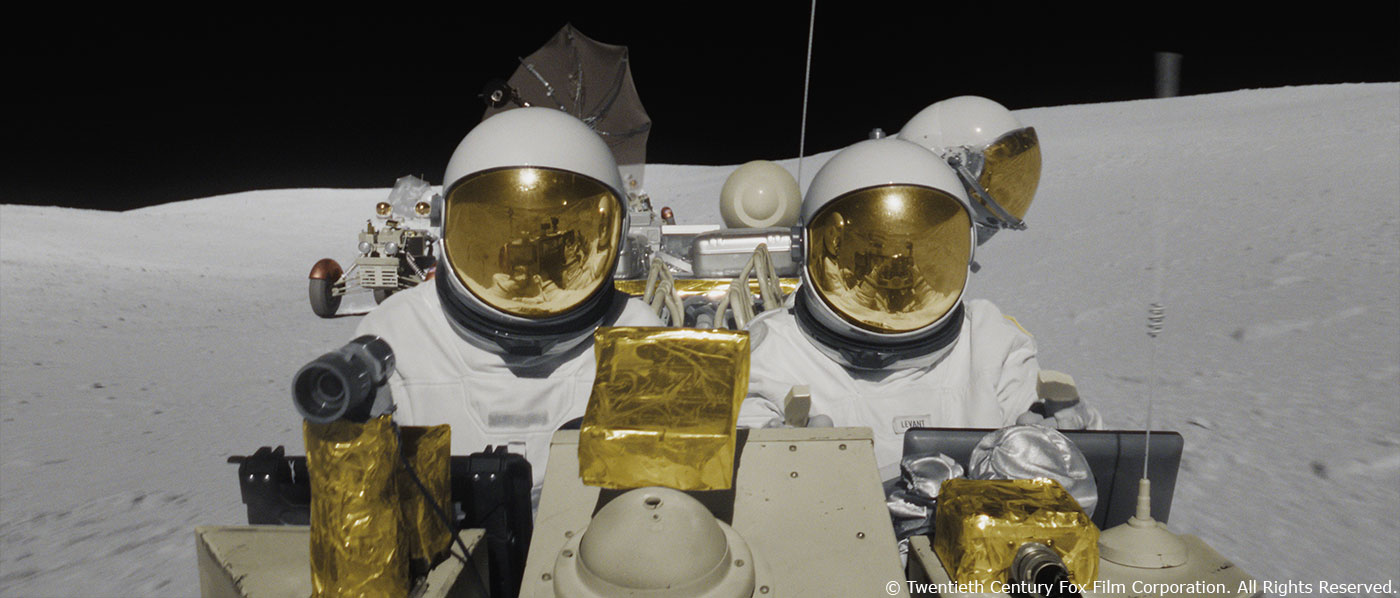

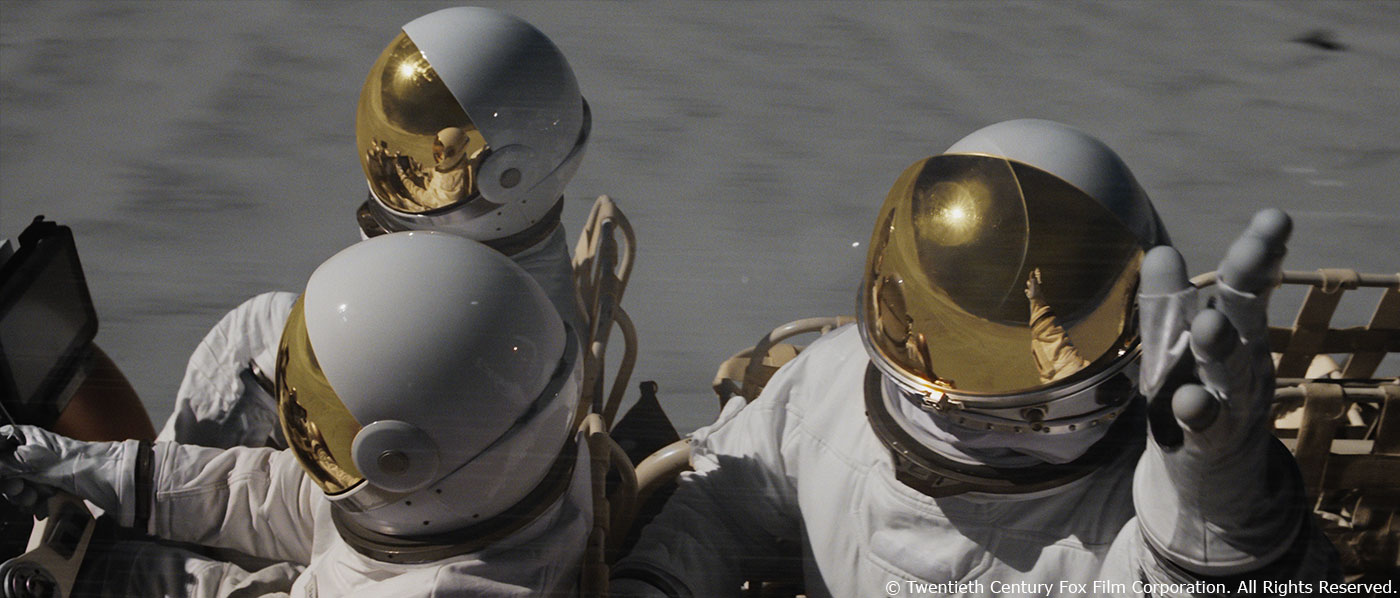

How did you handle the helmet reflections challenges?

It’s definitely not the most glamorous thing in the sequence, but the visors were our biggest challenge. A significant portion of the sequence included closeups of the actors’ faces seen through visors. They shot on a stage with the rover, using practical visors. I think that approach helped contribute to the realism of the performances.

The visors on the astronaut suits are a spherical reflective object, so they basically reflect half of the environment. Of course, because the shots were shot on a stage, the plate visors were either reflecting black, or even worse, camera crew. I’m going to go on a bit of a nerd-ramble here, so hopefully you stay with me…

In order to tell the story of our heroes moving across the moon terrain, we had to have moving reflections of the moon surface visible in the visors. We created a matchmove camera in CG, object tracked the movement of the visors with a CG version of them, and then placed these objects with our CG rover, moving across the moon terrain. This gave us a convincingly moving reflection, but the challenges were just beginning!

In the practical visors that we were trying to match, the refraction (the things you see through the surface) was tinted dark and green, and the reflections (the objects you see reflecting off of the surface) were tinted orange. In some cases, the actors were not visible enough in the refraction of the visors, so we had to carefully boost the brightness of the performer.

Often the plate visors would have visible reflections of the other astronauts and the rover. If there were reflections in the plate visors, we would try to preserve them. To do this, we rotoscoped the reflections and composited our CG reflection of the moving moon surface behind them.

To fully match the look of the reflections, we also rendered a reflected depth pass so that we could accurately re-create the depth of field of the reflected objects. A reflected object’s circle of confusion is the same size as that of an object sitting at the distance from the camera to the reflecting surface plus the distance from the reflecting surface to the object. As a result, the reflected rover and the reflected moon surface would usually be noticeably different in their levels of defocus.
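To make the reflected depth of field idea concrete, here is a rough sketch using the standard thin-lens circle of confusion formula. It treats the visor as a flat mirror, as the description above does (a real convex visor would shift the apparent distances), and every number in the example (50 mm lens, f/2.8, distances) is made up for illustration. The function names are hypothetical.

```python
def circle_of_confusion_mm(focal_mm, f_number, focus_dist_mm, subject_dist_mm):
    """Thin-lens blur circle diameter on the sensor for a subject at
    subject_dist_mm when the lens is focused at focus_dist_mm."""
    aperture_mm = focal_mm / f_number
    return (aperture_mm * focal_mm * abs(subject_dist_mm - focus_dist_mm)
            / (subject_dist_mm * (focus_dist_mm - focal_mm)))


def reflected_distance_mm(camera_to_mirror_mm, mirror_to_object_mm):
    """Flat-mirror assumption: a reflected object defocuses as if it sat at
    the camera-to-mirror distance plus the mirror-to-object distance."""
    return camera_to_mirror_mm + mirror_to_object_mm


# Hypothetical setup: camera 1 m from the visor, focused on the visor.
focal, stop, visor = 50.0, 2.8, 1000.0
rover = reflected_distance_mm(visor, 1500.0)     # rover 1.5 m from the visor
terrain = reflected_distance_mm(visor, 50000.0)  # terrain 50 m from the visor

print(circle_of_confusion_mm(focal, stop, visor, rover))
print(circle_of_confusion_mm(focal, stop, visor, terrain))
```

Because the two reflected objects sit at very different effective distances, they end up with different blur sizes, which is why a reflected depth pass is needed rather than a single defocus value for the whole visor.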

Also, some visors were clear. For these, we needed to match the green refraction and gold reflection look of the others. In some cases, these visors had visible reflections that had to be painted out over the actors’ faces, because once we darkened the refraction we couldn’t separate refraction from reflection. For these very difficult cases, we had to create fully CG digital doubles to replace the reflected astronauts. It was a lot of work and a huge challenge for the compositing team.

Can you explain in detail about the FX and creation and animation?

There was a lot of FX work on the show. For most shots where we see rovers, we added moon dust being kicked up from the tires. For the look and behavior of this dust, we found this great video reference from one of the Apollo missions of an enthusiastic astronaut doing donuts in a rover on the moon. The behavior of the dust as it was kicked up into the vacuum was very interesting. It formed these characteristic C shapes and settled back down to the ground slowly. We tried to mimic the look and feel of that dust behavior in our FX simulations, all of which were done at one third of Earth’s gravity and without air.

The pirate rover attack had a lot of FX work as well. The pirates had these projectile weapons called Stilettos, which fired a molten projectile and had a huge amount of kinetic energy. We created tracer effects for the Stiletto bullets, as well as created impacts on the moon terrain that sent dust and rocks flying into the vacuum over the rovers.

Two of the support rovers get disabled and destroyed in short succession. These shots were fully CG. We started with a plate that had the rough camera move. We keyframe-animated our rovers and astronauts, and then created FX simulations for the rovers being torn apart, as well as the Stilettos and the dust impacts.

Many of these are point-of-view shots where we see the action from Roy McBride’s perspective. For these shots, we added a “POV treatment” where the moon surface was tinted slightly greener by the visor, and then added additional reflection elements on the edges of frame motivated by the sun reflecting off of the visor surface. This little sequence of shots feels really dangerous and immersive, because of these POV shots.

Any other big challenges on the sequence?

The stereo camera rig was a bit unwieldy, so they weren’t able to shoot with both film and infrared cameras in all cases. For these shots, we received only an infrared plate. These were a huge challenge not only for the normal reasons (visors, moon surface, FX), but also because we had to colorize the rovers. We basically had to rotoscope each individual material in the footage, in addition to the normal roto we needed to composite the rovers over the background. My rotopaint supervisor, Tony Como, and his team did a great job managing the complexity and simplifying it for the compositing team.

Generally speaking, the show was a huge challenge for compositing. Between the color pipeline for managing the film/infrared shots, the visors’ reflections, properly integrating so many disparate plate elements and CG, and recreating the alien look and feel of the moon, the compositors had their hands full. I have a huge amount of respect for the dedication and hard work of Compositing Lead Thomas Trindade, Compositing Supervisor Antoine Wibaut, and their team.

Can you elaborate about the Mars underground environments?

For the underground Mars shots, we did some simple environment work to make the tunnels feel more vast. We took the plates, which were shot with a red light in what I think was an underground military bunker, and extended the length of the tunnels so that they felt more expansive. We also added some water tanks along the edges of the tunnels.

Can you explain in detail about the spaceships creation?

For the Vesta Spacewalk sequence, we designed and created the Vesta spacecraft, an abandoned scientific research spacecraft. I’m not sure of the purpose of their experiments; all we know is that they involved baboons! For the design of the spacecraft, we referenced the modular design of the International Space Station. We built a lot of detail into the model, and then added wear and tear from the infrared radiation on the metallic exterior.

These shots were also shot on a stage, with the performers on wires wearing their space suits. We painted out the wires, added stars in the background, and lit and rendered our Vesta spacecraft, placing it into the visors as a reflection, and into the backgrounds of the shots.

How did you handle the deep space lighting challenge?

For the Vesta lighting, we imagined that we were quite distant from the sun, so the intensity of the light would be less bright. We would see stars slightly more clearly in the background and the sunlight reflecting off of the Vesta would be less bright than it would near the Earth. We also cheated a bit and added some bounce light to make it look cinematic. We tried to keep it subtle though. The end result is a good balance that I think reads realistic while still creating a pleasing image.
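The dimmer sunlight described above follows the inverse-square law: light intensity falls off with the square of the distance from the source. Below is a small sketch of how one might estimate that falloff in photographic stops. The interview does not say how far from the sun the Vesta is, so the distances here are purely hypothetical examples, measured in astronomical units (1 AU is the Earth's distance from the sun).

```python
import math


def relative_irradiance(distance_au):
    """Sunlight intensity relative to Earth's distance (inverse-square law)."""
    return 1.0 / distance_au ** 2


def exposure_offset_stops(distance_au):
    """The same falloff expressed in photographic stops (negative = darker)."""
    return math.log2(relative_irradiance(distance_au))


# Hypothetical distances: Earth's orbit, roughly Mars' orbit, and 2 AU.
for d in (1.0, 1.5, 2.0):
    print(f"{d} AU: {relative_irradiance(d):.2f}x, "
          f"{exposure_offset_stops(d):+.2f} stops")
```

At twice the Earth's distance the sun delivers a quarter of the light, or two stops less, which gives a sense of how much dimmer a key light could plausibly be before any cinematic cheating.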

Which shot or sequence was the most challenging?

One moment in the moon sequence that was particularly challenging was the pirate rover crashing into the solar station. They shot this as a real stunt in the desert. These shots were infrared-only plates, so we basically started with a black and white plate of a pirate rover being smashed into a concrete pillar in the desert. We rotoscoped all the little pieces, figured out what color they were, composited the plate elements with our CG moon surface and CG solar power station, then layered in additional FX passes of dust and debris, and added our point-of-view visor treatment on top. It required a huge amount of compositing work. There is even one shot at the tail end of this little sequence where we see the aftermath of the crash in the reflection of the visor. For this shot, we created a full CG version of the crashing rover, just so we could see the event in the reflection from the right perspective.

Is there something specific that gave you some really short nights?

The first time I saw a visor shot actually working I slept very well!

What is your favorite shot or sequence?

My favorite shot in the sequence is after they drive onto the shadow side of the moon and they call in the missiles from the moon base. It’s a good example of collaboration. The FX artist working on the shot, Phillip Wrede, found this great reference of a science experiment simulating a meteor impact in a vacuum. The debris sent up by this impact formed a really unusual conical shape, with a spiky center core. We showed this to the client, and they loved the look of it. We built an FX simulation at the proper scale, with additional rocks and debris. For the camera move, the director had a specific idea in mind. One take after another, we weren’t quite there, then Allen Maris put tracking markers on the ceiling of his bedroom and sent through this perfect mockup of the camera move shot with his cell phone for us to match. We nailed it on the next take. I had this idea that I wanted to do for the lighting: the environment in perfect darkness, stars in the background, with the light from the sudden explosion illuminating the moon surface. That’s one thing I love about VFX, how collaborative it is. So many good ideas from so many different people go into creating these little moments in the movie.

What is your best memory on this show?

Teamwork. The show had its fair share of challenges, but we had a great crew, and because of that, the experience is one I will remember with fondness.

How long have you worked on this show?

The 2nd unit shoot in the desert happened in Summer 2017. I started work on the show January 2018. We wrapped the first portion of the work November 2018, then we did around 20 additional shots for the sequence in May to June 2019.

What’s the VFX shots count?

We worked on around 175 shots total, though the final shot count is around 150 shots.

What was the size of your team?

We had a pretty small but dedicated team. We had four modelers, two environment artists, three matte painters, two matchmove artists, four animators, three different riggers who helped out during different parts of the show, five FX artists, one lighting lead and five lighters, 21 compositing artists at our peak, with a core team of 12, and seven in-house roto artists.

What is your next project?

After AD ASTRA, I spent some time with a telepathic octopus on THE OA Season 2, and now I’m working on the DC Comics Harley Quinn spinoff movie BIRDS OF PREY.

A big thanks for your time.

WANT TO KNOW MORE?

Method Studios: Dedicated page about AD ASTRA on Method Studios website.

© Vincent Frei – The Art of VFX – 2019