Bryan Hirota told us about the work of Scanline VFX on BATMAN V SUPERMAN: DAWN OF JUSTICE in 2016. He then worked on THE PROMISE and JUSTICE LEAGUE.

How did you get involved on this show?

I think due to Scanline’s prior experience with DC films coupled with some of the specific demands of this property, it made sense to explore how we might be involved. After some discussions about various scenarios with the studio and filmmakers we were lucky enough to be brought on board in the first half of 2017.

How was the collaboration with director James Wan and VFX Supervisor Kelvin McIlwain?

Working with both Kelvin and James was a very creative and cooperative experience. With the film’s post production being done on the WB lot in Burbank, being based out of our Los Angeles studio allowed us to meet with Kelvin and James face to face every week, which proved extremely valuable in helping us understand what was working for the film and what wasn’t. In general we’d meet with Kelvin first to discuss any submissions and questions that either side might have. We’d cull out anything that wasn’t ready for James’ review and then present the remaining material to James for his feedback. James was constantly looking for ways to plus up the shots, and it was nice that he never just settled for something that wasn’t his aesthetic.

What were the sequences made by Scanline VFX?

The main sequences produced by Scanline are: the lighthouse and its surrounding environment, the “Aquaman” title card that follows the Boston aquarium, Aquaman pushing the submarine to the surface and rescuing the sailors inside, Orm’s tidal wave that sweeps away Arthur and Tom including the rescue and aftermath, Black Manta being paid by Orm for the submarine’s delivery, and Arthur and Mera’s visit to the Kingdom of the Trench.

How did you organize the work with your VFX Producer?

Julie Orosz (VFX Producer) and I have done a number of shows based out of Scanline’s Los Angeles studio with the lion’s share of department supervisors and artists in our other locations. The company has streamlined working across multiple facilities, and the in-house 4K video teleconferencing system (dubbed Eyeline) allows for face-to-face production meetings, which helps team cohesion. We had considerable production management help on the floor in the various locales, and having Julius Lechner’s (DFX Supervisor) tireless oversight in Vancouver, where the majority of our artists resided, proved invaluable.

How did you create and animate the Aquaman logo?

Creating the title shot of the movie was especially exciting for us. The idea of going from the aquarium into an ocean full of fish with the logo being part of these swarms was an interesting challenge. On a technical level we had to hook up with the end of Rodeo’s aquarium work and manage up to 60,000 fish, which had to be quite high resolution since the camera was traveling right through the middle of them. To handle this amount of geometry we had to modify our internal crowd loader and find a new way to fit all this data into memory. Creatively we had a lot of fun figuring out how to make a swarm of fish form readable letters while still behaving naturally. Looking at real-world reference we found that swarms can have quite a distinct outline and that streams of fish changing direction create very readable shapes. We combined these two qualities by having each letter consist of some very defined spiraling swarms of fish for readability as well as some wider spread groups to tie them all together.

Can you explain in detail about the creation of the Lighthouse and its coastal environment?

The film opens on a stormy night near a lighthouse in the fictional town of Amnesty Bay. The home’s occupant, Tom Curry, discovers Queen Atlanna washed up on the rocks. This location is returned to throughout the film. Creating the lighthouse required combining a full-size house with the base of the lighthouse tower constructed on Hastings Point in New South Wales, Australia. Additional house and dock sets were built on sound stages at Queensland’s Village Roadshow Studios. Supplemental environmental footage was shot with both motion and still cameras and drones in Newfoundland, Hastings Point, and Palos Verdes, California. This footage was used to place the Hastings Point house into a New England location and remove any sense of Australia from the surrounding environment. A digital build-out was done to complete the lighthouse tower and extend the dock fully out to sea. This material provided reference for the digital version of the environment, house and ocean that would be utilized whenever the photographic elements would not suffice. One of the more elaborate hookups started inside the living room of the lighthouse: as Tom and Atlanna get to know one another, the camera pushes into a toy snowglobe with a tiny lighthouse inside, which ultimately transitions into the real lighthouse tower, showing a time jump to winter when Tom and Atlanna have fallen in love. The shot stitches together the interior living room set with a CG transition through the snowglobe to a blue screen element of Tom and Atlanna set in a digital winter coastline.

There is then a big fight between Atlanna and soldiers. How did you create their armor?

The Atlantean armor was a practical costume with the exception of being visor-less and having a blue screen panel in the chest where the Atlantean technology would go. James wanted to tell the story that the suits had technology that held in water akin to space suits for humans. We designed holographic displays built into the suits to display vitals and other pertinent information. When the suits were breached fluid simulations were required as the water would rush out of the sources of puncture.

With a project like AQUAMAN, how did you improve your water and FX pipeline?

To help give the boat during the trench attack a more natural behavior on the water we developed a real time version of our ocean solver that was also able to drive the boat’s secondary motion based on physical properties. This allowed us to set the look of our ocean already in the layout stage which we could then pass on to our Flowline department to create high resolution simulations. In addition to that we completely reworked the way we simulate our oceans, resulting in cresting and churning wave peaks crashing onto themselves with nice interaction around the boat and the creatures in the water, while still staying crisp and defined where the waves had not collapsed yet.

Can you tell us more about the creation of the submarines?

Production had built a partial section of the exterior for use in the water tank in Australia. The Russian submarine was defined as a member of the Akula class. In our research we discovered there were multiple specific types of submarines that made up this class. An additional complication was that the cross section of the hull set piece was slightly different from the cross section (based on what our research turned up) of the real Akulas. We presented James with our findings and some recommendations for what we might do. We settled on a hybrid of a few features. Once we had placed Manta’s submarine docked on top, the space ahead of the conning tower felt too confined and interfered with Arthur’s leap to the top. To accommodate all of the requirements we ended up with our stretched limo version of the sub.

For the Manta sub we received a base model from production which needed to be detailed out. We took inspiration from some of the new flying wing drones that are being produced and some advanced submersible craft.

Can you explain in detail about the creation of the shots with the tsunami?

Fed up with mankind’s militarization and pollution of the world’s oceans, Orm fires a warning shot at the human race by creating a gigantic tidal wave to rid the Atlantic Ocean of the world’s navies and rubbish. The tidal wave was realized with a large-scale simulated wave that carries military ships along as it crests and collapses as it hits the coast. The simulated wave was integrated with a combination of day for night footage, blue screen shots for the actors in truck interiors, a truck on a rotisserie rig, and an interior cabin in a water tank. As we began to integrate the wave with some of the aerial footage of the coastline, we ended up building a digital match so we could have the flow of water affect the treeline. The forces would bend the branches, trunks and if strong enough, rip the trees from the ground and have them travel along with the turbulent flow. Arthur was shot in the aforementioned rigs on a bluescreen stage searching for his father through a now underwater digital coastline with numerous FX simulations for debris.

How did you create the digital doubles?

The digital doubles used in this sequence we inherited from ILM. We received the geometry and textures along with a turntable to match to. We would then retrofit them into our character pipeline and create our solutions for cloth and hair.

Mera saves Arthur by keeping the water away. How did you design and create this effect?

When Arthur sees Mera creating an air pocket to protect his human father, Arthur swims towards and jumps through the wall placing him in the center of the air pocket. This moment was one of James’ signature ant-farm style shots for the film. He wanted to see water visualized as if there was a plexiglass wall 20 feet from the camera of infinite size. To give Arthur the appropriate look, the plate of him underwater was shot dry for wet in the stunt rigs and the b-side was shot with Arthur in full costume leaping through an SFX laminar flow to give him interactivity with a real-life wall of water. We then brought the two passes together and registered them and their movement in Nuke and surrounded them with the hillside and volume of water simulated with forces to hold it back with Mera’s hydrokinesis. At the end of the sequence, a news program shows damage around the world caused by the wave. We took a variety of aerial and stock footage to place military ships thrown onto land and applied appropriate damage.

Later in the movie, Arthur and Mera are attacked by the Trench creatures. How did you design and create these creatures?

While it was originally thought that the Trench would be realized with practical costumes with stunt performers, it became apparent early on that we would not be able to achieve the kind of dynamic performance James wanted on set. Freed from the physical restrictions, James was free to pursue a design without compromise. We went through numerous design passes to adjust proportions and presented reference of different animals and creatures as inspiration for creating a functional biology and behavior for the creature.

The Trench required multiple levels of simulations. The initial step was a full body muscle simulation where the artist had the ability to art direct the tensioning and bulging of the muscles and subtissues while still staying fully dynamic. On top of that we would simulate fat and tissue, followed by a skin simulation. The final step was simulating the fins and fin spines. Every trench that came from animation had their fins simulated at a minimum. Since that was such a large number, we optimized our workflow so that our CFX artists could simulate any given number of trench simultaneously with minimal setup time. Hero trench had full muscle and skin simulations, custom for each shot. We also used the muscle simulations to extract correct deformations onto our animation rigs, which ensured that simulated and rig-driven characters behaved in a similar way.

Can you tell us more about their rigging and animation?

Because the design of the creatures changed from more bulky and muscular, to more emaciated and sinewy, our rigging strategy had to change as well. A primary problem was the skeletal features that protrude out of the skin, and essentially anchor the skin around it to that particular spot, preventing any skin sliding. We used around 130 “mini-rig” muscle joints to get movement from tendons, muscles, and bones. Detail went as small as the tendons on the back of the hands to spittle that stretched between his teeth. Pose drivers would drive transformations of these joints to achieve a desired shape. To give a bit more life to a creature with no eyes, we baked out shapes of skin slide over the head ridges, and added 6 cycles of pulsating flesh on his head.

How did you create their textures and shaders?

Once we were able to define more clearly what the specific details of the Trench should look like, based on some studies of real marine life and other animals, our creature team is pretty adept at creating textures and developing shaders.

How were the shots with the actors underwater filmed?

A key concept for the film was to shoot the underwater action dry for wet. Using a variety of rigs and wire harnesses, the actors were hoisted and maneuvered to provide the mobility needed to simulate movements underwater. Highly detailed digital versions of their bodies and costumes were used to help repair the image where the rigging covered too much of their body. Having detailed matchmoves of their bodies allowed for replacement of their hair, beard, or costumes with simulated versions to aid in the illusion.

Can you tell us more about their hair simulations?

We used our past experience in affecting physically true simulations with specific targets to help shape the simulations where appropriate. It was important to keep the simulations messy and real but avoid anything outright ugly. We made sure to emulate the clumping together of hair to provide a natural look and to avoid obvious interpenetration of strands.

We discover the crazy amount of creatures in the Trench. How did you manage the crowd creation and animation?

We used different approaches depending on the needed complexity of the crowd behavior, the number of trench creatures, or the advantages of specific tools. When Arthur and Mera are still on the boat we had large crowds approaching the boat while reacting to the movement of the ocean as well as driving hi-res interactive water simulations. They were approaching at different depths below the surface, could breach through the water, dive back under and fight for the best position around the boat. For this part of the sequence we used Massive combined with a custom in-house importer for 3ds Max, which was also very useful for the creation of the title shot of the movie. Underwater we used Miarmy for most of the shots as well as a solution based on Thinking Particles with custom geometry handling for the shots with the largest number of creatures. This way we could keep the memory consumption as well as render times fairly low. To fill the gaps and to add the thousands of trench we see in most shots, Thinking Particles was used to populate the numbers we needed. Particles were artistically directed along paths, and flocking logic was implemented as a somewhat cheap solution to a crowd solve, with simple rules like avoidance and turbulence. Due to the simplicity of the system, we could then cache our particles out per shot in literally minutes. Then at render time, swim cycles were instanced onto the thousands of particles and we took advantage of V-Ray’s render-time instancing to be able to quite easily render all the trench with relatively short render times.
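As a rough illustration of the kind of cheap particle crowd described above, here is a minimal Python sketch. It is not Scanline’s Thinking Particles setup: the function names, parameters and default values are all hypothetical, and the brute-force neighbor search stands in for whatever acceleration a production tool would use. Each particle follows a guide path, is pushed away from close neighbors (avoidance), gets a small random jitter (turbulence), and its positions are cached per frame so that swim cycles could later be instanced onto them.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    pos: list    # current position [x, y, z]
    vel: list    # displacement applied this frame
    t: float     # progress along the guide path, 0..1


def point_on_path(path, t):
    """Linearly interpolate a polyline at parameter t in [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    scaled = t * (len(path) - 1)
    i = min(int(scaled), len(path) - 2)
    f = scaled - i
    a, b = path[i], path[i + 1]
    return [a[k] + (b[k] - a[k]) * f for k in range(3)]


def simulate_crowd(path, count, frames, seed=0, speed=0.02,
                   follow_gain=0.2, avoid_radius=0.5, avoid_gain=0.1,
                   turbulence=0.05):
    """Return a per-frame cache of particle positions (hypothetical API)."""
    rng = random.Random(seed)
    particles = []
    for _ in range(count):
        t = rng.random() * 0.1                      # stagger the start
        start = point_on_path(path, t)
        pos = [start[k] + rng.uniform(-1.0, 1.0) for k in range(3)]
        particles.append(Particle(pos, [0.0, 0.0, 0.0], t))

    cache = []
    for _ in range(frames):
        for p in particles:
            # Rule 1: steer toward a target that advances along the path.
            p.t = min(p.t + speed, 1.0)
            target = point_on_path(path, p.t)
            steer = [(target[k] - p.pos[k]) * follow_gain for k in range(3)]
            # Rule 2: avoidance, push away from neighbors that are too close.
            # (O(n^2) for clarity; a spatial grid would be used at scale.)
            for q in particles:
                if q is p:
                    continue
                d = [p.pos[k] - q.pos[k] for k in range(3)]
                dist = math.sqrt(sum(c * c for c in d))
                if 0.0 < dist < avoid_radius:
                    push = avoid_gain * (avoid_radius - dist) / dist
                    for k in range(3):
                        steer[k] += d[k] * push
            # Rule 3: turbulence, a small random jitter per axis.
            for k in range(3):
                steer[k] += rng.uniform(-turbulence, turbulence)
            p.vel = steer
        # Apply all displacements after every particle has been evaluated.
        for p in particles:
            for k in range(3):
                p.pos[k] += p.vel[k]
        cache.append([tuple(p.pos) for p in particles])
    return cache
```

Calling `simulate_crowd([(0.0, 0.0, 0.0), (50.0, 10.0, 0.0), (100.0, 0.0, 0.0)], count=20, frames=120)` returns 120 cached frames of 20 positions each. Because the rules are this simple and the result is only a point cache, iterating on timing is cheap, which matches the fast turnaround described in the interview.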

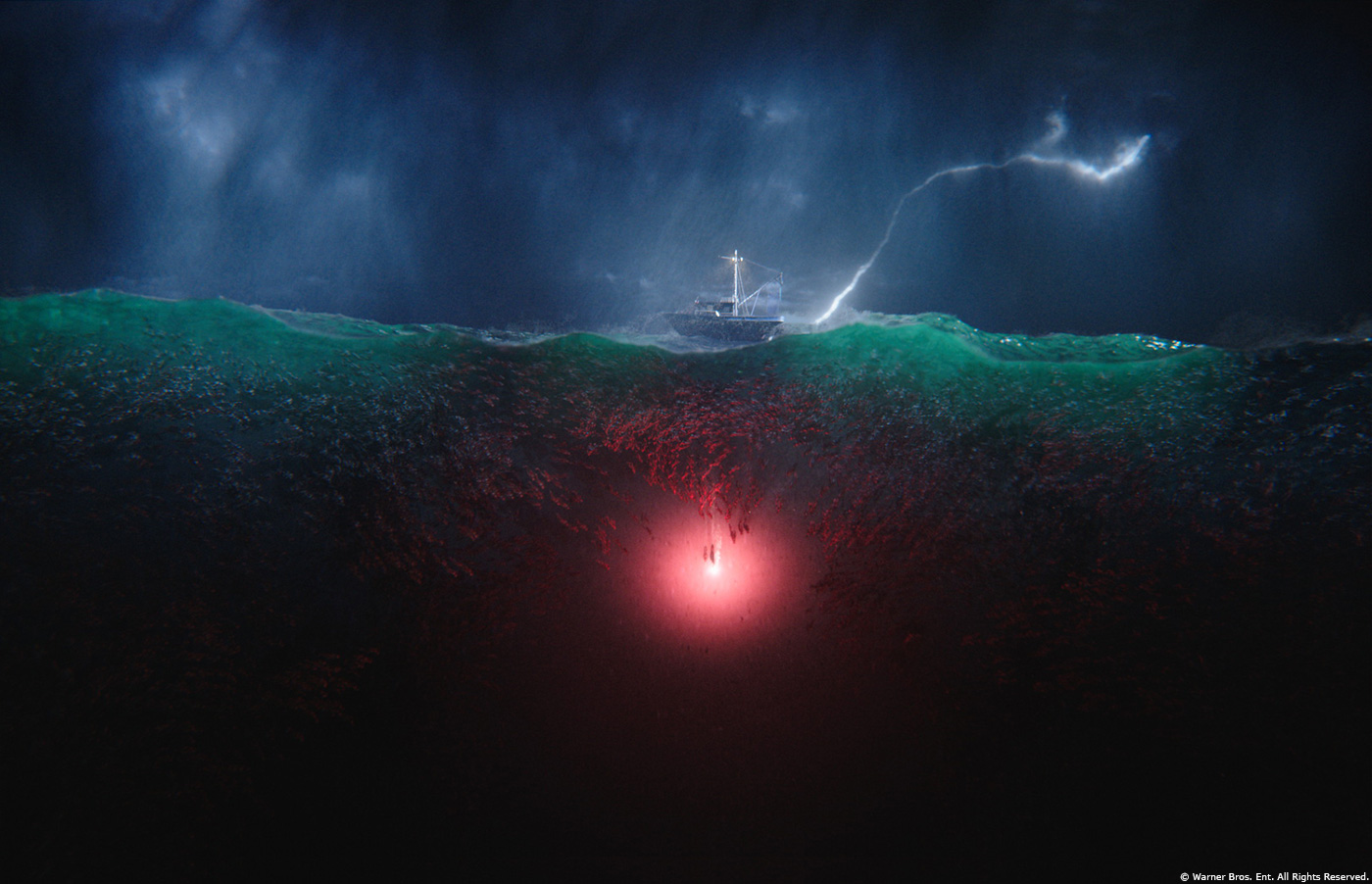

Then Arthur and Mera dive into the Trench. The first shot is absolutely beautiful. Can you explain in detail about it and how you created it?

This shot had a very specific vision from James: he wanted to create sort of an ant farm or cutaway shot. We had a pretty specific postviz to match, down to the beats of the lightning strikes. Using the technique mentioned above, we sculpted the crowd of 16,000 trench to match the timing and the movement of the postviz using particles art directed along paths and curves. We could simulate the crowd of 16,000 in less than 5 minutes in total, so the turnaround time to iterate versions was incredibly fast. We would preview all the trench with a proxy capsule shape to get a sense of the swarm. Surprisingly, given the visual complexity of the shot, we were able to visualize, adjust and get through lighting fairly smoothly and quickly.

With so many creatures and simulations, how did you prevent your render farm from burning?

Although our render farm consists of very powerful machines, we were soon aware that we needed to be highly efficient when it came to simulation data, amount of geometry and render times. A typical shot in the trench attack sequence had various heavy ingredients such as up to 60 high resolution creatures with muscle and fin simulations, large-scale ocean simulations where even the rain hits on the surface were 3-dimensional, as well as thousands of creatures in the water. All of these items had to be aware of and see each other during rendering, so we wanted to make sure each piece of the puzzle was using resources in the best way possible. We set the groundwork for this early on by keeping this efficiency in mind when building our assets, creating our shaders and setting up our simulation rigs. On the CFX side the team led by Kishore Singh was terrific in creating very stable and fast simulation setups for muscles and fins, which was essential in handling this large number of creatures. Their strategy of setup building and additional pipeline tools worked so well that a full shot with fin simulations for any number of creatures could be done by a single artist in less than a day. For the water simulations our Flowline team supervised by Michele Stocco did an excellent job in optimizing the data usage and simulation times on their end. And when bringing the pieces together our lighting team, under the watchful eye of CG Supervisor Chris Mulcaster, was just as stellar in using our custom procedural render pipeline to ensure our renders were fast and had minimal memory consumption.

During all this sequence, the lighting is really challenging. How did you handle this aspect?

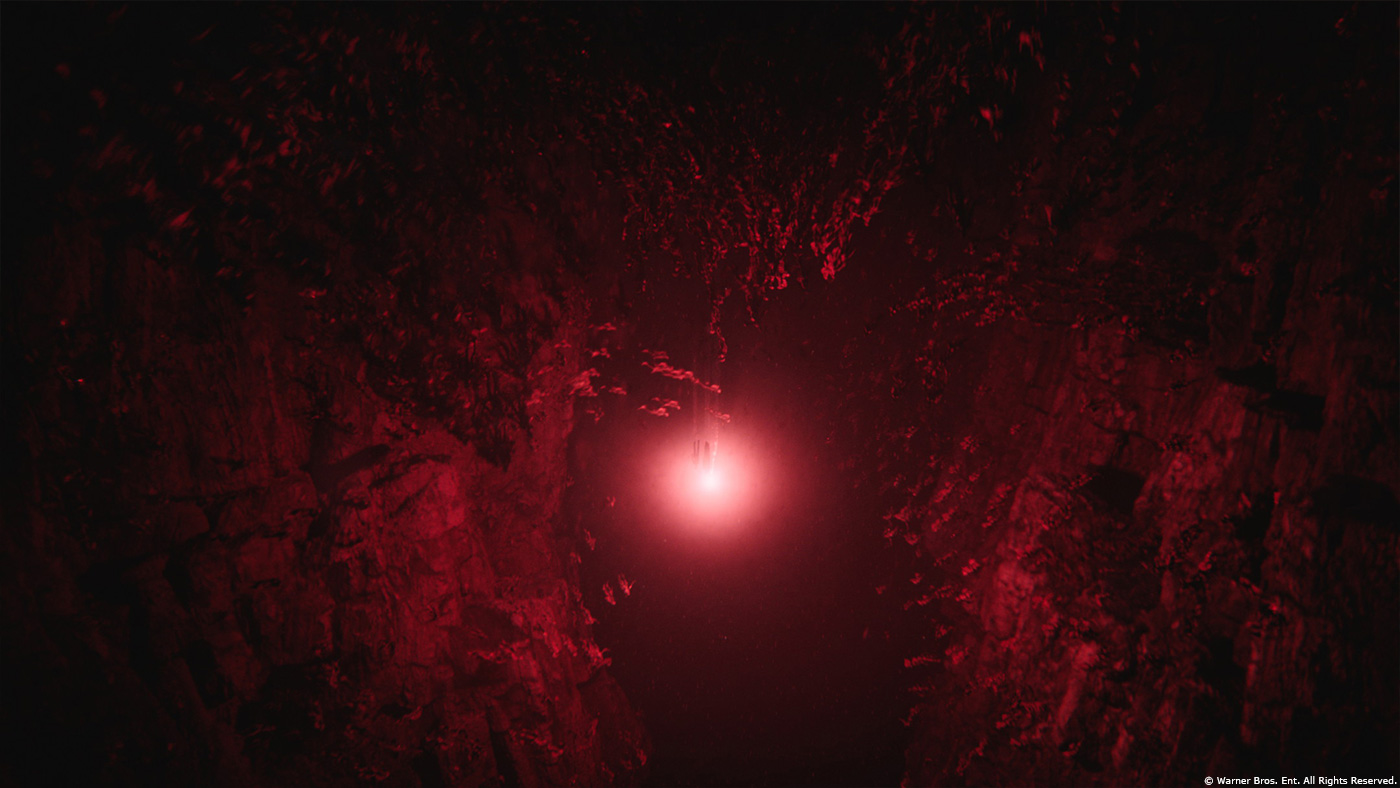

Once they’re underwater, the only real light source is the flare they’ve lit to keep the Trench away. On set an LED flare was held by Jason to provide interactive light onto him and Amber for the photographic components. In lighting the shots it was important for James that there was a really strong graphic image that told the story of the red flare providing a protective safety bubble for our heroes. Additionally we would artistically place other lights in the scene to help provide visual cues to the geography or to add dramatic shapes and interest to the frame.

What was the main challenge on the show and how did you achieve it?

I think the biggest challenge of the show was being able to remain flexible while at times juggling the enormous datasets required to realize some of these shots. To James’ credit he was always pushing to maximize the imagery.

Which sequence or shot was the most complicated to create and why?

Our most involved shot was where Atlanna defeats a group of Atlantean soldiers in a continuous 700 frame shot that occupied a team of artists for the better part of a year. Production shot for multiple days utilizing a spider cam to capture both the elaborately choreographed fight sequences and numerous SFX events. Once we ingested the hero takes we proceeded to add additional destruction and events digitally. In the end, the final composite had over 500 CG elements between FX and lighting. The majority of the original set was replaced with destructible geometry. Destructible geometry involved accurately modeled internals: drywall, plaster, wood frames, insulation, weatherboards, down to electrical wires connected to light fittings. There were over 50 unique effect tasks to destroy certain parts of the set, from the guards kicking through doors, windows and porches to holes throughout the rooms from Atlantis guards’ tracer fire. Each Atlantean guard was entirely replaced by a CG guard, taking care to simulate damage to their armor where appropriate, with Flowline simulations for water spraying out of cracked armor.

Is there something specific that gives you some really short nights?

No, not really. We all worked quite hard on this project for the duration but tried to keep some semblance of order with regards to schedule.

What is your favorite shot or sequence?

I’m proud of all the work we delivered but if I had to narrow it down to just one shot or sequence it would be the attack of the Trench.

What is your best memory on this show?

Being able to collaborate with so many talented artists who were all dedicated to achieving James’ vision.

How long have you worked on this show?

I was on the project for just over a year and a half.

What’s the VFX shot count?

I believe we delivered just over 450 shots that are in the final film.

What was the size of your team?

At our peak we had about 250 artists working on this film.

What is your next project?

I am currently working on GEMINI MAN.

A big thanks for your time.

WANT TO KNOW MORE?

Scanline VFX: Official website of Scanline VFX.

© Vincent Frei – The Art of VFX – 2019
