In 2012, Paul Butterworth explained to us the work of Fuel VFX on PROMETHEUS. He then worked on IRON MAN 3, THE HUNGER GAMES: CATCHING FIRE and X-MEN: DAYS OF FUTURE PAST. In 2014, Fuel VFX was acquired by Animal Logic. At Animal Logic, Paul has since worked on INSURGENT, AVENGERS: AGE OF ULTRON and CAPTAIN AMERICA: CIVIL WAR.

How did you and Animal Logic get involved on this show?

We had collaborated with Marvel’s VFX supervisor Chris Townsend on CAPTAIN AMERICA, IRON MAN 3 and AVENGERS: AGE OF ULTRON. So it was wonderful to be invited to work with him again on GUARDIANS OF THE GALAXY VOL. 2.

How was the collaboration with director James Gunn and VFX Supervisor Christopher Townsend?

All of our collaboration was with Chris, though we did say hello to James when we were at Marvel Studios in Los Angeles and showed him a test VR version of Ego’s Palace, which was very cool.

What were their approaches and expectations regarding the visual effects?

Primarily we were invited to solve how to represent Ego’s backstory. Chris and James were after a concept that involved a series of ‘living paintings’ inside Ego’s Palace.

Secondly, we were asked to design and build Ego’s Palace interior out of fractal mathematics as we had some experience working with fractals on AGE OF ULTRON.

The expectations are forever the same: ‘Show us something we haven’t seen before.’

What are the sequences made by Animal Logic?

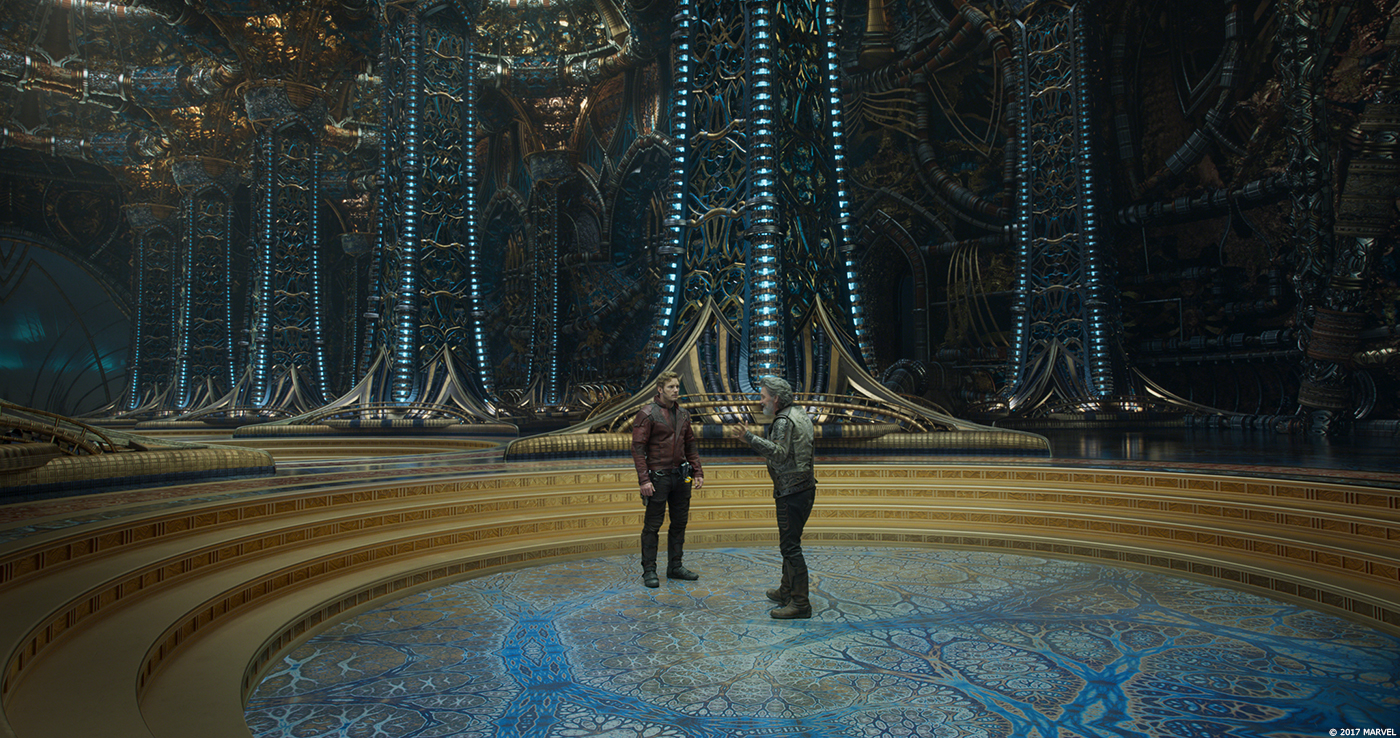

We looked after most of the Interior Palace scenes where Ego explains his origins and then later reveals his true malevolent purpose, and the Palace Garden scene where Quill learns to summon his ‘celestial energy’ and play ‘celestial catch’ with his father. They were really interesting scenes that had important plot points to convey – a responsibility we often get when we work with Chris.

How did you organise the work at Animal Logic?

With a project of this nature, we gathered a small, senior-heavy generalist team to work through each of the ‘unknowns’ on the show. The team had representatives from Concept, FX, Modelling, Lighting and Comp. At this stage, I like the process to be a little messy and unhindered by the pipeline, with the sole purpose of getting ideas in front of Chris and the director as soon as possible.

On this particular film we had to solve how to ‘grow’ alien environments, design Quill’s ‘Celestial Light’ and, as mentioned before, represent Ego’s backstory in a visually creative way. As these concepts coalesced into solutions, they were handed to the broader department teams, who further honed them and managed the turnaround of shots through the Animal Logic production pipeline.

The Ego Palace is really beautiful and a huge environment. How did you approach it?

Building the Palace and Garden sets for GUARDIANS OF THE GALAXY VOL. 2 presented a unique challenge for us. The creative brief involved two separate alien environments, made from complex mathematical shapes with an unprecedented amount of detail.

As an additional challenge, the palace environment had to closely follow concept art from Marvel’s production designer, who based its architectural forms on the intricate fractal work of Hal Tenny. To meet this challenge we used a novel approach to modelling fractal objects as point clouds, taking advantage of our pipeline’s ability to integrate FX objects with the environment set pieces, and leveraging instancing and high levels of geometric complexity in our in-house ‘Glimpse’ renderer.

Can you explain in detail about the design and the creation of this palace?

Early on, it was clear that the project’s creative design process would require a smarter alternative to a brute force modelling approach. Various techniques for modelling fractals as 3D objects were considered, such as exporting static geometry from external applications, building high-resolution volumes, pre-processing depth and shadow maps, or tracing the fractal function directly at render-time. We had used some of these techniques previously on AVENGERS: AGE OF ULTRON and were aware of the limitations.

During the design process, to quickly visualise a variety of potential fractal styles, Matt Ebb, our FX Lead on the show, developed a modular, interactive fractal visualiser within Houdini which translated fractal formulae to VEX code. Inspired by distance field rendering, the tool used sphere-tracing to efficiently intersect the fractal surface from the camera. Rather than rendering pixels, it created a dense point cloud as native Houdini geometry, with data such as normal, colour, occlusion and other fractal-specific attributes stored per point.
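To make the idea concrete, here is a minimal Python sketch of sphere-tracing a fractal distance estimator and emitting hit points instead of pixels. It is not the Houdini/VEX tool described here: the Mandelbulb estimator, the pinhole camera looking down -Z, the step limits and the attribute names (`P`, `N`, `occ`) are all our own assumptions, and the occlusion value is only a crude step-count proxy.

```python
import math

def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])

def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)

def length(a):
    return math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2])

def normalize(a):
    return scale(a, 1.0 / length(a))

def mandelbulb_de(p, power=8.0, iterations=12, bailout=2.0):
    """Standard Mandelbulb distance estimator, used here only as an example fractal."""
    z, dr, r = p, 1.0, 0.0
    for _ in range(iterations):
        r = length(z)
        if r > bailout or r == 0.0:
            break
        theta = math.acos(max(-1.0, min(1.0, z[2] / r))) * power
        phi = math.atan2(z[1], z[0]) * power
        dr = r ** (power - 1.0) * power * dr + 1.0
        zr = r ** power
        z = add(scale((math.sin(theta) * math.cos(phi),
                       math.sin(theta) * math.sin(phi),
                       math.cos(theta)), zr), p)
    return 0.5 * math.log(r) * r / dr if r > 0.0 else 0.0

def estimate_normal(de, p, eps=1e-4):
    """Central-difference gradient of the distance field, i.e. the surface normal."""
    grad = tuple(
        de(add(p, offset)) - de(add(p, scale(offset, -1.0)))
        for offset in ((eps, 0.0, 0.0), (0.0, eps, 0.0), (0.0, 0.0, eps))
    )
    return normalize(grad)

def sphere_trace(de, origin, direction, max_steps=128, hit_eps=1e-4, max_dist=10.0):
    """March along the ray by the estimated distance until we touch the surface."""
    t = 0.0
    for step in range(max_steps):
        p = add(origin, scale(direction, t))
        d = de(p)
        if d < hit_eps:
            return p, step
        t += d
        if t > max_dist:
            break
    return None

def fractal_point_cloud(de, cam_pos, width, height, fov_deg=45.0, max_steps=128):
    """One ray per 'pixel' of a pinhole camera looking down -Z; keep only the hit points."""
    half = math.tan(math.radians(fov_deg) / 2.0)
    aspect = width / height
    points = []
    for j in range(height):
        for i in range(width):
            x = (2.0 * (i + 0.5) / width - 1.0) * half * aspect
            y = (1.0 - 2.0 * (j + 0.5) / height) * half
            hit = sphere_trace(de, cam_pos, normalize((x, y, -1.0)), max_steps)
            if hit is None:
                continue
            p, steps = hit
            points.append({
                "P": p,
                "N": estimate_normal(de, p),
                "occ": 1.0 - steps / max_steps,  # crude proxy: more steps suggests a crevice
            })
    return points
```

A quick sanity check can use a plain sphere in place of the fractal, e.g. `fractal_point_cloud(lambda p: length(p) - 1.0, (0.0, 0.0, 3.0), 8, 8)`. Swapping in `mandelbulb_de` works the same way (slowly, in pure Python). Cloud density is set only by the ray grid, so moving the camera deeper into the structure needs no extra modelling.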

This enabled near real-time ‘scouting’ through fractal structures in the Houdini viewport, and made it very easy to explore freely deep inside the fractal space at various scales, opening up a vast range of design possibilities that previous approaches had not readily offered. It soon became apparent that this technique could be used not just for visualisation, but also for generating final models.

In Modelling, Jonathan Ravagnani led the team in roughing out the basic architecture, making low-resolution bounding objects for ‘fractalisation’, while also shaping high-resolution polygon framework geometry to complement the fractal objects. Fractal point clouds were then generated to fit inside the bounding geometry and follow its forms, and were delivered as renderable Glimpse render archives. Most final objects ranged from 10 to 100 million points, but because only externally visible surfaces were evaluated, we could resolve a very high level of detail on a modest render-time and memory budget.
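The exact fitting method isn't described. One plausible way to make a distance-estimated fractal ‘fit inside’ a low-resolution bounding object, assuming both are available as signed distance functions, is a CSG intersection: take the larger of the two distances. The Python sketch below uses a repeating sphere lattice as a cheap, hypothetical stand-in for a fractal and an axis-aligned box as the hypothetical bounding object.

```python
import math

def box_sdf(half_extents):
    """Signed distance to an axis-aligned box centred at the origin."""
    def d(p):
        q = [abs(c) - h for c, h in zip(p, half_extents)]
        outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
        inside = min(max(q[0], max(q[1], q[2])), 0.0)
        return outside + inside
    return d

def sphere_lattice_sdf(spacing, radius):
    """Infinite grid of spheres via domain repetition (stand-in for a fractal DE)."""
    def d(p):
        q = [((c + 0.5 * spacing) % spacing) - 0.5 * spacing for c in p]
        return math.sqrt(sum(c * c for c in q)) - radius
    return d

def intersect(a, b):
    """CSG intersection: a point is inside only if it is inside both fields.
    max() never overestimates the distance to the result, so it stays safe to sphere-trace."""
    return lambda p: max(a(p), b(p))
```

Usage: `bounded = intersect(sphere_lattice_sdf(1.0, 0.3), box_sdf((2.0, 2.0, 2.0)))` gives detail only within the box, and can be handed directly to a sphere tracer so that only the visible surface of the bounded shape is ever sampled.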

The final generated point clouds could still be manipulated with a high degree of creative control using any of Houdini’s standard tools, allowing them to be carved, warped, duplicated and rearranged after the fact. Rather than being converted to polygon geometry, the fractal point clouds were rendered directly in Glimpse as millions of efficient, tightly packed sphere primitives, with the stored fractal normal overriding each sphere’s shading normal to give the impression of a solid surface. This was surprisingly effective, even for smooth metallic surfaces, and led to much faster turnarounds than converting to and cleaning up polygon geometry.
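A small Python sketch of the normal-override idea, under our own assumptions: each point becomes a tiny sphere, a ray is intersected with it, and Lambert shading uses either the sphere’s geometric normal or the fractal normal stored on the point. The point layout (`P`, `radius`, `N`) and the Lambert model are illustrative only; how Glimpse implements this is not described.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)

def ray_sphere(origin, direction, center, radius):
    """Nearest positive hit distance of a ray (unit direction) with a sphere, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(direction, oc)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 0.0:
            return t
    return None

def shade(origin, direction, point, light_dir, override_normal=True):
    """Lambert shading of one point-sphere; light_dir is a unit vector towards the light."""
    t = ray_sphere(origin, direction, point["P"], point["radius"])
    if t is None:
        return None
    if override_normal:
        n = point["N"]  # stored fractal normal: the whole sphere shades like a flat patch
    else:
        hit = tuple(o + t * d for o, d in zip(origin, direction))
        n = normalize(tuple(h - c for h, c in zip(hit, point["P"])))  # bumpy 'bead' look
    return max(0.0, dot(n, light_dir))
```

With the override, every ray that hits a given sphere returns the same value, so densely packed spheres read as one continuous surface; without it, each sphere shades as a small bead.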

The surfacing team used the fractal data stored in the point clouds, along with other attributes such as density, occlusion and curvature, to drive shader parameters in Glimpse’s custom ‘Ash’ node-based shading system. Instancing of the fractal point cloud objects in Glimpse was used heavily to contain memory usage: one of the final sets included 19 fractal set pieces totalling 646 million points, which would have been 3.35 billion without instancing.
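The instancing figures come down to simple bookkeeping: memory scales with the unique points stored once per prototype, while the renderer effectively sees every placement. The Python sketch below uses hypothetical prototype names, point counts and a hypothetical `bytes_per_point`, and ignores the small cost of per-instance transforms.

```python
from collections import Counter

def point_budget(prototypes, instances, bytes_per_point=32):
    """prototypes: name -> point count; instances: one prototype name per placement.
    bytes_per_point is a hypothetical figure (position, normal and a few float attributes)."""
    uses = Counter(instances)
    unique = sum(prototypes[name] for name in uses)
    expanded = sum(prototypes[name] * n for name, n in uses.items())
    return {
        "unique_points": unique,
        "expanded_points": expanded,
        "instanced_bytes": unique * bytes_per_point,
        "flattened_bytes": expanded * bytes_per_point,
        "reuse_factor": expanded / unique,
    }
```

Applied to the set quoted above, 3.35 billion / 646 million means each stored point was drawn about 5.2 times on average.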

Can you tell us more about the lighting challenges, especially for the garden sequence?

There were several lighting challenges for us on the show.

Firstly, the base lighting of Ego’s ‘virtual’ palace set extension had to match the actual lighting of the practical set piece. The filmed set piece was just the central dais, with a partial base column in one corner and a bench for the actors to sit on. Chris’s on-set team had collected plenty of HDRI spheres and colour chart reference, so setting up this base lighting was straightforward.
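HDRI spheres like these are usually unwrapped into an equirectangular (lat-long) map, and the renderer looks up incoming light by direction. The mapping below is a minimal Python sketch assuming a Y-up, -Z-forward convention with the forward direction at the centre of the map; real packages differ in orientation, so treat the axes as an assumption.

```python
import math

def direction_to_latlong(d, width, height):
    """Unit direction -> (x, y) pixel position in an equirectangular map."""
    x, y, z = d
    u = 0.5 + math.atan2(x, -z) / (2.0 * math.pi)  # longitude; 0.5 = looking down -Z
    v = 0.5 - math.asin(max(-1.0, min(1.0, y))) / math.pi  # latitude; 0 = straight up
    return u * width, v * height

def latlong_to_direction(px, py, width, height):
    """Inverse mapping: pixel position -> unit direction."""
    phi = (px / width - 0.5) * 2.0 * math.pi
    elevation = (0.5 - py / height) * math.pi
    return (math.cos(elevation) * math.sin(phi),
            math.sin(elevation),
            -math.cos(elevation) * math.cos(phi))

def sample_nearest(image, d):
    """Nearest-pixel radiance lookup; image is a list of rows of (r, g, b) values."""
    height, width = len(image), len(image[0])
    px, py = direction_to_latlong(d, width, height)
    return image[min(int(py), height - 1)][int(px) % width]
```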

Next, Ian Dodman and his lighting team designed beautiful lighting groups that highlighted the scale and grandeur of the Palace interior. These included lights that raked the delicate filigree and detail on the walls and ceiling, internal lighting on the fractal columns, soft downlighting that illuminated the windows and alcoves, dappled light shafts that highlighted detail, and feature lighting for each of the sculptures. These light groups were then rendered separately with a multitude of passes, giving the compositing team a huge amount of control to set different moods for each sequence. For example, when we first enter the Palace the lighting design is late afternoon, warm, inviting and friendly, whereas later on, when Ego reveals his true nature, the lighting is intended to be much darker and more sinister.
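Because light is additive, separately rendered light groups sum back to the full beauty render, and scaling or tinting each group in comp changes the mood without re-rendering. A minimal Python sketch of that recombination follows; the group names and gain values are hypothetical, and images are plain nested lists rather than any compositing package’s format.

```python
def combine_light_groups(passes, gains):
    """passes: light group name -> image (rows of (r, g, b) tuples), all the same size.
    gains: light group name -> (r, g, b) multiplier; groups without a gain stay at 1.0."""
    names = list(passes)
    h, w = len(passes[names[0]]), len(passes[names[0]][0])
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for name in names:
        gain = gains.get(name, (1.0, 1.0, 1.0))
        img = passes[name]
        for y in range(h):
            for x in range(w):
                for c in range(3):
                    out[y][x][c] += img[y][x][c] * gain[c]  # light adds linearly
    return [[tuple(px) for px in row] for row in out]
```

A warm ‘late afternoon’ look might boost the red channel of a hypothetical `"shafts"` group, while a darker ‘reveal’ look might pull it to zero and keep only a dim `"raking"` group, all from the same renders.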

Along with lighting the set, the compositing and rotoscoping teams had a lot of work extracting the actors from the original filmed set and relighting them when they walked past virtual practical light elements or interacted with various ‘energy elements’ like the ‘Celestial Light ball.’

In the garden, the big challenge for compositing, apart from extracting the actors from the photography, was balancing their lighting between plates shot in different sessions months apart.

Along with extensive balancing of the actors, Quill was cleverly given interactive lighting for his ‘Celestial Light’ moment. This was achieved through a combination of comp grading trickery and replacement of his hands with CG hands.

With Ego’s help, Quill can wield a blue energy. Can you explain in detail how you created this FX?

Developing the look of Quill’s ‘celestial light’ proved challenging as everyone had a different impression of how we could represent this energy.

Chris’s brief was that the energy needed to evoke an electrical quality but be mouldable like bread dough. It needed to feel real and photographic, be playful like a bubble and yet reveal that it could be potentially dangerous. We spent a lot of time exploring the ‘celestial light’ in the concept department as a series of style frames and motion tests, again using fractals to help define its exotic look. To integrate the ‘celestial energy’ with Quill’s palms, we needed to build them in 3D and rotomate Quill’s performance accurately. In the end, we decided to replace his hands entirely with CG hands, giving us more control.

Various fluid simulations were designed to create plasma arcs, vapour trails and subdermal lighting of tissue. These FX sims were then combined as renderable caches with Quill’s CG hands, which were modelled, rigged and animated in Maya. Our shading team used ‘Ash’ to handle the texture work, and everything was rendered through ‘Glimpse’. Along with combining all these passes, comp applied the usual tricks of relighting the actors and adding lens aberration and glows to the CG to match the original photography.

How did you create the interactive light?

Some interactive light was in camera. Most of the relighting of the actors was through carefully tracked and rotoscoped mattes and regrading of the actors in compositing to appear as if they were lit by the ‘celestial energy.’
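A minimal Python sketch of that kind of matte-driven regrade, assuming a tracked screen position for the energy source, a rotoscoped actor matte in the 0 to 1 range, and a simple multiply-style grade that uses the plate colour as a stand-in for the actor’s albedo. The falloff shape and parameter names are our own choices, not the studio’s recipe.

```python
import math

def falloff_matte(px, py, light_xy, radius):
    """Soft radial falloff around the tracked screen position of the energy source."""
    d = math.hypot(px - light_xy[0], py - light_xy[1])
    return max(0.0, 1.0 - d / radius) ** 2

def relight(plate, roto, light_xy, radius, light_rgb, intensity):
    """plate: rows of (r, g, b); roto: rows of 0..1 actor matte values.
    Each graded pixel becomes plate * (1 + matte * light colour)."""
    out = []
    for y, row in enumerate(plate):
        new_row = []
        for x, (r, g, b) in enumerate(row):
            m = roto[y][x] * falloff_matte(x, y, light_xy, radius) * intensity
            new_row.append((r * (1.0 + m * light_rgb[0]),
                            g * (1.0 + m * light_rgb[1]),
                            b * (1.0 + m * light_rgb[2])))
        out.append(new_row)
    return out
```

Pixels outside the roto matte are untouched, so the glow only lands on the actor and falls off with distance from the tracked source.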

Which references and indications did you receive to create the dioramas?

Early on in the pre-production phase of the film, we were asked by Chris to design and develop a series of ‘moving paintings’ that depicted Ego’s backstory. Chris was keen on the idea of pictures that form through falling sand, and he had some excellent reference that he shared with us in this regard. James loved the idea that Ego’s backstory was displayed in the manner of the ‘Stations of the Cross’ which is a classical representation of Jesus’s crucifixion.

In Ego’s case the paintings would be several triptychs that depicted his backstory from a lonely intelligence adrift in the cosmos to ‘galactic conqueror.’

One of the other key factors to consider was that Ego could manipulate and grow anything with his ‘celestial energy’. So the images needed to be beautiful Renaissance paintings that could evolve and change like a picture sequence.

Can you explain the diorama creation in detail?

We started the diorama process by exploring how to transform the live action that James was shooting into shifting sand. These early tests were achieved by tracking the supplied live-action plates and then exporting the motion vectors to Houdini. Using these vectors, we were able to generate particles with the live-action colours attached per pixel. These tests gave some promising impressions of falling sand. But while they were impressive, James and Chris were looking for a more hand-drawn, bespoke feel to the paintings.
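The plate-to-sand idea can be sketched in a few lines of Python: seed one particle per pixel carrying that pixel’s colour, then advect the particles with the plate’s per-pixel motion vectors, optionally adding a downward pull. This is an illustration under our own assumptions (screen-space vectors in pixels per frame, image y pointing down, nearest-pixel vector lookup, simple Euler steps), not the Houdini setup the team used.

```python
def spawn_particles(frame, stride=1):
    """One particle per sampled pixel, carrying that pixel's colour. frame: rows of (r, g, b)."""
    return [{"pos": [float(x), float(y)], "vel": [0.0, 0.0], "rgb": frame[y][x]}
            for y in range(0, len(frame), stride)
            for x in range(0, len(frame[0]), stride)]

def step(particles, vectors, gravity=0.0, dt=1.0):
    """Advect particles by per-pixel motion vectors (rows of (dx, dy)), plus optional gravity."""
    h, w = len(vectors), len(vectors[0])
    for p in particles:
        xi = min(max(int(round(p["pos"][0])), 0), w - 1)
        yi = min(max(int(round(p["pos"][1])), 0), h - 1)
        mvx, mvy = vectors[yi][xi]
        p["vel"][1] += gravity * dt  # positive y is down in image space
        p["pos"][0] += (mvx + p["vel"][0]) * dt
        p["pos"][1] += (mvy + p["vel"][1]) * dt
    return particles
```

Each particle keeps the colour it was born with, so the image appears to follow the actors’ motion while gradually pouring away.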

So Anna Fraser and Sam Ho in the concept team explored many ways of depicting a hand-drawn triptych, from simple pencil sketches to airbrushing to mimicking paint media such as gouache and oil paint, while thoroughly examining the science-fiction artwork of the ’70s, especially that of Chris Foss, Vincent Di Fate and Roger Dean. We even hand-painted over a series of frames from the original film to assess how painful the process of converting the live action into a painting would be. It became evident that any approach we chose needed to be easily split between departments, rather than resting on one artist. We needed a process that could turn around each painting relatively quickly, as the editorial team would be cutting the live action up to the last moment. Once a painted style was approved, we would split the task of converting the live action into a moving painting across departments. The rotoscoping team isolated shapes in the live action. The art department animated hand-painted brush strokes that comp could apply as part of an oil paint recipe developed in Nuke by Max Stummer. But in the end, after extensive development, it was decided this wasn’t the right creative direction for the scene. Marvel felt the moments of Ego’s story depicted in the paintings would have more impact as 3D animation.

So it was back to the drawing board, and we threw every talented artist on the team at finding the new look. It’s fun to see how creative artists from different departments approach the original problem, and within a week or so we presented a bunch of possible new directions to Chris and the team. Was it made out of dust? Out of light? Do they emanate from the palace columns? Are they made of liquid, gold, or fractals like the palace?

In the end, the concept that gave us the most creative options was built around stylised sculptural forms for the diorama content, framed by an elegantly designed eggshell that mimicked the design of Ego’s ship interior. This latter element was suggested by Chris for us to explore, so we replaced the picture frame idea with the ‘eggshell’, which had layered blades that could be used as wipes to transition between diorama elements. To give the sculptures a realistic finish and scale, we experimented with a variety of shaders, from hand-painted stone to brushed-on gold leaf, eventually settling on pearlescent glazes that James likened to Hummel figurines (in a positive way!)

Once we had this new direction, the process became pretty straightforward, and the challenge now was getting it completed in time, as we only had five weeks remaining by that stage. We pre-visualised the animation, placement and size of each diorama to capture the particular ‘story beat’ and to aid editorial in locking the scene.

For Meredith and Ego’s diorama, we rotomated the original live action and applied this performance to their stylised sculptural forms. Each diorama also had a hand-painted motif designed by Prema Weir that was ‘carved’ onto the inside surface of the egg to support the foreground moment.

The dioramas and palace sets were then rendered at 3k and downscaled to 2k, as we found the Red Weapon camera resolved so much detail that a standard 2k render looked soft in comparison to the live action. The renders were then combined with the live action in compositing with all the usual trickery, from reflections of the cast on reflective surfaces to light wrapping of the CG set back onto the actors. In most shots, we completely rotoscoped the cast off the original background and used CG hair wigs to supplement their hair edge detail.
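The filter used for the 3k-to-2k step isn’t stated. One common choice is area averaging, which handles the non-integer ratio (roughly 1.5:1) by weighting each source pixel by how much of it falls inside the output pixel. A minimal single-channel Python sketch, applied separably to rows and then columns:

```python
def area_resample_1d(samples, out_len):
    """Box-filter (area-average) resample; partially covered inputs are weighted by overlap."""
    in_len = len(samples)
    scale = in_len / out_len
    out = []
    for i in range(out_len):
        start, end = i * scale, (i + 1) * scale
        acc = 0.0
        j = int(start)
        while j < end and j < in_len:
            overlap = min(end, j + 1) - max(start, j)
            acc += samples[j] * overlap
            j += 1
        out.append(acc / scale)
    return out

def downscale(image, out_w, out_h):
    """Separable area downscale of a single-channel image (rows of floats)."""
    rows = [area_resample_1d(row, out_w) for row in image]
    cols = [area_resample_1d([rows[y][x] for y in range(len(rows))], out_h)
            for x in range(out_w)]
    return [[cols[x][y] for x in range(out_w)] for y in range(out_h)]
```

At 1.5:1, each output pixel averages about 1.5 x 1.5 = 2.25 rendered pixels, so fine detail is resolved in the render and then filtered down rather than being under-sampled at 2k.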

How did you find its final look?

It took thorough exploration in the concept department, plenty of motion tests and presentations with Chris and the studio to hone the eventual look. It was quite a creative journey.

Was there a shot or a sequence that prevented you from sleeping?

Not on this one. I slept like a rock.

What is your best memory on this show?

I really enjoyed this production. The work was challenging both creatively and technically, and it looks fantastic in the end.

But the standout memories were from the camaraderie we had amongst our internal crew, as well as with Chris and his team on the Marvel side. When you are workshopping how to depict the backstory of a giant ‘space-brain’ who grows his own planet and has impregnated thousands of alien women, you find yourself having some pretty trippy conversations at times.

What is your shot count?

Around 150.

How long have you worked on this show?

For me, it was around 15 months.

What was the size of your team?

70 ish.

What is your next project?

There’s always plenty of research, development and opportunity at Animal Logic. But for the first time in several years, I’m not jumping straight into the next project, which is great for a change. It affords me a little time to catch up on all the exciting developments in broader areas of personal interest, from Artificial Intelligence and Augmented Reality to illustration and creative film-making. But a lot of my colleagues are very busy on THE LEGO NINJAGO MOVIE and PETER RABBIT at the moment.

A big thanks for your time.

// WANT TO KNOW MORE?

Animal Logic: Official website of Animal Logic.

© Vincent Frei – The Art of VFX – 2017