In 2014, Laurent Taillefer explained the work of Mokko Studio on BEAUTY AND THE BEAST. He then worked at Atomic Fiction (now Method Studios) and Mr. X before joining Scanline VFX in 2018. He has worked on many projects, including DEADPOOL, STRANGER THINGS, PACIFIC RIM: UPRISING and AD ASTRA.

How did you and Scanline VFX get involved in this show?

Scanline was involved early on with previz, roughly 6 months before the shoot. We were then part of the on-set supervision and support, collaborating with the nCam team to provide real-time visualization. I joined Scanline in October 2018 and my first duties were to assist our on-set supervisor Walter Schultz. By the time the show wrapped I was asked to take on the global visual effects supervision.

How was the collaboration with director Roland Emmerich and Overall VFX Supervisor Peter G. Travers?

Pete only joined the show after the shoot wrapped, so our first collaborations were directly with Roland. As MIDWAY is the fourth film Scanline and Roland have worked on together, our owner Stephan Trojansky has built a strong relationship with Roland. The trust built over time between Scanline and Roland meant that things ran very smoothly. Roland is very clear about his ideas, which leaves little room to second-guess or misinterpret what he is looking for and makes the workflow very efficient.

What were their expectations and approach to the visual effects?

As we were involved in both the previz and the real-time viz, a lot of the visual storytelling, framing and composition was established early on. This approach let us settle layout and animation questions very early, leaving plenty of time to develop the look, simulations and visual quality. From there, the focus was on achieving photorealism.

How did you organize the work with your VFX Producer?

Our VFX Producer, Katharina Kessler, had been working on the project from the start, so she was able to accelerate my onboarding, both on the show and at Scanline. She had established the communication channels with the client-side team, allowing me to focus on the creative and technical challenges. As she was working from our Vancouver studio and I was in Montreal, we were able to balance our days: I started early, focusing on delivering notes and versions, while she handled the later part of the day with all client-side duties such as deliveries.

How did you split the work amongst the Scanline VFX offices?

The show started while the Montreal office was still in its first months, so at the beginning the bulk of the team was split between the Vancouver and LA offices. As the project evolved and the Montreal office grew, every department grew in Montreal as well, allowing for a balanced split. Supervision and leadership were split across all three studios, with one CG Supervisor in each West Coast studio and myself in Montreal. For instance, our animation lead was in Vancouver, layout in Montreal, and simulation in LA. Each of the West Coast studios had a Compositing Supervisor, while I tended to the Montreal team in the morning hours. Scanline has developed a cross-site communication toolset called “Eyeline” that makes split supervision and team communication seamless, so the split added very little overhead.

Scanline VFX was co-producer on the show. What are the advantages of this position?

Though this did not change the day-to-day workflow, which was very similar to that of any other similarly sized movie, it did allow us to take part in large-scale strategic decisions. From a creative standpoint, the director remained the only client, so the workflow was quite normal.

Which sequences did Scanline VFX work on?

We focused mainly on four sequences: the Pearl Harbor attack, the Doolittle raid, the Carrier Hunt (torpedo bombers attempting to engage the Japanese fleet) and the Last Mission, with Richard Best bombing the last standing carrier.

How did you approach the massive attack on Pearl Harbor?

The early previz and the edit of the nCam real-time viz were very helpful in narrowing down the builds, so we could focus only on what would be on camera. This was instrumental in distributing the work efficiently. Although our layout and animation teams were dealing with a large number of shots, the direction was very clear from early on.

The key to the success of this sequence was our new “multi-shot” setup, mainly developed by our FX Supervisor Lukas Lepicovsky, which allowed for batch simulations and renders from templates. With this, all shots featuring similar effects were run automatically, which drastically improved efficiency.
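Scanline's multi-shot setup is proprietary and its internals are not described here, but the general idea of one template per effect type, expanded across every shot that uses it, can be sketched in Python. Everything below (`Shot`, `Job`, `build_jobs`, the template names and the per-shot `overrides`) is a hypothetical illustration, not Scanline's tool.

```python
from dataclasses import dataclass, field


@dataclass
class Shot:
    name: str
    effects: set[str]              # effect types present in the shot (hypothetical tags)
    frame_range: tuple[int, int]


@dataclass
class Job:
    shot: str
    template: str                  # shared simulation/render template for this effect
    frame_range: tuple[int, int]
    overrides: dict = field(default_factory=dict)  # per-shot tweaks on top of the template


def build_jobs(shots, templates, overrides=None):
    """Expand every (shot, effect) pair into a batch job that reuses the shared template.

    Effects without a template are skipped: they would still need a hand-built setup.
    """
    overrides = overrides or {}
    jobs = []
    for shot in shots:
        for effect in sorted(shot.effects):   # sorted for a deterministic job order
            if effect not in templates:
                continue
            jobs.append(Job(shot.name, templates[effect], shot.frame_range,
                            dict(overrides.get((shot.name, effect), {}))))
    return jobs
```

The point of the design is that fixing a template once propagates the fix to every shot that uses it, while the small `overrides` dictionary keeps shot-specific adjustments out of the shared setup.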

Another key to delivering this sequence was our layout pipeline, which let Ashley Blyth’s team place practical elements interactively in the scenes and publish them to compositing and effects. This allowed us to get early sign-off on effects-heavy elements in layout, skipping many approval cycles in the more time-consuming departments.

Can you elaborate on the previz work?

The team, led by Peter Godden, began working in the summer prior to the shoot. We started by exploring various ways to speed up the output (ranging from custom pipeline alterations to implementing Unreal). We finally settled on a “light” version of our current layout pipeline. This proved to be judicious, as it allowed us to repurpose a lot of the previz into the actual movie, using the studio assets at a simplified resolution and thus avoiding throwaway work.

Can you explain in detail the creation of the various planes and ships?

The asset build was split between the production company, Pixomondo and ourselves. The production company provided some of the assets, as they were able to start early and had access to the movie’s production design for historically accurate blueprints and references. Our work involved converting the ships to work in our pipeline, carrying out minor alterations to integrate the builds for set extensions, and developing textures. This collaboration sped up our work significantly.

The asset split between Pixomondo and ourselves was based on shot relevance, with each studio accountable for the assets most prominent in its shots and handing them over to the other vendor once complete. For instance, we built the Hiryu carrier, which is mostly seen in our Last Mission sequence, while Pixomondo built the Akagi. Both carriers were exchanged and are featured in shots from both vendors. As Pixomondo and Scanline use different renderers, we had to design a streamlined ingestion process. Our texture lead Marie-Pier Avoine and CG Supervisor Kazuki Takahashi took on this challenge, and by the end of the project each new asset coming in was translated almost automatically into our pipeline. In all cases, production was very supportive in delivering references, blueprints and any information that could help us achieve historical accuracy in our builds.

Can you tell us more about the shader and texture work?

We work mainly in V-Ray, leaning strongly on the physically based rendering (PBR) paradigm. While we rely on Mari quite heavily, we used MIDWAY to incorporate Substance into our workflow, leveraging Smart Materials to get first passes and underlying layers for the large number of high-resolution metal surfaces.

How did you recreate Pearl Harbor and its vast environment?

As we had established the scope of what would be visible early on, we were able to drive our build through “shot cameras”, a string-out of every possible angle. This allowed our CG Supervisor Matthew Novak and Environment Supervisor Damien Thaller to split the work efficiently between actual 3D builds and projected matte paintings. With a clear scope, the teams were able to focus only on what was needed, which narrowed down the amount of work and made it manageable for a small dedicated team. Our modeling lead Kevin Mains oversaw the creation of each individual asset.

Where was the Pearl Harbor sequence filmed?

All the battleship shots were filmed at MELS in Montreal, while the Intel Office and aftermath sequences were actually shot in Pearl Harbor.

How did you handle the lighting challenges?

The biggest challenge was turning the studio lighting into daylight on the Arizona sequence (Pearl Harbor). While the on-set lighting was done wisely to avoid major issues such as double shadowing, it is often hard to re-create sunlight on a sound stage. The Compositing Supervisor for the Pearl Harbor sequence, Michael Porterfield, used all his tricks to achieve this result. Another challenge was the integration of the on-set interactive fire lights. Animated light blockers and powerful LED screens were used to add the contribution of the flames, smoke and explosions surrounding the action (Scanline provided the media for the LED panels). Though these provided useful effects, they also made the footage harder to integrate, as they locked us into very specific patterns, which we sometimes had to suppress for the sake of editing and storytelling.

Another challenge was the sheer number of shots to manage. To address this, we developed a tool called “lightspin”, which rendered keyframes of each shot with a rotating light rig. This allowed supervisors to pick the best lighting direction with little human intervention, freeing up manpower to focus on detail work and hero shots.
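The internals of “lightspin” are not described in the interview. As a rough illustration of the idea of a rotating light rig, the Python sketch below assumes a y-up scene and sweeps a key light through a full circle of azimuths at a fixed elevation, emitting one hypothetical render job per keyframe and light direction; `lightspin_directions`, `render_jobs`, the step count and the 35° elevation are all assumptions for illustration.

```python
import math


def lightspin_directions(steps, elevation_deg):
    """Unit direction vectors (x, y, z), y up, for a key light swept 360 degrees in azimuth."""
    elev = math.radians(elevation_deg)
    dirs = []
    for i in range(steps):
        az = 2.0 * math.pi * i / steps          # evenly spaced azimuths around the up axis
        dirs.append((math.cos(elev) * math.sin(az),
                     math.sin(elev),
                     math.cos(elev) * math.cos(az)))
    return dirs


def render_jobs(shot, keyframes, steps=8, elevation_deg=35.0):
    """One hypothetical render job per (keyframe, light direction) pair."""
    directions = lightspin_directions(steps, elevation_deg)
    return [{"shot": shot, "frame": frame, "light_index": i, "light_dir": d}
            for frame in keyframes
            for i, d in enumerate(directions)]
```

A supervisor would then only compare a contact sheet of these few frames per shot rather than lighting each shot by hand, which is where the time saving described above comes from.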

Can you tell us more about the creation of the POV shots?

Though these shots differ from plate-based shots in that they are completely CG, they were treated the same way as the rest, resulting in a consistent look. For flight shots, we always started from historically accurate values for speed, altitude, time of day and orientation, and only altered them when the storytelling demanded it. As these shots weren’t constrained by plates, layout and animation contributed more significantly, which allowed us to explore creative compositions and collaborate more closely with the client. Overall this exchange went really well, with many of our proposals making it into the edit. The work was definitely collaborative.

The sequence is full of explosions and destruction. Did you use procedural tools?

Yes. Our practical element exporter from layout to effects and our multi-shot setups allowed for quick iterations that could be re-run with adjusted variables. Plane fires and explosions were based on template setups that could be propagated into the shots automatically, then simulated on their own. We also developed a lot of automation, led by Joe Scarr, for the tracer fire, impacts and water hits. All assets were rigged with gun controls, which the system would detect in order to run bullet simulations without the need for individual scene setup.
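How Scanline’s system reads its gun controls is not described. As one plausible piece of such automation, the Python sketch below assumes each rigged gun exposes a rate of fire, a trigger window and a tracer ratio, and derives the frames at which tracer rounds would be spawned. `GunControl`, `tracer_frames` and all of their fields are hypothetical, and real rates of fire would come from the historical data mentioned elsewhere in the interview.

```python
from dataclasses import dataclass


@dataclass
class GunControl:
    name: str
    rounds_per_minute: float   # hypothetical rate-of-fire attribute on the rig
    tracer_every: int          # one tracer round every N rounds
    trigger_on: int            # first frame the trigger is pulled
    trigger_off: int           # frame the trigger is released (exclusive)


def tracer_frames(gun, fps=24.0):
    """Frame times (floats, possibly sub-frame) at which tracer rounds leave the muzzle."""
    if gun.rounds_per_minute <= 0 or gun.tracer_every < 1:
        raise ValueError("rate of fire and tracer ratio must be positive")
    interval = 60.0 * fps / gun.rounds_per_minute   # frames between consecutive rounds
    times = []
    n = 0
    t = float(gun.trigger_on)
    while t < gun.trigger_off:
        if n % gun.tracer_every == 0:               # only every Nth round is a tracer
            times.append(t)
        n += 1
        t = gun.trigger_on + n * interval           # recompute from n to avoid drift
    return times
```

Because the spawn times depend only on attributes stored on the asset, any shot containing that asset can generate its tracers without a per-shot scene setup, which is the property the answer above describes.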

Can you explain in detail the creation of the various FX elements?

We identified the main FX elements early on, before the movie was turned over (explosions, fire trails, aftermath gasoline fires, tracers, etc.), and started building templates and tests, which were presented to the client. Once the look was validated, we built a bank of background elements rendered with a static camera under various lighting conditions, which could be used by layout as placeholders, but also by compositing as mid-ground and background elements. The hero, foreground and story-dependent elements were simulated per shot, using Scanline’s Flowline and Thinking Particles for rigid bodies, hard surfaces and particulates.

In all cases, we started by doing some historical footage research, compiled the relevant material and sent it to the client for approval, which gave us very clear guidelines as to the look we needed to achieve.

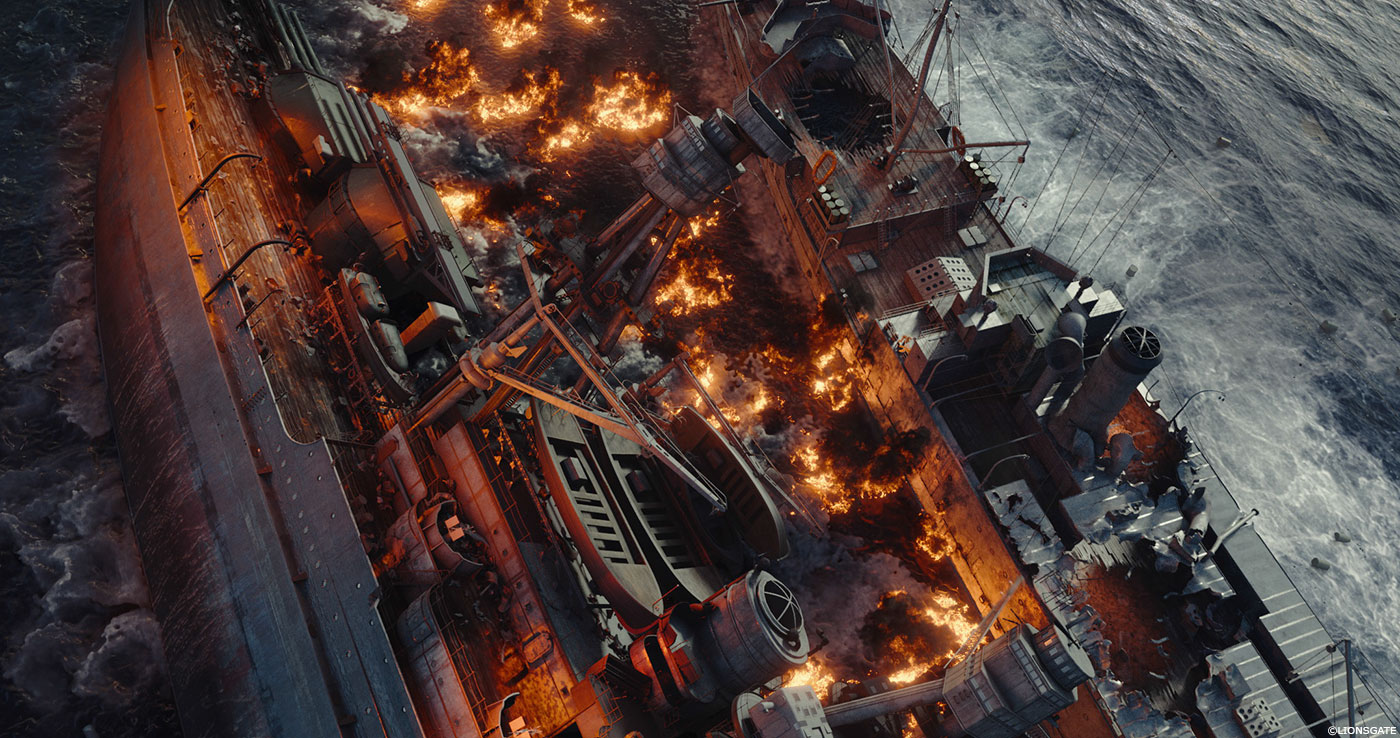

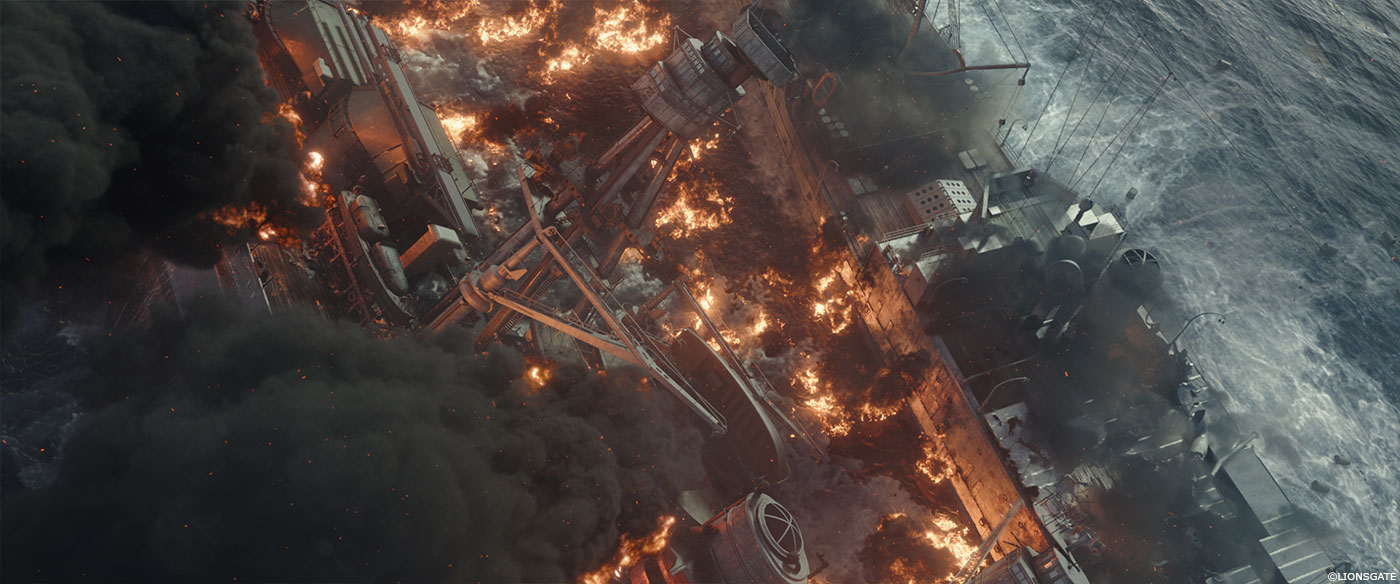

Can you tell us more about the big Arizona explosion?

The production designer, Kirk Petruccelli, directed us to historical data about the actual explosion, including a step-by-step description of what exactly happened. This, and a rare piece of footage showing the actual explosion, allowed us to re-create the choreography as closely as possible to the actual event. We started by blocking the series of shots in layout, using our practical and generic elements to get a rough version of timing and framing, which allowed for a very quick turnaround. Thanks to that, we were able to experiment with timing and framing with the director until we felt comfortable moving into actual simulation. The shots combine four distinct explosive events building in crescendo, with each component interacting fully with the environment: the breach in the hull shapes the fireball, which emits debris that triggers waves and splashes, and so on. Lastly, our comp team dressed these shots with practical elements to add the final touches of detail and photorealism.

How did you create the ocean?

Our Flowline setup uses generic non-interactive oceans (we call them 2D oceans) for the base characteristics, whereas the areas around the ships are simulated (3D oceans). As both layers share the same base values, they can be blended seamlessly. Leveraging this technique, we built a library of pre-simulated boat wakes, adapted to each hull profile and speed, and were able to constrain them to the animated ships. As the 2D and 3D oceans merge, we were able to render boats interacting with the water in every shot, with only hero action requiring actual simulations.
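The exact Flowline blending is not described, but the principle of a simulated zone that fades into a procedural ocean sharing the same base values can be illustrated with a simple distance-based mask. The Python sketch below is an assumption-laden illustration: it blends two height samples with a smoothstep falloff around the ship and picks the closest pre-simulated wake for a hull profile by speed. The function names, the 20 m and 60 m radii and the library record format are all hypothetical.

```python
def blend_weight(dist, inner, outer):
    """1 inside the simulated (3D) zone, 0 beyond it, smoothstep falloff in between."""
    if dist <= inner:
        return 1.0
    if dist >= outer:
        return 0.0
    t = (outer - dist) / (outer - inner)
    return t * t * (3.0 - 2.0 * t)


def blended_height(h2d, h3d, dist, inner=20.0, outer=60.0):
    """Mix procedural (2D) and simulated (3D) ocean heights at a point `dist` from the hull.

    This only stays seamless if the 3D simulation was seeded from the same base
    ocean as the 2D layer, as described above.
    """
    w = blend_weight(dist, inner, outer)
    return (1.0 - w) * h2d + w * h3d


def pick_wake(library, hull, speed):
    """Closest pre-simulated wake for this hull profile, matched on speed."""
    candidates = [w for w in library if w["hull"] == hull]
    if not candidates:
        raise KeyError(f"no pre-simulated wake for hull {hull!r}")
    return min(candidates, key=lambda w: abs(w["speed"] - speed))
```

The smoothstep avoids a visible seam where the weight starts or stops changing, and the nearest-speed lookup reflects the idea of reusing a library instead of simulating every ship.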

Can you elaborate on the creation of the storm during the Doolittle sequence?

This sequence offered a lot of challenges. Once we had established the strength of the ocean waves, we ran actual simulations with “Boaty and Floaty”, a custom tool that transfers boat animation onto a wavy surface and generates the correct amount of rocking and displacement. This was a delicate process, as we had to reconcile the physically accurate results with the framing and composition needed to make the shots powerful and dramatic. Once these were locked, our Flowline simulation artists, led by Lukas Lepicovsky, started generating all the secondary elements: the spectacular bow splashes, the churning wakes, the whitecaps and crashing waves. The client gave us a rare piece of footage of a plane flying through a rogue wave splash, which we used as a target to achieve maximum realism. We had to go from very detailed, shadowed splashes to simplified ones, which proved wise in the end, as it anchored the sequence in raw realism. Our creative director Nick Crew, a longtime Scanline supervisor who also supported the show, developed the look for the sequence, establishing the bright yet apocalyptic sky the director was looking for.
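How “Boaty and Floaty” computes its motion is not described. A common simplified approach to making a hull ride a wave surface is to sample the water height at the bow, stern and both sides, then derive heave, pitch and roll from those samples. The Python sketch below illustrates that simplified technique only; `float_pose`, the `height_at` callback, and the convention that `(cos h, -sin h)` points to starboard in the x-z plane are assumptions, not Scanline’s implementation.

```python
import math


def float_pose(height_at, x, z, heading, length, beam):
    """Approximate heave, pitch and roll for a hull resting on a height field.

    height_at(x, z) returns the water height; heading is in radians around the up axis.
    Assumes forward = (sin h, cos h) and starboard = (cos h, -sin h) in the x-z plane.
    """
    fx, fz = math.sin(heading), math.cos(heading)   # forward direction
    sx, sz = fz, -fx                                # starboard direction (assumed convention)
    half_l, half_b = length / 2.0, beam / 2.0
    bow = height_at(x + fx * half_l, z + fz * half_l)
    stern = height_at(x - fx * half_l, z - fz * half_l)
    stbd = height_at(x + sx * half_b, z + sz * half_b)
    port = height_at(x - sx * half_b, z - sz * half_b)
    heave = (bow + stern + stbd + port) / 4.0       # mean water level under the hull
    pitch = math.atan2(bow - stern, length)         # positive = bow up
    roll = math.atan2(port - stbd, beam)            # positive = port side up
    return heave, pitch, roll
```

Evaluated per frame against the wave surface, a pose like this gives the raw physical rocking; as the answer notes, the result then still had to be reconciled with the framing the shot needed.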

The ocean is seen in various conditions. How did you manage this challenge?

Our lighting renders, with proper environment lighting and physical characteristics, did most of the work. The high-altitude ocean shots proved to be the trickiest, as the field of view is so wide that many elements come into play: wind gusts, currents, underwater patterns. This is most visible in the Last Mission sequence, where we follow dive bombers from very high up down to water level. Lighting provided several ocean patterns, and our Compositing Supervisor Brent Prevatt and his team developed recipes to add what we called “ocean complexity”: various levels of breakup, whitecaps, cloud shadows and underwater phenomena. Although all our ocean shots were full CG, we used references from helicopter footage shot in Hawaii to guide us.

The Battle of Midway involves a massive number of elements. How did you prevent your render farm from burning out?

A few things helped. As we pushed for layout to be the central hub for blocking effects, using our practical toolset, we were able to avoid over-rendering in lighting and effects for a long period of time. As the quality of our layouts was sufficient, we were able to deliver them for the intermediate screenings, again saving us extra renders.

Taking full advantage of our multi-shot system, it was easy for us to set up key shots, render a series of low-resolution sequences, and only trigger final-quality renders very late in the game. The ability to render entire sequences without human intervention also allowed us to plan our render resources better.

Can you tell us more about the animation of the planes?

Relying mainly on Maya and curve-based animation, our animation leads, Daniel Alvarez and later Ken Meyer, were able to give our planes that extra layer of realism and drama. To help them focus on the performance (graceful arcs, sudden breaks and accidents), our rigging department, led by Devan Mussato, provided automated propeller, flap, brake and wing vibration setups, which all adjusted to the animation. Only a handful of shots required us to manually animate the mechanical components of the planes, allowing our animators to stay focused on the creative and the narrative.

What kind of references and indications did you receive for the animation of the planes?

The production had hired a flight expert, a former Top Gun pilot, who reviewed every step of the creative process, from the set builds and the actors’ actions to previz, animation and final looks. His input on individual flight patterns, speeds and how formations work was key in avoiding inaccuracies. We would receive a list of points to address after every major screening, which left enough time to fit them into our schedule. We also used historical news footage quite extensively.

Lastly, as for most things on this project, we would always refer to real life data for speeds and mechanical questions.

Which sequence or shot was the most challenging?

The opening shot of the Pearl Harbor aftermath sequence. Its dramatic importance to the movie, its relation to historical photography and its scale made it one of the hardest shots to produce. It features the largest fluid simulations ever run at Scanline, which were designed by Stephan Trojansky himself.

Is there something specific that gave you sleepless nights?

This show was my first as VFX Supervisor. I was fortunate to be surrounded by a team of the very best, yet every day was a first for me.

What is your favorite shot or sequence?

The opening shot of the Coral Sea sequence. It’s a one-off, full CG shot. The director wanted a spectacular and dramatic opening to the sequence and was searching for ideas. We were able to propose the shot and design it collaboratively, with historical references flowing both ways. Being able to collaborate with an experienced supervisor like Pete Travers and with director Roland was a great privilege. And the shot ended up looking amazing.

What is your best memory from this show?

The thrill of being on-set running the real-time station with Walter Schulz and the nCam team, modeling and texturing on site while the camera was rolling.

How long have you worked on this show?

11 months.

What was the VFX shot count?

Roughly 500 shots.

What was the size of your team?

Around 250 people have been part of the Scanline team at one point or another.

What is your next project?

FREE GUY.

A big thanks for your time.

WANT TO KNOW MORE?

Scanline VFX: Official website of Scanline VFX.

© Vincent Frei – The Art of VFX – 2019