After starting his career in 1992 at Animal Logic, Richard Stammers joined MPC in 1995. He has since worked on many of the studio’s projects, serving as vfx supervisor on films such as WIMBLEDON, THE DA VINCI CODE and its sequel ANGELS & DEMONS, and ELIZABETH: THE GOLDEN AGE.

What is your background?

I trained as a graphic designer in 1991, but my final year at university was spent predominantly doing traditional animation. My first job in the industry was at Animal Logic in 1992, where I was employed as a junior designer creating television graphics and animation. Whilst I was able to learn the vfx tools of the trade in Australia, I kept my design roots, and upon returning to London I split my time between vfx compositing and designing/directing TV graphics and commercials. I joined MPC in 1995 to focus entirely on creating visual effects, initially in commercials, before making the transition to features in 2002.

What did MPC do on this movie?

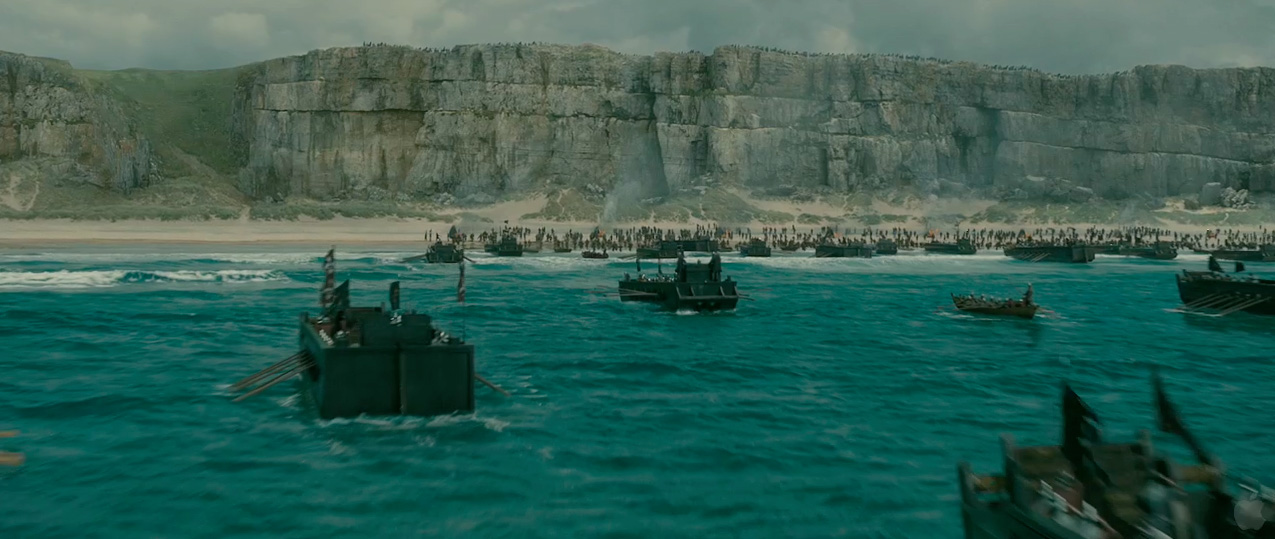

One of MPC’s main challenges was to create the invading French Armada and the ensuing battle with the English army. A CG fleet of 200 ships and 6000 soldiers was added to the 8 practical boats and 500 extras used in principal photography. MPC used Alice, its proprietary crowd generation software, to simulate the rowing and disembarkation of French soldiers and horses, with all water interactions generated using Flowline software. The defending English archers and cavalry were also replicated with CG Alice-generated clips and animated digital doubles. MPC relied predominantly on its existing motion capture library for much of Robin Hood, but a special mo-cap shoot was organised to gather additional motion clips of rowing and disembarking troops and horses.

MPC’s digital environment work was centred on two main locations: London, and the beach setting for the French invasion and final battle. A combination of matte painting and CG projections was used to recreate the medieval city, which featured the Tower of London and, in the city beyond, the original St. Paul’s Cathedral and old London Bridge under construction. The production’s football-field-sized set provided the starting point for MPC to extend vertically and laterally, and in post-production alternate digital extensions were also created so the set could be reused three times as different castle locations. Each extension was a montage of existing castles chosen by Ridley Scott and production designer Arthur Max. For the beach environment, MPC had to create the cliffs surrounding the location, which were added to 75 shots. Once the concept was approved, the cliff geometry was modelled in Maya and interchangeable cliff textures were projected onto it depending on the lighting conditions.

MPC was also responsible for creating the arrows for various sequences in the film. Practical blunt arrows were used in production wherever possible, but most shots presented safety issues, so digital arrows were animated instead. Arrows were added to over 200 shots, with 90% of these handled by the compositing team using Shake and Nuke. To cope with the volume of arrows required, MPC developed proprietary 2D and 3D arrow animation tools, which automatically generated the correct trajectory and speed and included controls for oscillation on impact.

How was your collaboration with Ridley Scott?

Very good. He’s always very clear and concise about what he wants, takes an interest in the financial implications of his requirements, and will spend the vfx budget where he feels it’s best suited. He would usually brief me with a quick sketch and often follow up with more detail by drawing over a printout of a shot. I’d get my team at MPC to interpret this in 3D as simple untextured Maya geometry over the plate, and present it back to Ridley for approval. Where there was any ambiguity over a shot’s requirements, I’d present a few options to choose from, so we had a clear brief before starting any detailed work on a vfx shot.

Can you explain to us the shooting of the French Armada and its landing?

The location for this shoot was a beach called Freshwater West in Pembrokeshire, Wales. A crew of up to 1000 people filmed there for 3 weeks in order to capture enough footage for the 20 minutes of screen time the battle was edited to. Further time was scheduled at Pinewood Studios’ Paddock Tank and Underwater Stage to complete some of the shots that were considered impractical or too dangerous to achieve on location. The production was able to build 4 real working landing craft and 4 rowboats to represent the armada, and had as many as 500 extras on some days. Ridley’s shooting style for this battle involved staging large-scale performances, each lasting 4-5 minutes, and getting as many cameras as possible covering the shots he needed. This would take some time to set up and rehearse, and then it would be frenetic for a few minutes whilst they shot. He’d do several takes, then move on to the next key stage of the battle.

The shooting conditions were extremely difficult and varied, which caused great continuity problems. Changing light and weather created the usual inconsistencies, but the tide moved at 1 metre per minute, so the size of the beach was constantly fluctuating and the shooting crew had to be equally mobile, with all equipment on 4×4s or trailers. For the vfx crew this meant the 10 cameras Ridley was using were constantly moving, so wrangling all the camera data and tracking markers, essential for our matchmove department, was a huge task. We overcame much of this by capturing all camera locations with Leica Total Station surveying equipment, and later incorporating the data into a Maya scene with a LIDAR scan of the beach location. All cameras were fitted with zoom lenses to deal with Ridley’s constant requests to reframe for particular compositions, and we’d often find takes that had been shot at half a dozen different focal lengths. Despite my reminding Ridley that we needed to avoid zooming during takes (because of the added complexity for the matchmove process), inevitably some of the shots later turned over to MPC were incredibly difficult to work with.

How did you create those shots and what was the part of CG in the plates?

During the end battle most of MPC’s work was supporting what was already present in the plates. In some cases the number of extras was sufficient, and we’d only be adding a few boats in the background. But with 10 cameras filming and only 8 practical boats, most shots needed MPC’s digital armada, CG soldiers or environment work to augment the background. There were also a handful of wider shots where MPC created the entire invasion or battle, and much of the background landscape too. Each CG shot went through the same basic pipeline: first the film scans would go to the matchmove department for camera tracking, and to the comp department for colour balancing to create a ‘neutral’ grade for consistent CG lighting.

The prep team would also handle any clean-up, such as marker removal or camera crew removal, at this stage. Once a Maya camera was available, the environment department would create the cliffs and the layout team would place the armada, which started from a master boat formation, along with animation cycles that could be scaled or offset to suit the conditions of the sea. We’d usually go through a couple of rounds of refinement to make it work compositionally and in the context of the cut. Once I had approved the boat layout, the crowd and layout teams set to work with our ALICE software to place all the soldiers in the boats and on the beach with the appropriate animation. We’d then send a temp version to the editorial team to cut in, so Ridley and Pietro Scalia, the editor, had a chance to comment. By this point we knew the CG content of each shot and could accurately identify the rotoscoping requirements, creating all the mattes necessary to place the CG behind the foreground live action. Whilst we waited for feedback on our layouts, we continued into lighting and rendering and got the effects team working on the water interactions for the boats and crowds. Once we’d established a few key shots, this process worked well. There was generally little or no feedback from Ridley, so we could progress into comp quickly and get the shots looking more final.

Can you explain the creation of a crowd shot with your software, Alice?

The first stage of preparing for a large crowd show like Robin Hood is to identify the motions that are going to be required. ALICE has a very sophisticated underlying motion synthesis engine that can take multiple inputs from any combination of motion capture clips, keyframe animation cycles and physics simulations, and manipulate them to give the resulting simulations we see on screen. This gives us a great deal of freedom when deciding how to tackle a show.

For Robin Hood we relied predominantly on MPC’s existing mo-cap library, but extended it with new mo-cap data captured over a 2-day shoot, specifically targeted at the disembarkation of soldiers and mounted cavalry, along with the rowing motions for the crews of each of the different boats. Once all the new motions arrived at MPC, they were processed into the existing library through our motion capture pipeline, and our crowd team started to create the motion clip setups and motion trees that would drive the agents for the whole show.

Because ALICE is fully proprietary, we can quickly write anything from a new behaviour, such as inheriting motion from the boat a crowd agent is occupying, to simple tools that automate and simplify tasks for other departments. For the first time, ALICE was used by our Layout department, who took on the challenge of populating the whole Armada.

The crowd team produced a large number of caches for the different rowing motions and disembarkations required for each type of boat. We then wrote a simple interface that the Layout team could use to rapidly set up, randomize, change and offset the caches, populating all of the boats in a few simple steps.
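The interface itself isn’t described beyond this, but the core idea (filling every seat from a pool of pre-simulated caches, with a randomized choice and time offset so neighbouring rowers don’t move in lockstep) can be sketched in a few lines. This Python is a hypothetical illustration, not ALICE code: the `populate_boats` function, the `SeatAssignment` record and all boat and cache names are assumptions.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SeatAssignment:
    boat_id: str
    seat: int
    cache: str         # name of a pre-simulated rowing/disembark cache (hypothetical)
    frame_offset: int  # start-frame offset so neighbouring agents are out of sync


def populate_boats(boats, caches_by_type, max_offset, seed=0):
    """Fill every seat of every boat with a cache and a random time offset.

    boats: dict of boat_id -> (boat_type, seat_count)
    caches_by_type: dict of boat_type -> list of cache names valid for that type
    max_offset: largest frame offset (inclusive) applied to a cache
    seed: fixed seed so re-running the same layout gives the same result
    """
    rng = random.Random(seed)
    assignments = []
    for boat_id in sorted(boats):  # sorted for a deterministic order
        boat_type, seat_count = boats[boat_id]
        options = caches_by_type[boat_type]
        for seat in range(seat_count):
            assignments.append(SeatAssignment(
                boat_id=boat_id,
                seat=seat,
                cache=rng.choice(options),
                frame_offset=rng.randint(0, max_offset),
            ))
    return assignments


if __name__ == "__main__":
    boats = {"landing_craft_01": ("landing_craft", 4), "rowboat_07": ("rowboat", 2)}
    caches = {
        "landing_craft": ["row_heavy_a", "row_heavy_b"],
        "rowboat": ["row_light_a", "row_light_b", "row_light_c"],
    }
    for a in populate_boats(boats, caches, max_offset=24, seed=42):
        print(a)
```

Seeding the generator per layout keeps a populated armada reproducible: re-running with the same seed rebuilds the identical layout, while changing the seed re-shuffles it, which mirrors the "randomize, change" steps described above.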

Once the first pass had gone through layout, the crowd team would take over any shots that required more complex simulations to top up the action. This generally involved tweaking or adding to the disembarking to make it feel more chaotic, with actions ranging from people being dynamically hit by arrows to stumbling through the water, whilst also providing the data required for the FX team to add the water interactions.

Once I was happy with the combined work of crowd and layout, the next stage was the cloth simulation for all of the agents. Most agents only needed the looser cloth of the lower body, and any flags they were carrying, to be simulated; this was handled by ALICE’s built-in cloth solver before the resulting caches flowed automatically into the FX and lighting departments.

A very large number of arrows are fired in this movie. How did you manage this?

Knowing that we had a large number of arrow shots on the show meant we needed an efficient process to deal with them. I’d had great success on a past show, WIMBLEDON (2004), animating tennis balls to mimed rallies, much of which was achieved as a 2D-only compositing solution in Shake. I felt we could do the same on Robin Hood, as the trajectories were similar but even simpler. One of the show’s compositing leads, Axel Bonami, took the process further by developing a series of Shake macros that only required the artists to place the start or end position of an arrow. The macro would use a still of a real arrow at the perspective best suited to the shot and then automate the animation process. He added further controls for impact oscillation so the artists could dial this in if necessary. Arrows were added to over 200 shots, with 90% of these handled by the compositing team using Shake and Nuke. MPC also developed proprietary 3D arrow animation tools to handle large volumes of arrows where the 2D solution was unproductive. This was essentially a Maya particle system, but it could be tied into the ALICE pipeline to allow crowd agents to fire arrows or be killed by them.
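The Shake macros aren’t documented here, but the kind of automation described, where an artist supplies endpoints and the tool derives the in-flight motion, the arrow’s heading and a post-impact wobble, can be sketched as follows. This is a hypothetical Python illustration, not MPC’s tool: the parabolic lift stands in for a true ballistic arc, the exponentially damped sine stands in for the impact oscillation control, and every function name and parameter is an assumption. Coordinates assume a y-up screen space, as in Shake and Nuke.

```python
import math


def arrow_positions(start, end, flight_frames, sag=0.0):
    """Screen-space (x, y) positions for an arrow flying from start to end.

    Straight-line interpolation plus a parabolic lift of `sag` pixels that
    peaks at mid-flight (a stand-in for a ballistic arc; y is up). Returns
    flight_frames + 1 points: the first is `start`, the last is `end`.
    """
    (x0, y0), (x1, y1) = start, end
    points = []
    for f in range(flight_frames + 1):
        t = f / flight_frames
        lift = 4.0 * sag * t * (1.0 - t)  # 0 at both ends, equals sag at t = 0.5
        points.append((x0 + (x1 - x0) * t, y0 + (y1 - y0) * t + lift))
    return points


def arrow_headings(points):
    """Rotation in degrees for each frame, taken from the direction of travel.

    The last frame reuses the final segment's heading so the arrow keeps its
    angle at the moment of impact.
    """
    headings = [
        math.degrees(math.atan2(b[1] - a[1], b[0] - a[0]))
        for a, b in zip(points, points[1:])
    ]
    return headings + headings[-1:]


def impact_wobble(amplitude_deg, frequency_hz, decay, fps, frames):
    """Rotation offsets (degrees) after impact: an exponentially damped sine.

    Starts at 0, oscillates at frequency_hz and dies away at a rate set by
    `decay` (per second), standing in for a dial-in oscillation control.
    """
    return [
        amplitude_deg
        * math.exp(-decay * f / fps)
        * math.sin(2.0 * math.pi * frequency_hz * f / fps)
        for f in range(frames)
    ]


if __name__ == "__main__":
    # Hypothetical shot: 12-frame flight, then 24 frames stuck in the target.
    path = arrow_positions(start=(200, 900), end=(1500, 400), flight_frames=12, sag=150)
    angles = arrow_headings(path)
    impact_angle = angles[-1]
    wobble = [impact_angle + w for w in impact_wobble(6.0, 5.0, 4.0, 24, 24)]
    for frame, ((x, y), a) in enumerate(zip(path, angles)):
        print(f"frame {frame:2d}: pos=({x:7.1f}, {y:7.1f}) rot={a:6.1f}")
    print("post-impact rotation:", [round(a, 1) for a in wobble[:6]])
```

Deriving the rotation from the direction of travel is what lets a single still of a real arrow be reused: the artist never keys the angle by hand, and the wobble is simply added on top of the impact angle.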

How long have you been working on this project?

I started in March 2009, and we delivered our final shot on 12th April 2010, so around 13 months in all, which seems to be about the minimum these days for supervising the VFX on a show right the way through.

What was the biggest challenge?

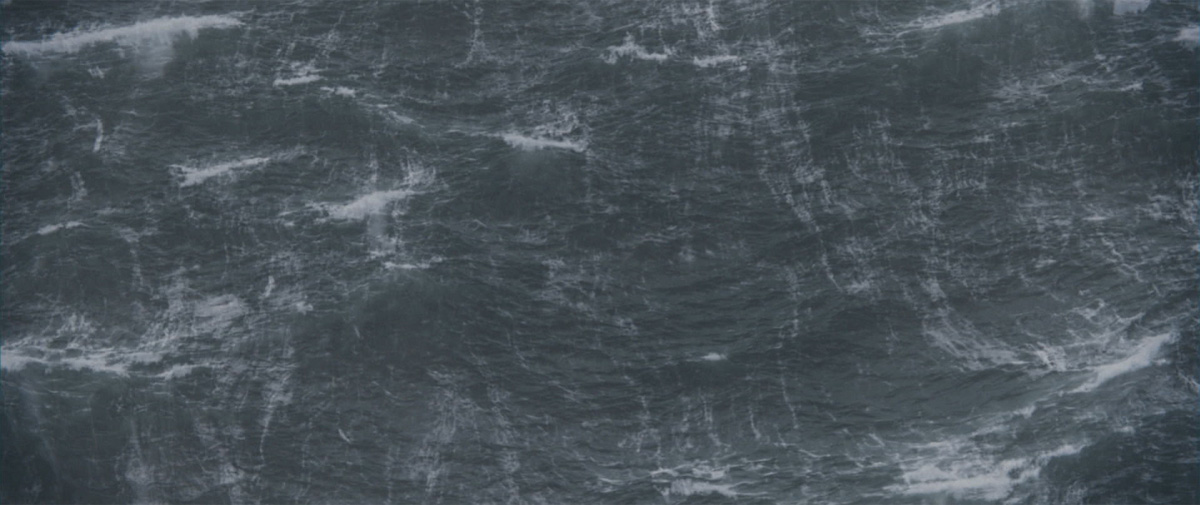

There’s a sequence of shots where the Merry Men return to England in King Richard’s ship. The production wasn’t able to shoot this boat at sea, and Ridley wanted it to be windy and rough, so the chances of shooting the right kind of sea plate were slim. It was storyboarded as one wide shot only, so we looked into stock footage, but Ridley wasn’t happy with any of the options. Instead he turned to a previous film of his, ‘White Squall’, and cut in a sequence of shots from it, which featured a modern sailing ship and included insert shots of the sails. The 5 shots we created involved replacing this ship with a medieval CG ship. There were no similarities between the 2 styles of boat, and furthermore it was so close to camera that we had to completely rebuild our asset to a higher level of detail and populate the deck with CG sailors, horses and wind-blown canopies. We had no camera information for the plates, and they were ‘scope anamorphic’, so the matchmoves were tricky too. The finals were beautiful – a real testament to the teams that made it all work – and a great example of just dealing with whatever gets thrown our way!

Were there shots that made you lose your hair?

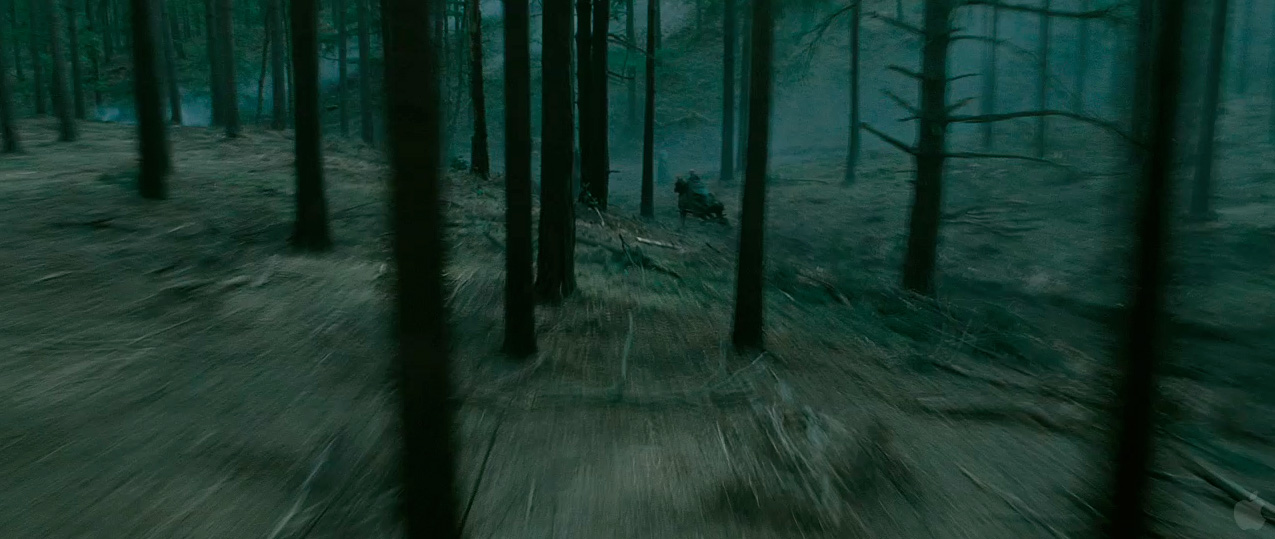

Well, things never got that bad, but there were a few shots that I did worry over. One was an arrow POV shot representing the moment at the end of the film when Robin Hood fires a deadly shot at Godfrey, Mark Strong’s character, as he escapes the battle on horseback. Pietro felt that this was an important moment in the film that mirrored a similar moment near the beginning, when Robin wounds Godfrey in a similar attempt to kill him. There were many discussions on how we could shoot it, but no clear solution that worked with the limitations of the beach location. MPC created a previs of the shot, as it was important to visualise the key elements required and how we could break it down into achievable chunks. The first half of the shot sees mostly sky and digital environments that we were already creating, but the second half flies right into the back of Godfrey’s neck whilst he gallops along the shoreline. As the shot took place in the shallow waters of the beach, this was something I did not want to attempt as a full CG shot, because of the complexity of recreating the sea. I opted to shoot a moving plate of the beach and sea to match the previs as closely as possible, and separately shoot Mark Strong’s stunt double as a bluescreen element, so we could manipulate it to work for the shot.

The practical and cost-effective solution was to shoot the background plate with a miniature helicopter, and the foreground stunt man riding a partial mechanical horse, with MPC creating a full CG replacement horse. 20 mph winds hampered the plate shoot and left us with only a few usable takes, which required significant stabilisation, and the camera’s proximity to Godfrey’s neck required a slow Super Technocrane move to avoid injuring the rider. Because we had to speed-ramp the shot much faster in post, we compensated by having the stunt man perform his riding actions in slow motion. It was an uncomfortable set of elements to work with and required a lot of manipulation to piece together. The final solution involved creating a BG almost entirely in CG but retaining the live action sea, which was camera-projected back through our previs camera. Godfrey’s element was successfully pinned to a hero CG galloping horse, and we started getting something that was working. But the smooth nature of an arrow trajectory made the shot look so clean and out of context with the surrounding shots, and this is where most of my concern lay. It was always going to be delivered late in the schedule, the last week in fact, and there would be no time to re-conceive the shot another way if Ridley didn’t like it. So we set about adding as many of the attributes of the surrounding shots as we could. We changed the sky to something less pretty, added camera shake and layers of smoke to pass through, dirtied up the beach by matte painting extra detail like clumps of seaweed, and added more depth hazing overall. With the shot carefully graded to match the shot it cut to, we had success. It took the shot far enough away from the feel of the previs, and it worked really well in the cut – it’s a great moment in the film.
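The trade-off between the slow-motion performance and the speed ramp comes down to simple arithmetic: an action performed at half speed and played back twice as fast reads as real time. The Python below is a simplified, hypothetical model of that idea and of how a variable speed ramp maps output frames back to source frames. It ignores frame blending and interpolation, and the function names are assumptions rather than any compositing package’s API.

```python
def ramp_source_frames(speeds, start=0.0):
    """Map each output frame to a (possibly fractional) source frame.

    speeds[i] is the playback speed at output frame i: 1.0 is real time,
    2.0 is twice as fast. The source position advances by that amount
    after each output frame, so a rising speed list gives a speed ramp.
    """
    frames = []
    src = float(start)
    for speed in speeds:
        frames.append(src)
        src += speed
    return frames


def linear_ramp(start_speed, end_speed, n_frames):
    """Speeds that change linearly from start_speed to end_speed over n_frames."""
    if n_frames == 1:
        return [float(start_speed)]
    step = (end_speed - start_speed) / (n_frames - 1)
    return [start_speed + step * i for i in range(n_frames)]


def apparent_action_speed(performance_rate, playback_speed):
    """How fast a performed action reads on screen after retiming.

    performance_rate: speed the action was performed at, relative to real
    time (0.5 = performed in slow motion at half speed).
    playback_speed: retime factor applied in post (2.0 = twice as fast).
    """
    return performance_rate * playback_speed


if __name__ == "__main__":
    print(apparent_action_speed(0.5, 2.0))  # half-speed riding, doubled: 1.0
    ramp = linear_ramp(1.0, 3.0, 5)          # [1.0, 1.5, 2.0, 2.5, 3.0]
    print(ramp_source_frames(ramp))          # [0.0, 1.0, 2.5, 4.5, 7.0]
```

Because the source position advances faster and faster through the ramp, any action in the plate speeds up by the same factor; performing the riding slowly keeps it from looking frantic once the ramp is applied.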

What will you take away from this experience?

The shot exceeded my expectations, which is always great. As a VFX supervisor you have to be a jack-of-all-trades, but you work with teams of artists who are masters of their disciplines, so high expectations are taken for granted; exceeding them is always a bonus.

What is your next project?

Well, nothing is confirmed. I’m busy at MPC pitching for possible new shows, but nothing I can talk about yet.

What are the four films that have given you the passion for cinema?

As a student, sequences created by Ray Harryhausen and Terry Gilliam are what inspired me to take up animation. TERMINATOR 2 and JURASSIC PARK both had jaw-dropping moments, which to me pushed the boundaries of VFX at a time when I was quite junior in the industry. They inspired me to do better. I always loved David Lynch’s DUNE and Ridley’s ALIEN; I’m happy to watch these again and again, and few films have that effect on me these days.

Thanks so much for your time.

DETAILED SHOT BREAKDOWN

Robin and the Merry Men leaving the Tower of London.

The foreground live action plate was shot on the backlot of Shepperton Studios. MPC created a digital matte painting of the castle walls, the Tower and the river. The element used for the river was taken from a plate shot at Virginia Water, Surrey. Ridley wanted the town of London to be full of life and the river bank to be as busy as a market, so MPC bolstered the limited number of extras with around 200 CG people in the town, CG guards on the castle and cloned live action boats on the river. In the foreground, additional huts were created to increase the housing density, and multiple layers of smoke were added. When reviewing the final version of this shot at MPC, Ridley said he liked it so much he wanted to live there! This is one of 14 London environment shots that MPC created for Robin Hood.

Robin and the Merry Men arriving at the Tower of London in King Richard’s ship.

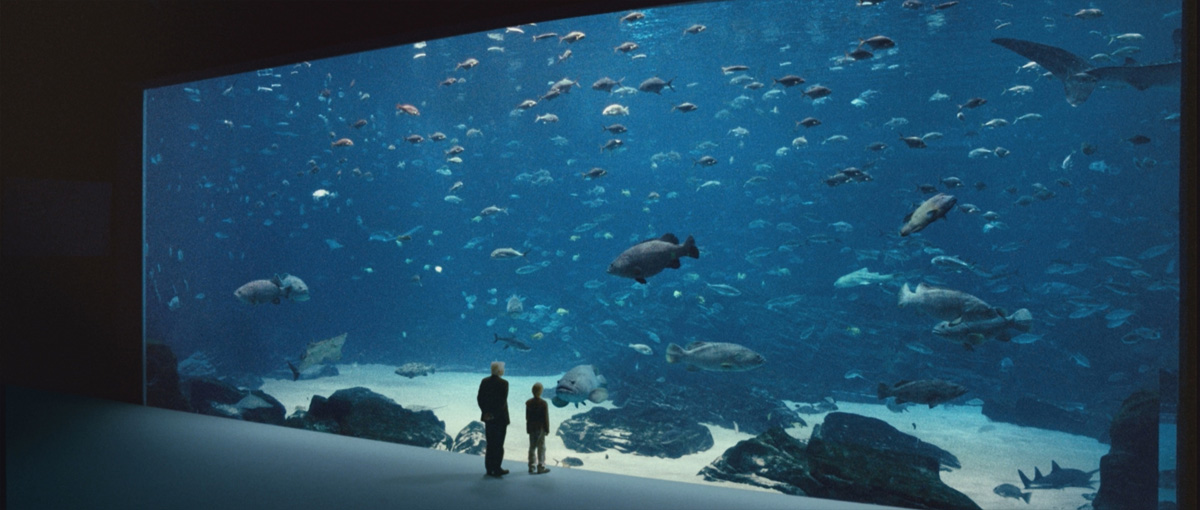

The live action helicopter plate was shot on location at a lake in Virginia Water, Surrey. The aerial unit used a Panavision Genesis camera for their photography. MPC created a CG environment in which much of the original backplate was replaced with the Tower, the surrounding city of London and the landscape beyond. The design of the Tower and its immediate surroundings was a collaboration between the Visual Effects and Art Departments, with the final layout and orientation coming from meetings with Ridley Scott, production designer Arthur Max and visual effects supervisor Richard Stammers. Whilst quite a substantial set was constructed as a riverside entrance to the Tower, the jetty, wall and archways occupied only a small part of the plate in this case, but provided MPC with the ‘anchor point’ for their digital extensions. Environment lead Vlad Holst built the city in Maya with basic geometry to represent all the key features. This was presented to Ridley for comments, and some adjustments were made before work on the matte-painted projections started. The final DMPs, created by matte painter Olivier Pron, extended the city to the horizon and incorporated the original stone London Bridge under construction and old St Paul’s Cathedral in the distance. The lake was extended into a river as a rendered CG element, in order to incorporate all the reflections of the new digital environment. The banks were populated with CG boats and CG crowds gathered to witness what they believed to be King Richard’s return from the Crusades. King Richard’s ship and some of the foreground rowboats were in the original plate; these were supplemented with 2D replications, and the motor wake of Richard’s ship was removed.

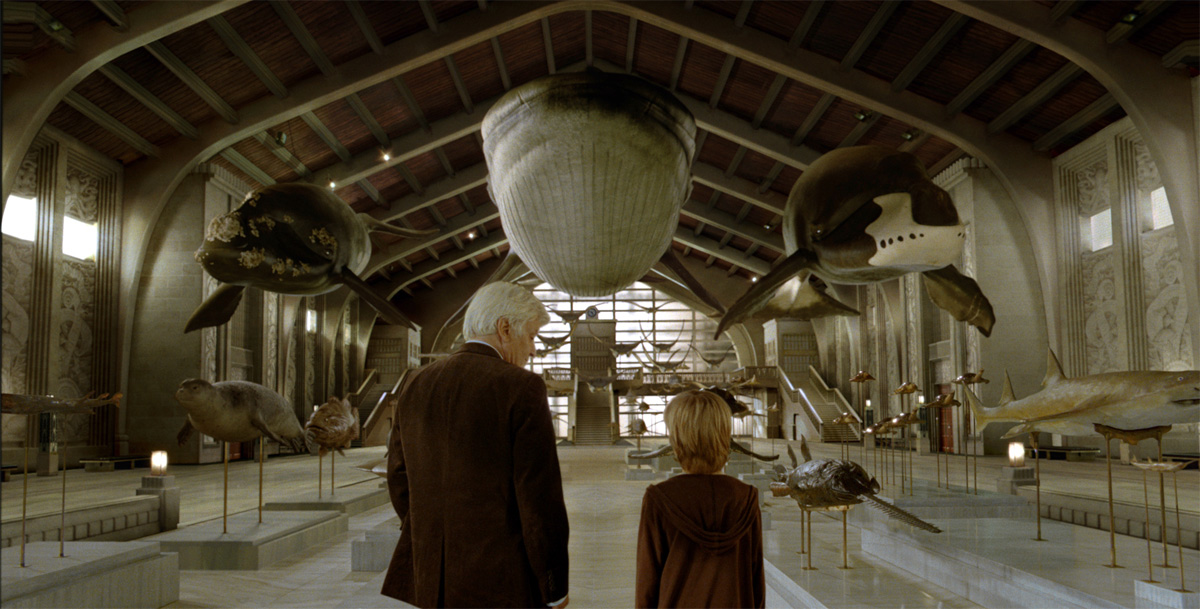

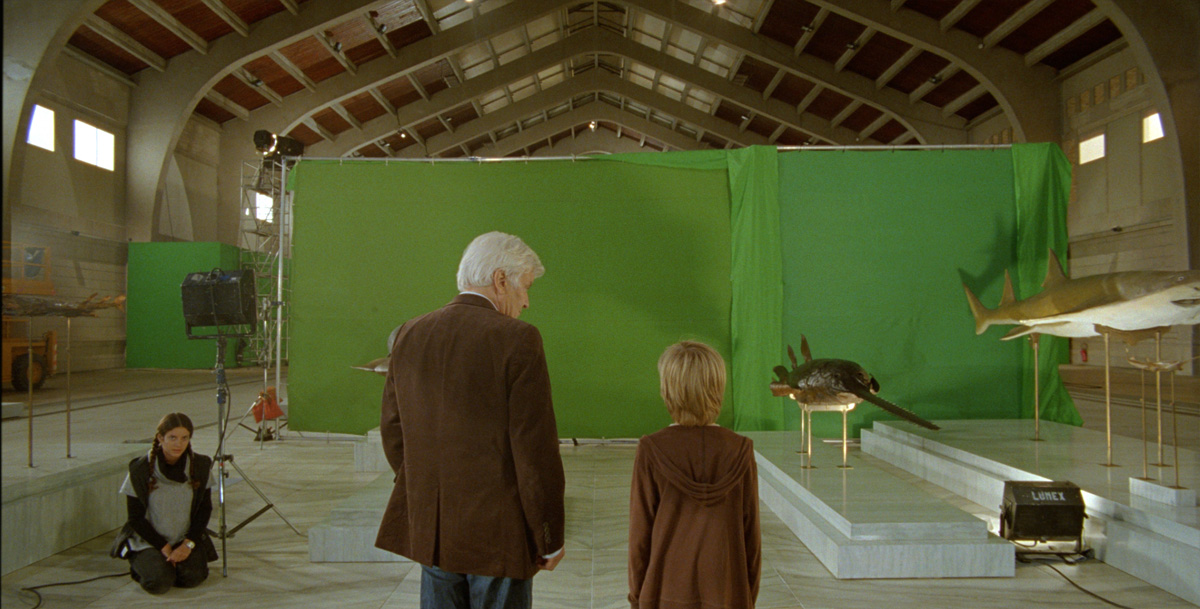

The combined armies of King John and the Northern Barons approach the beach where the French Armada has begun landing.

The live action helicopter plate was shot on location at Freshwater West, Pembrokeshire in Wales and was captured using a Panavision Genesis camera.

This shot was turned over to MPC early in the schedule and became a key development shot for testing the look of our CG assets. It was used to conceptualise the digital environment work, which required the creation of the cliffs that surround this location, a necessary story point to give the English archers a tactical advantage. The shot was also used to determine the layout and number of boats in the French Armada and the number of soldiers on the beach. It paved the way for over 150 other shots that required views of the cliffs or the French Armada.

For the design of the cliffs, MPC’s environment lead Vlad Holst created some Photoshop concepts for Ridley. Initially these were based on the white chalk cliffs of Dover, as this was the scripted location of the French invasion. The final design, however, was based on the practical need for a real cliff location close to the main beach location in Wales, where non-VFX shots could be filmed. These cliffs, whilst quite different from the concepts, were a good geological match to the beach, and ultimately provided a better blend with the sand dunes behind it. Textures of the cliffs captured by the aerial unit were tiled, graded and projected onto simple Maya geometry that blended into a LIDAR scan of the beach location. The cliff geometry went through a number of shape variations for Ridley’s comments, with the approved version including a wide access path to the beach for the bulk of the cavalry and a narrow gorge from which Marion could join the battle later.

Ridley wanted the end battle to feel as though it involved around 2000 soldiers on each side. The French Armada was made up of 200 CG boats, and this shot featured about half the visible fleet and 1500 disembarked French soldiers. The practical photography provided a good guide for scale and lighting, with 4 landing craft, 4 rowboats, over a hundred extras on the beach and 25 cavalry in the foreground. Ultimately much of this was replaced with CG when the beach was widened to maintain continuity of the tide position throughout the sequence. Boat layout and animation were handled in two stages, separated by a period in which matchmove artists roto-animated the waves in the backplate. This allowed detailed animation and interaction with the ocean surface to be achieved.

MPC’s crowd simulation software, ‘Alice’, provided digital artists with the tools to handle the number of CG soldiers required. Alice used MPC’s motion capture library for most of the animations, with specific actions such as rowing and disembarking soldiers and horses captured in a dedicated mo-cap shoot. Digital effects elements such as wakes and splashes were created for the boats and CG soldiers in the water using pre-cached Flowline simulations, which were automatically placed with each Alice crowd agent at render time.

The small number of foreground cavalry was multiplied with the addition of full CG riders. Safety regulations prevented the helicopter’s camera from getting close enough to the live action cavalry, so Ridley requested that MPC add CG characters right into the foreground and under the camera. For this task, ‘Alice’ crowd agents, which are inherently suited to being smaller in frame, were promoted to a high level of detail. Additional modelling, texturing, animation, cloth and fur simulation were required to provide the extra details and nuances in what became almost full-frame CG renders. The effects team again provided interaction elements for the horses’ hooves, in the form of mud clumps, grass and dust, augmented further in the final composite with additional live action dust elements.

// WANT TO KNOW MORE?

– The Moving Picture Company: Dedicated page to ROBIN HOOD on MPC website.

© Vincent Frei – The Art of VFX – 2010