Stephane Ceretti is back on The Art of VFX. After PRINCE OF PERSIA and X-MEN: FIRST CLASS, he talks to us about his work on set on CAPTAIN AMERICA.

How did you get involved on this film?

Victoria Alonso, who is in charge of all the visual effects at Marvel, was looking for a second unit VFX supervisor for CAPTAIN AMERICA: THE FIRST AVENGER and she spoke with Diana Giorgiutti and Danielle Costa who were working on THOR to find out if they knew someone in London. I had worked with both of them before and they suggested that Victoria contact me, which she did. I met her in London, then went to LA for meetings with Method Studios, which I had just joined, and met with Mark Soper (VFX producer) and Chris Townsend (VFX supervisor) who were already preparing the shoot of CAPTAIN AMERICA. Looks like they all liked me and Method agreed to loan me out to Marvel for the duration of the shoot in the UK.

Can you tell us about your collaboration with director Joe Johnston and Production VFX Supervisor Christopher Townsend? How did you split the supervision work?

I was in charge of everything that would be shot on second unit, including all the plates we had to shoot in the various locations like Liverpool, Manchester, Wales and the entire aerial shoot in Switzerland. We had constant coordination meetings between Chris and myself and the VFX team to make sure that nothing was left behind. Sometimes second unit would start a sequence and we would hand over information to the main unit regarding what we had shot and the plates we had, and sometimes it was the other way around. The director, Joe Johnston, shoots in a very organic way, without previs, so we had to make sure we were prepared for any options. It was very intense because the units were not shooting in the same locations, so we had to make sure communication was constantly flowing between the two units. Chris was spending all his time with Joe Johnston gathering information. I was spending most of my time with the second unit director Jonathan Taylor. A large part of the second unit team had done a lot of the action sequences on the Bond movies, and we had a lot of fun with practical stuff to do on ‘Cap.’ I must say that they were all very helpful and consistently tried to provide the VFX team with all we needed.

What was the average size of the sets?

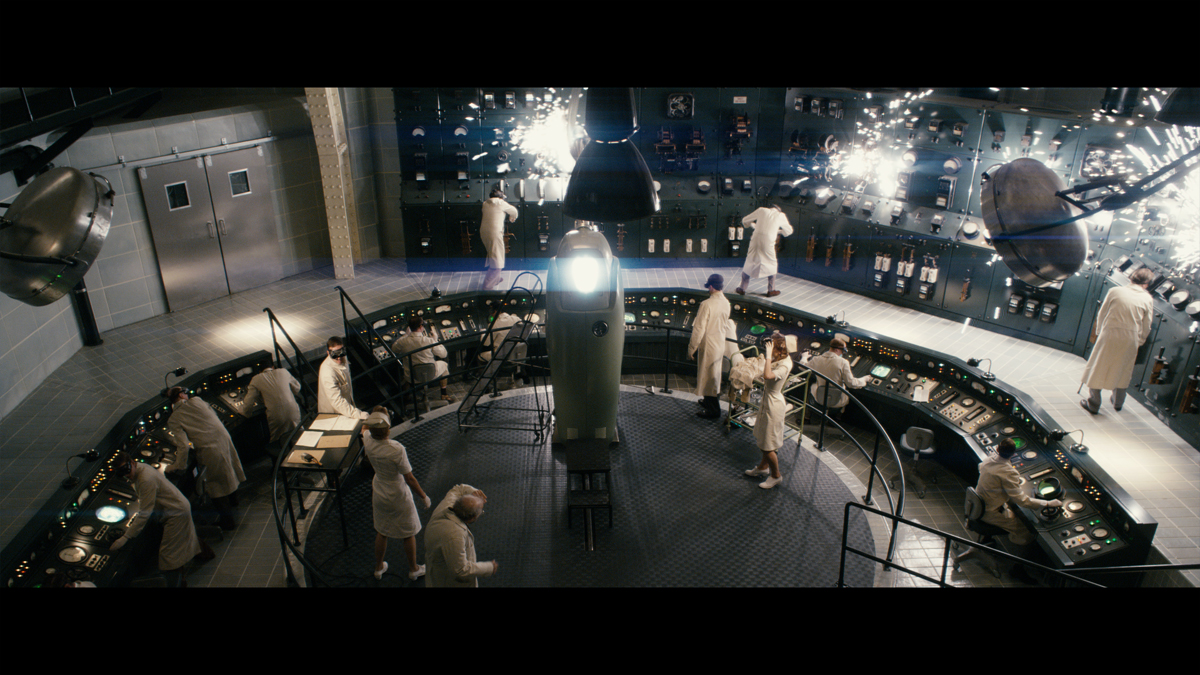

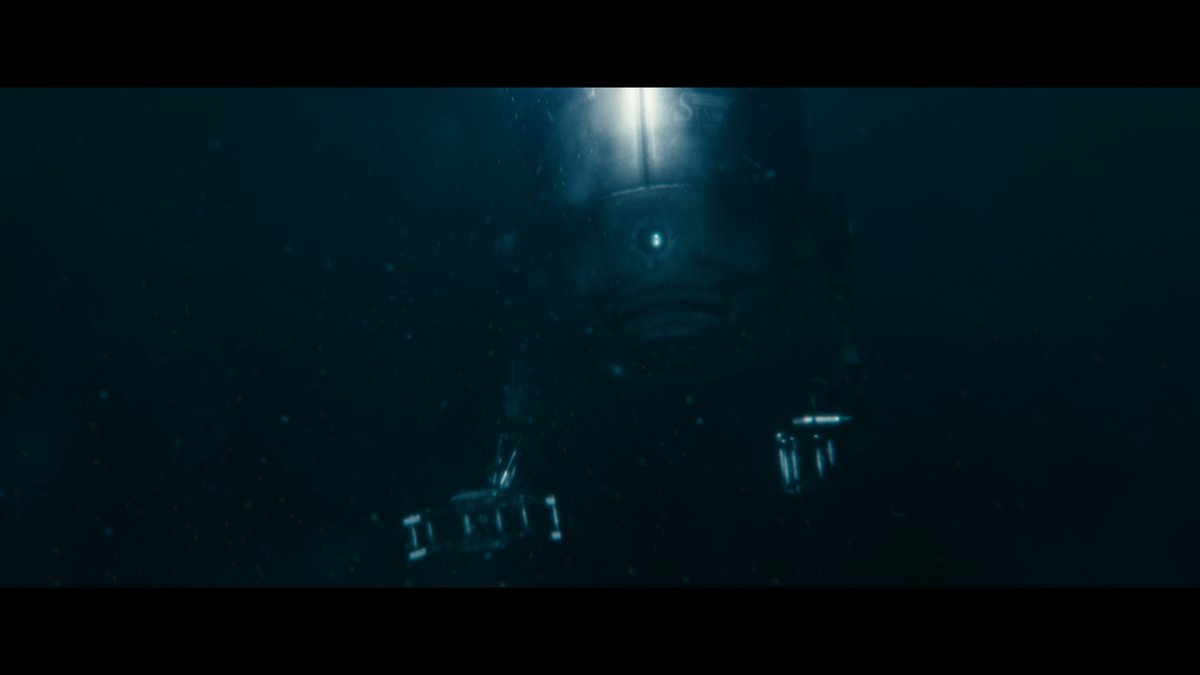

Well, we had everything from very small sets (a corridor) to very large streets in Manchester and forests behind Pinewood. Interior sets were really big and detailed. The Bomber was a huge set built on a gimbal which could be moved in every direction, and the Hydra Factory set used all of the space in the H stage at Shepperton. The NY World’s Fair set was built outside in Longcross and that was one of the biggest green screens I have ever seen.

Our biggest exterior “sets” were definitely the streets of New York that we did in Manchester and Liverpool. The art department dressed a full street in Manchester to look like 1940s Brooklyn and we had all the props and cars and extras to fill the street with the amount of life you would get there. These were huge open air sets.

What were the important elements of the battle scenes that you then forwarded to the different vendors?

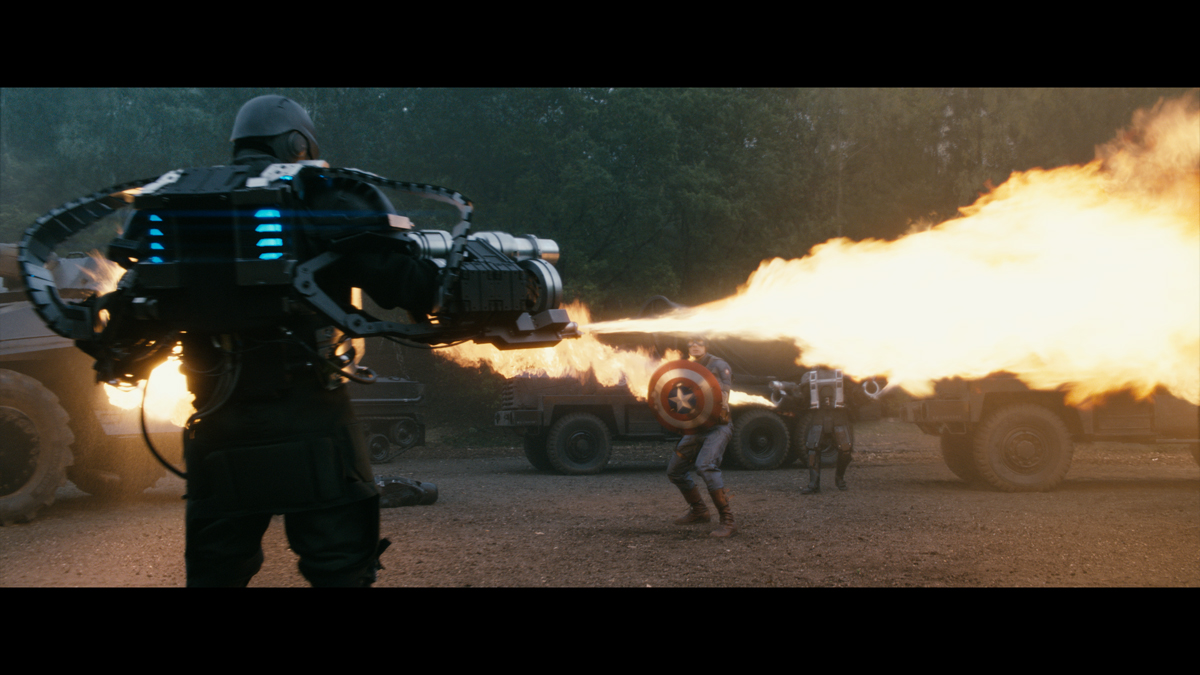

There were a lot of plates shot for all the green screen elements. We shot plates for the car chase and the bike chase, as well as the train and plane sequence plates, which we shot in Switzerland. Also, we had a big element shoot at the end with countless explosions and various other elements needed for the entire show. We shot some crowd elements as well. It was a lot of things to track and ID so that we could ensure everything was covered.

On top of that, the decision was made to shoot clean plates for every shot because of the post 3D conversion. We aimed to get as many as possible but sometimes in action sequences getting a clean plate does not make much sense, especially with that many cameras (up to five on the big action sequences, sometimes more) and lots and lots of layers with cars, crowd, smoke, explosions…

What equipment did you use to identify and capture the information necessary for VFX?

We had a great team of matchmovers. Mike Woodhead was with me in second unit all the time, as well as Natalie Lovatt who coordinated and gathered all the information to communicate to the main unit and later on to our vendors. The equipment we had on the set was quite standard, with a survey station, grey/silver balls and Macbeth charts, and many digital cameras to gather textures and references. We also had witness cameras for all the scenes involving Red Skull. We did not do any of the skinny Steve work in second unit but we knew that all the fights with doubles of Red Skull could potentially need some work, so we captured reference material with witness cams every time we had Red Skull with us for a fight or a stunt. We also did that for all the shots involving the ‘blue ray of death’ where we would have to paint out and vaporize people!

All sets were surveyed and scanned in 3D using LIDAR. All the actors were scanned in 3D by 4Dmax, but that was taken care of by the main unit guys as they had the actors with them most of the time.

Did you use a lot of bluescreens?

It was green screens mostly because Captain America is blue! But we had a good amount of green costumes mixed in with this as well… but yes, the H stage in Shepperton and some of the Longcross set had huge amounts of green screens. But on location we tried to avoid having any of these and relied on rotoscoping to do the job.

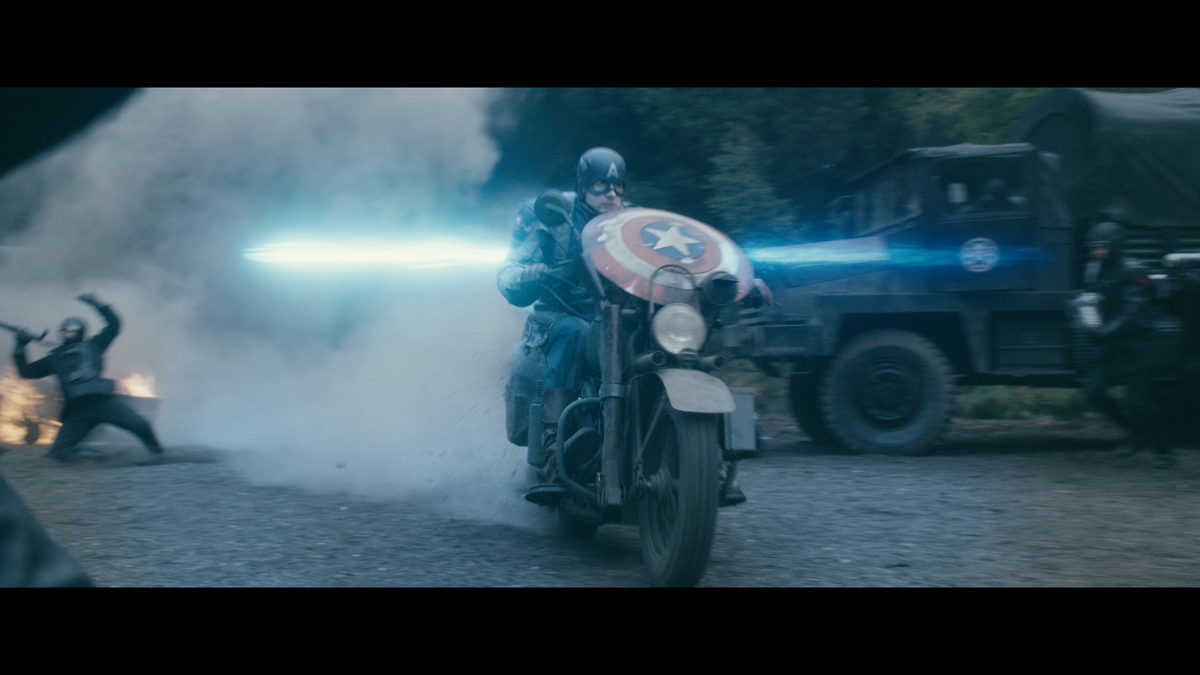

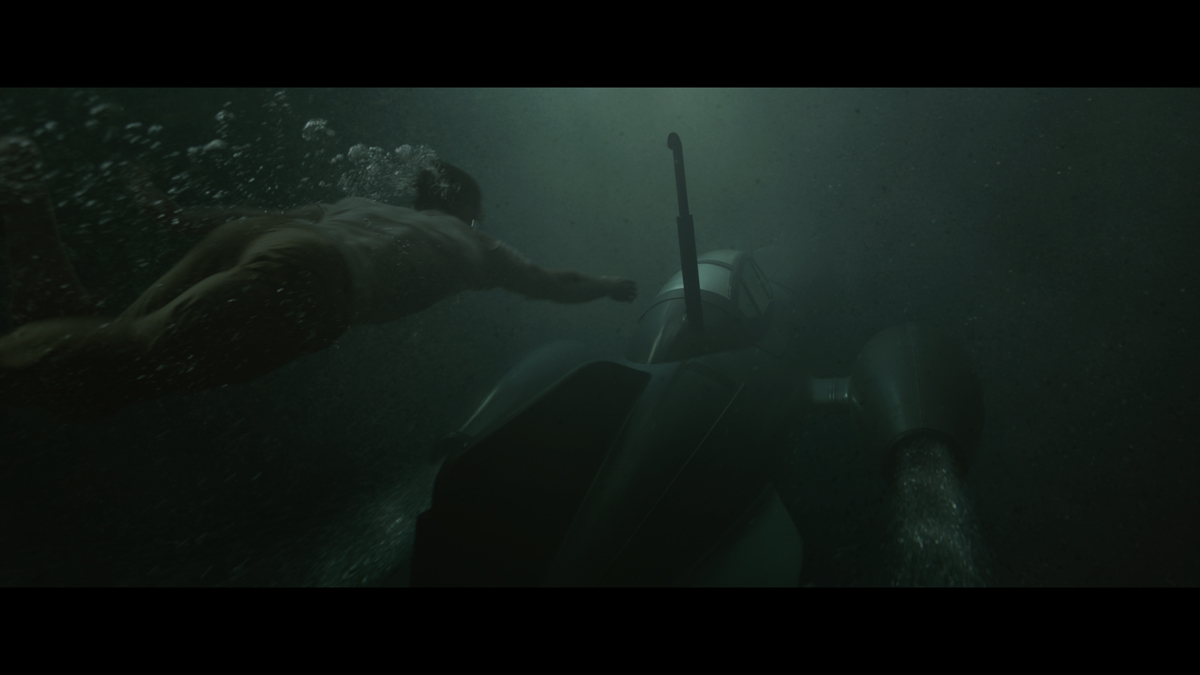

How was the shooting of the motorcycle chase sequence? What kind of effects and preparation did this sequence require?

Very cool, but very demanding. Shooting in a forest and having to run around for miles and miles was quite tiring! Plus we had some issues with some of the bikes that were a tiny bit capricious. We shot the chase in the back of Pinewood in a forest called Black Park, which you see in a lot of movies. It’s a great looking place and really perfect to shoot this kind of sequence. We had a small helicam to shoot from the air flying in between the trees, and then travelling cars and quads to follow the bikes and get some cool shots. We even shot some of the close up impact shots with Canon 5D cameras, but I don’t know if they used them in the cut. Full cooperation between the stunts, the special effects guys who did the explosions and myself was necessary to make sure we would again get all the plates necessary for the green screen elements with the actors. I sometimes had my own camera splinter unit to collect plates while the second unit was shooting some other bits of the sequence that were not VFX related.

At the very end of the sequence, Captain America gets to the entrance of the Hydra base and jumps on the ramp. That was shot in Longcross studios.

Can you walk us through one of your typical days?

Get up very early and get to the studio. Once on the set, make sure the team is ready, listen to Jonathan Taylor (second unit director) and Terry Madden (second unit First AD) about the news of the night, and then get on with shooting as much as we could!!! No days were the same to be honest, there were new challenges every day. I really had to be always aware of what my essential needs would be each day and what was absolutely necessary for us to get in terms of elements. But as I said earlier, the second unit team was great and cooperation was good all around.

How many shots were filmed on average by the second unit?

I can’t really say, but a lot! I would say we shot around 600 to 700 VFX shots in second unit but not all of them made it to the cut, obviously.

How was the collaboration with the film crews?

Excellent. It’s not always that you get such a high level of collaboration with VFX. We had a very good time working together.

What was the size of your team on the set?

Depending on the needs, between three and five people, plus some of the vendors coming to visit on set from time to time.

Was there a shot or a sequence that prevented you from sleeping?

All of them, but I don’t sleep much anyway!

How much time did you spend on the shoot?

I worked on the movie from the beginning of May 2010 to mid-December 2010. All of the shoot. I did not do the post as the guys were moving back to LA and I could not follow them. I moved on to X-MEN: FIRST CLASS in early January to supervise the post from London with John Dykstra in Los Angeles.

What will you take away from this new experience?

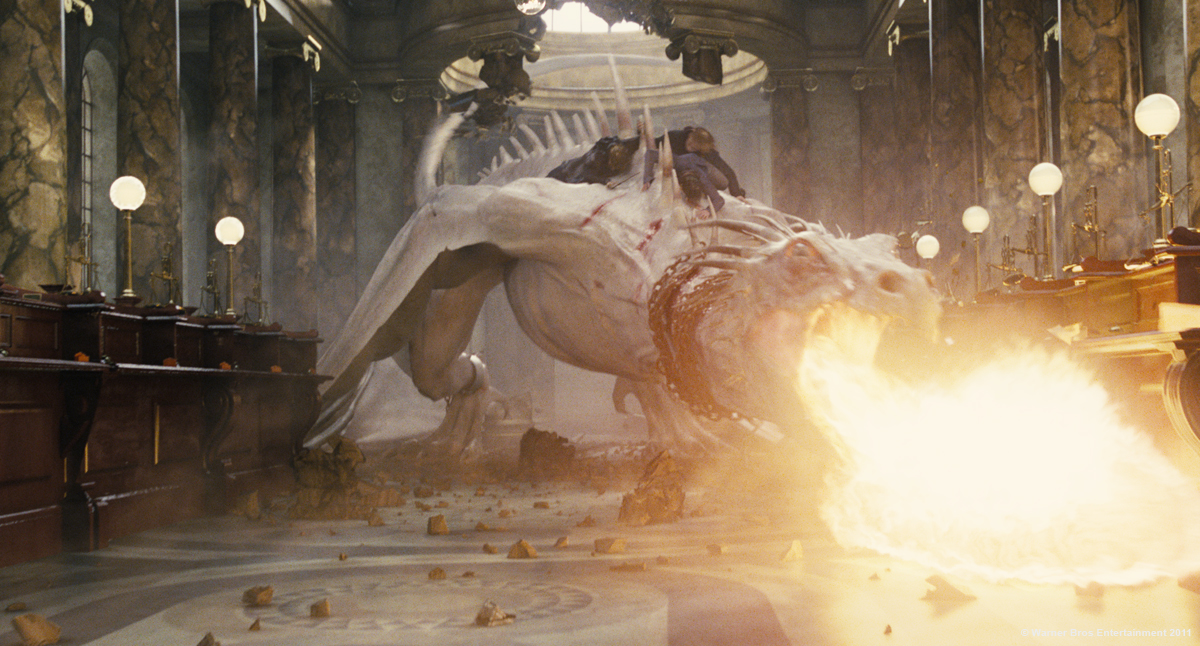

It was great working with Marvel for the first time! I think the Avengers project is a great concept and they’re building it with a lot of style. Working with Joe Johnston was a blessing. I enjoyed THE ROCKETEER so much when I was a kid, and CAPTAIN AMERICA: THE FIRST AVENGER had a lot in common with this film. The entire team was great, from the production design to the actors and all the technical crews. It was a real pleasure to be involved in this project.

Working with Chris Townsend and Mark Soper was awesome. We had a wonderful VFX department team: Jen Underdhal, Lisa Marra, Natalie Lovatt, and Ben Agdhami who were organizing and coordinating our crazy team with lots of dedication and style, and our matchmovers were always up for a challenge! We had a wonderful alchemy on the show and I think it shows in the final product.

What is your next project?

I am currently in Berlin about to begin the shoot of a very ambitious and exciting movie which is an adaptation of a successful English novel.

A big thanks for your time.


© Vincent Frei – The Art of VFX – 2011