Ben Shepherd has worked in visual effects for over 10 years. At MPC, he took part in many projects such as BIG FISH, HARRY POTTER AND THE GOBLET OF FIRE and TIM BURTON’S CORPSE BRIDE. In 2006, he joined Cinesite, where he oversaw films such as X-MEN: THE LAST STAND and THE DAY THE EARTH STOOD STILL, as well as two episodes of the TV series THE PRISONER.

What is your background?

My background is in illustration and graphic design. I have a degree in fine art from Newcastle University. I’ve worked on lots of feature films including ALIEN VS PREDATOR, UNDERDOG, HARRY POTTER AND THE GOBLET OF FIRE and TIM BURTON’S CORPSE BRIDE, to name a few.

How was the collaboration with director Jonathan Liebesman and production VFX supervisor Everett Burrell?

This was the first time we had worked with both Jonathan and Everett. On BATTLE: LOS ANGELES we mainly dealt with Everett, who communicated Jonathan’s feedback to us. This process worked smoothly and Jonathan always seemed very pleased with the work we created.

How did Cinesite get involved in this project?

Director Jonathan Liebesman had seen our Emmy award-winning work on GENERATION KILL, and off the back of that work we were selected for the job.

What are the sequences made at Cinesite?

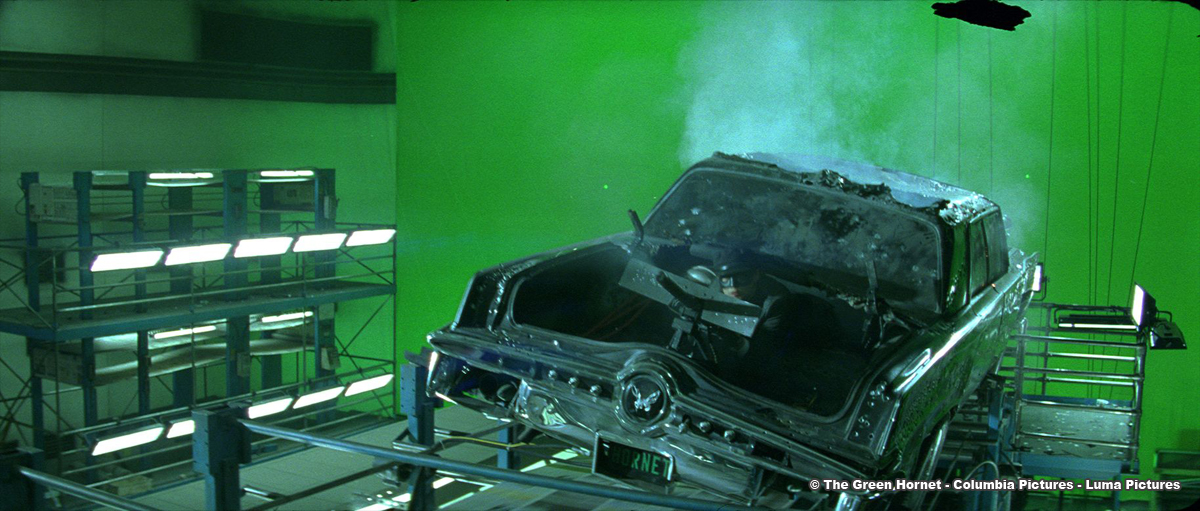

Our key sequences were those where the marines are transported from Camp Pendleton to the forward operating base at Santa Monica airport. The marines fly down the coast through the falling meteorites, witnessing the battle below them and coming under attack themselves. These sequences include CG water splashes, dead bodies, military hardware, meteorites, set extensions, smoke plumes and explosions: it’s major visual effects work showing an epic wave of destruction.

The sequence culminates with the Marines landing at Santa Monica airport, a modern airport that we turned into a military base complete with military aircraft and soldiers, with smoke rings and falling meteorites in the background.

We also did other intermediate shots, such as a big matte painting establishing shot where the Marines return to Camp Pendleton to discover it has been destroyed by the aliens.

We were also responsible for a major section running from where the marines enter the sewer right through to the destruction of the alien aerial. This includes a close-up firefight between the marines and a troop of aliens.

How did you create the impressive smoke trails and their distinctive shape?

The smoke rings are an integral part of the meteor attack/landing at the beginning of the movie and they’ve featured heavily on the posters and publicity for the film.

Jonathan’s idea was that the rings came from a retro-thruster mechanism that would fire on the meteors to slow them down before landing.

We had an extremely talented FX TD, Claire Pegorier, working on them, and she used standard Maya fluids to build the effect. The initial explosion was created using fast-moving particle fluids. The smoke was created using fluid emitted from a torus surface, driven by a torus-shaped volumeAxisField to force the fluid to rotate.

Various other processes were applied to give the rings a more irregular and natural look, to simulate the effect of time on them and, finally, to collapse them.
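To illustrate the kind of rotation a torus-shaped axis field imposes, here is a minimal sketch of a velocity field that makes fluid circulate around the core of a ring. It is not Maya’s volumeAxisField or Cinesite’s actual setup; the function `vortex_ring_velocity` and its `major_radius`, `minor_radius` and `speed` parameters are hypothetical, and the ring is assumed to lie flat in the xy-plane with its axis along z.

```python
import math


def vortex_ring_velocity(p, major_radius=1.0, minor_radius=0.3, speed=1.0):
    """Hypothetical sketch: velocity circulating around the core circle of a torus.

    The torus is centred at the origin in the xy-plane, with its symmetry axis along z.
    Points outside the tube (farther than minor_radius from the core circle) get zero
    velocity. Inside the tube the speed is constant; only the direction varies.
    """
    x, y, z = p
    rho = math.hypot(x, y)
    if rho == 0.0:
        # On the symmetry axis the nearest point on the core circle is undefined.
        return (0.0, 0.0, 0.0)
    # Unit vector pointing radially outward in the xy-plane.
    ex, ey = x / rho, y / rho
    # Offset from the nearest point on the core circle, in (radial, z) coordinates.
    dr = rho - major_radius
    dz = z
    dist = math.hypot(dr, dz)
    if dist == 0.0 or dist > minor_radius:
        return (0.0, 0.0, 0.0)
    # Rotate the offset by 90 degrees in the radial/z plane to get the tangent of the
    # small circle around the core: fluid rises on the inner side, rolls outward over
    # the top and sinks on the outer side.
    tr = dz / dist
    tz = -dr / dist
    # Map the radial component back to x and y.
    return (speed * tr * ex, speed * tr * ey, speed * tz)
```

Sampling such a function on the fluid grid and adding it to the velocity each step would give a rough approximation of the swirling motion; the irregularity, ageing and collapse described above would need further, separate processes.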

How did you get the idea for this very iconic smoke ring?

The smoke rings themselves were the hardest thing to do. I was on set with Everett when they were filming some massive explosions. One of them resulted in a perfect smoke ring floating up into the sky. Everett turned to me and said, “If you made that in CG, no-one would believe it.” Then Jonathan came over and said excitedly: “I want that in my movie!” So we were challenged to replicate it.

The smoke ring simulations were created by Claire Pegorier, and Michael Wortmann wrote the shader. Jonathan laid down a challenge that he could spot any CG smoke, but he never quibbled about any of our smoke, which I guess is a sign of his confidence in our work.

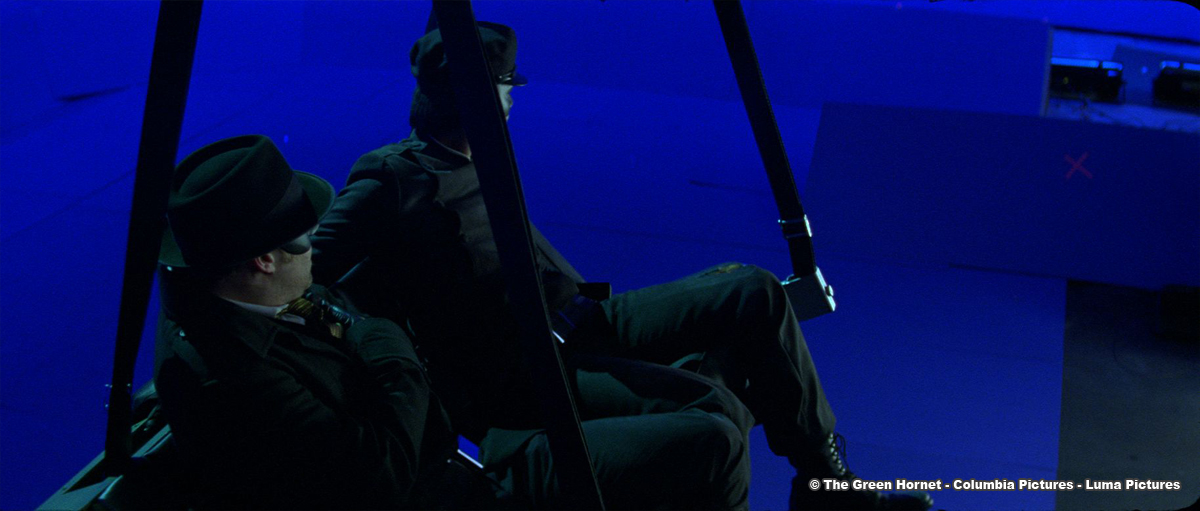

Can you tell us about the shooting of the helicopters sequence?

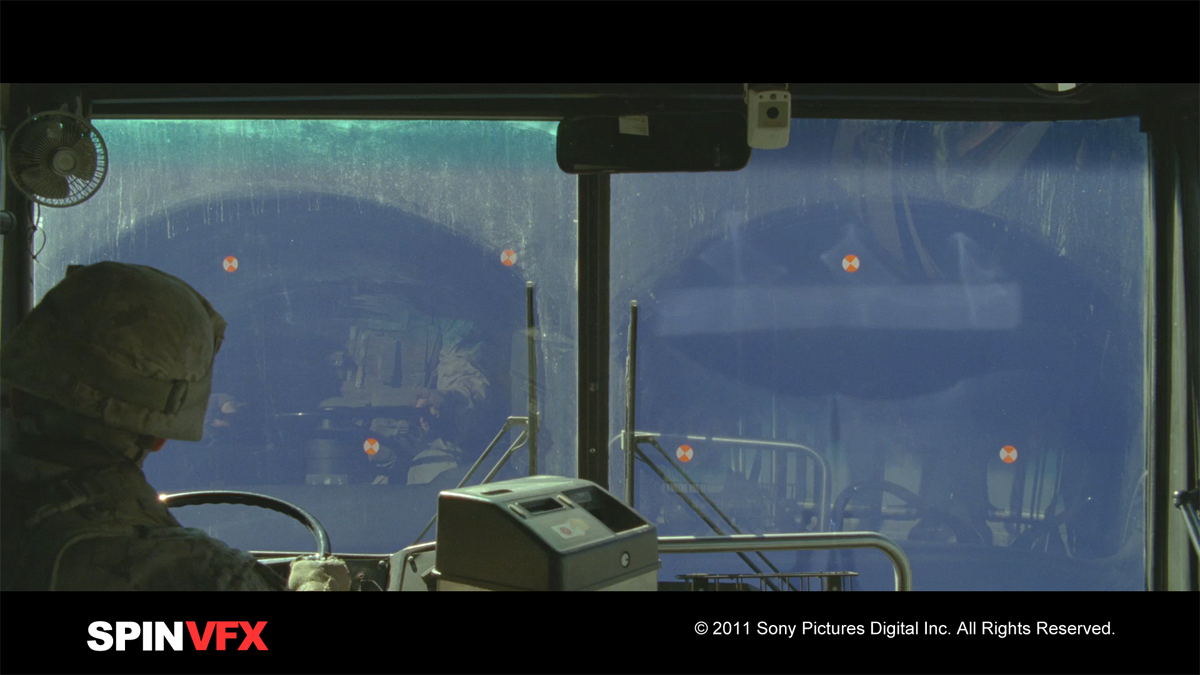

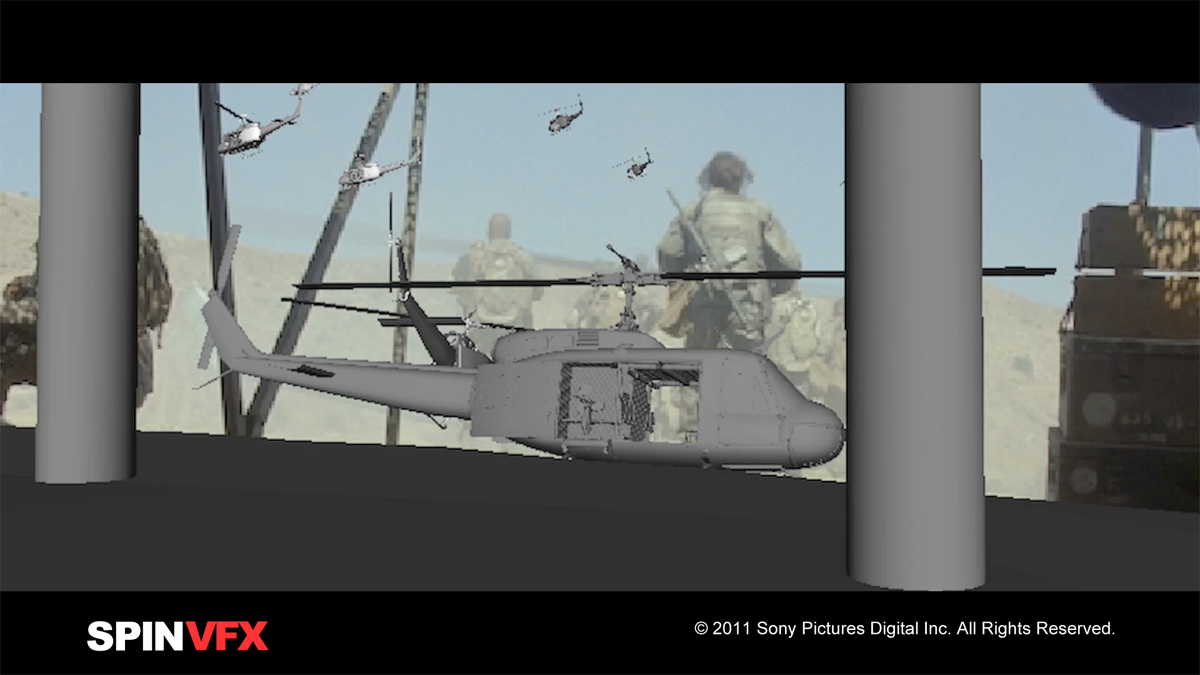

This was the sequence where the Marines leave Camp Pendleton to tackle the invasion of Santa Monica airport. The plate was shot with three helicopters, which we built up to a formation of 12 by adding CG ones from our stock of assets. The airfield was further populated by tanks, light armoured vehicles and other armour, as well as atmospherics such as smoke streams and distant smoke rings.

How did you create this armada of helicopters? Was there a real helicopter in those shots?

There were three real helicopters in almost every live-action plate. We already had a CH46 model, which we had created for our work on GENERATION KILL; we customised it and referenced the live-action plate to make sure they matched. It is always a blessing to have real vehicles in shot, as a reference for lighting and so on.

Did you encounter any problems with the shooting style, especially for tracking?

Not really. Much of the handheld camera shake was captured in principal photography, but we also added some in the compositing phase. It did cause some delays with tracking, but nothing insurmountable.

What were your references for creating this devastated Los Angeles?

We had matte paintings and digital stills, which we composited into the shots and animated, adding layers of smoke, fire and debris.

Can you explain in detail how you created those really impressive shots showing the mass destruction of Los Angeles and the beach?

Initially, we created a digital matte painting of the city, extending the damage into the distance, and adding smoke. The fog bank the aliens hide behind is a 3D effect in some of the close shots, and was created in the digital matte painting in some of the wider angles. We also added 3D meteorites, smoke ring elements and water splashes where necessary.

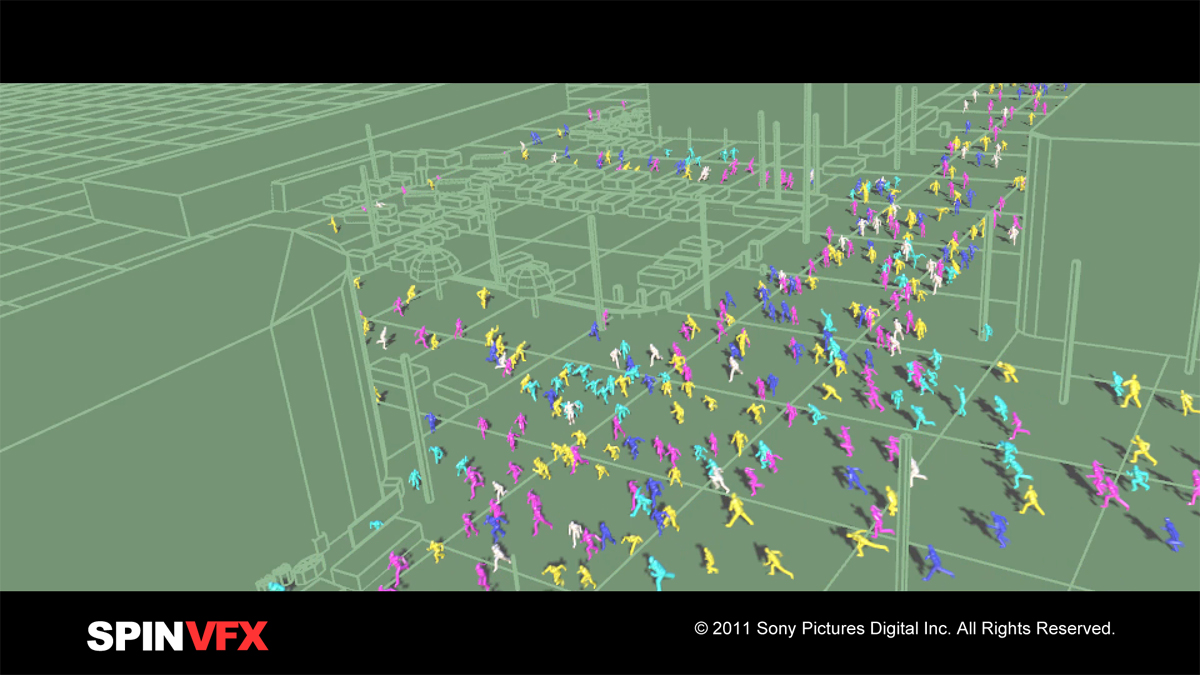

In addition to all of this, we added damaged and wrecked vehicles on the ground, crowds of bodies on the ground created in Massive, 2D and 3D explosions, 2D flak bursts and tracer fire, 3D A10 ground attack aircraft, 3D police cars blocking roads, Cobras firing missiles, 3D CH46 helicopters and, in some shots, even maneuvering troops created in Massive. Pretty much everything is in there; in some shots there are over fifty layers of effects elements!


Is the airport at the forward base real, or is it a full CG shot?

When they land at the Forward Operating Base, that’s a live-action shot of Santa Monica airport with live-action helicopters. Everything else, including the 100 or so other airplanes on the runway, the troops and the explosions in the distance, is CG. When they return to the FOB later, the whole environment is CG, including all the vehicles.


How did it feel to take part in the destruction of a city like Los Angeles? Your artists must have loved it.

We loved it – it’s not every day you get to blow up LA!

Tell us about the creatures and their ships. How did you create and animate them?

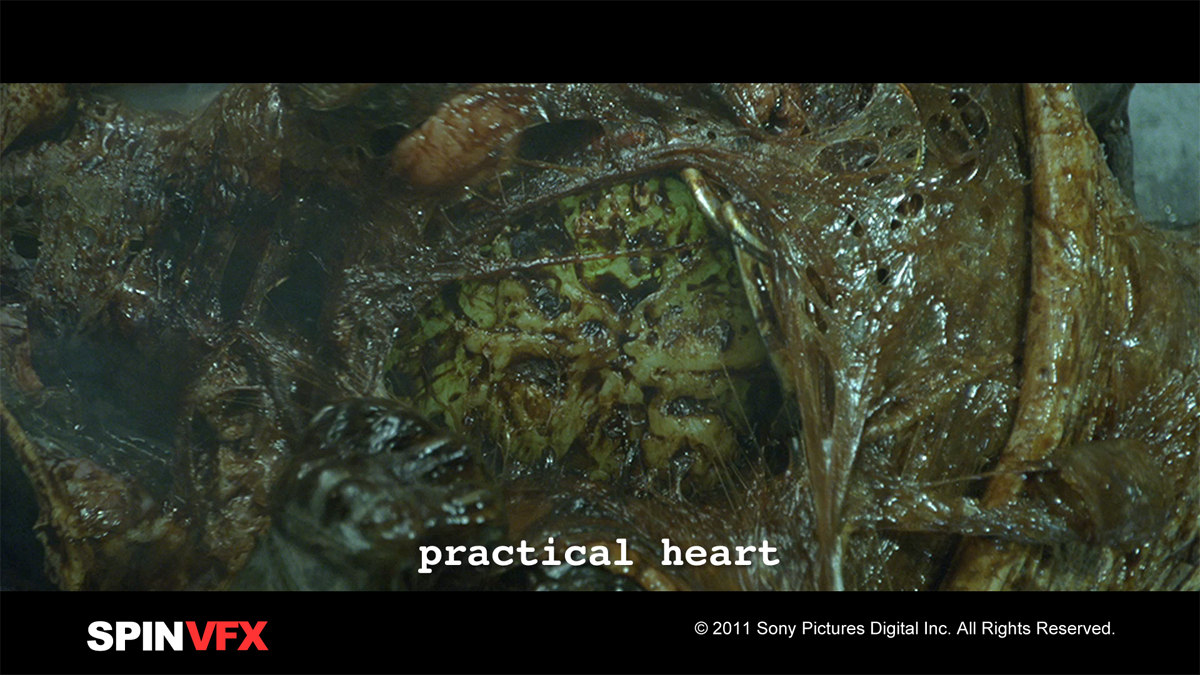

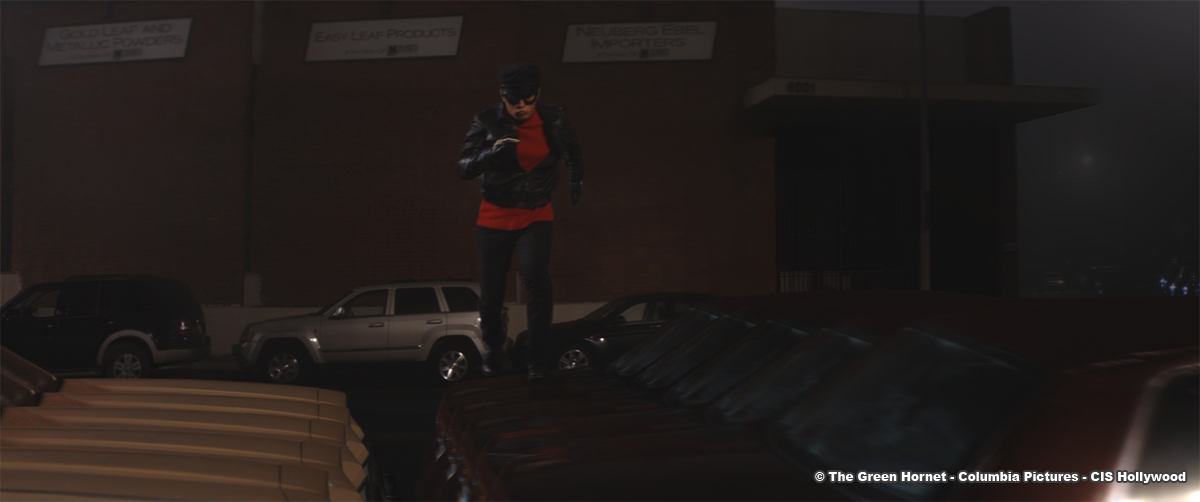

We were responsible for creating the Commander Alien, which was designed to have human mannerisms so the audience wouldn’t at first identify the figure as an alien. The model was keyframe animated, and to make the Commander stand out from the other aliens, he was heavily textured and given a greasy, sweaty look and feel.

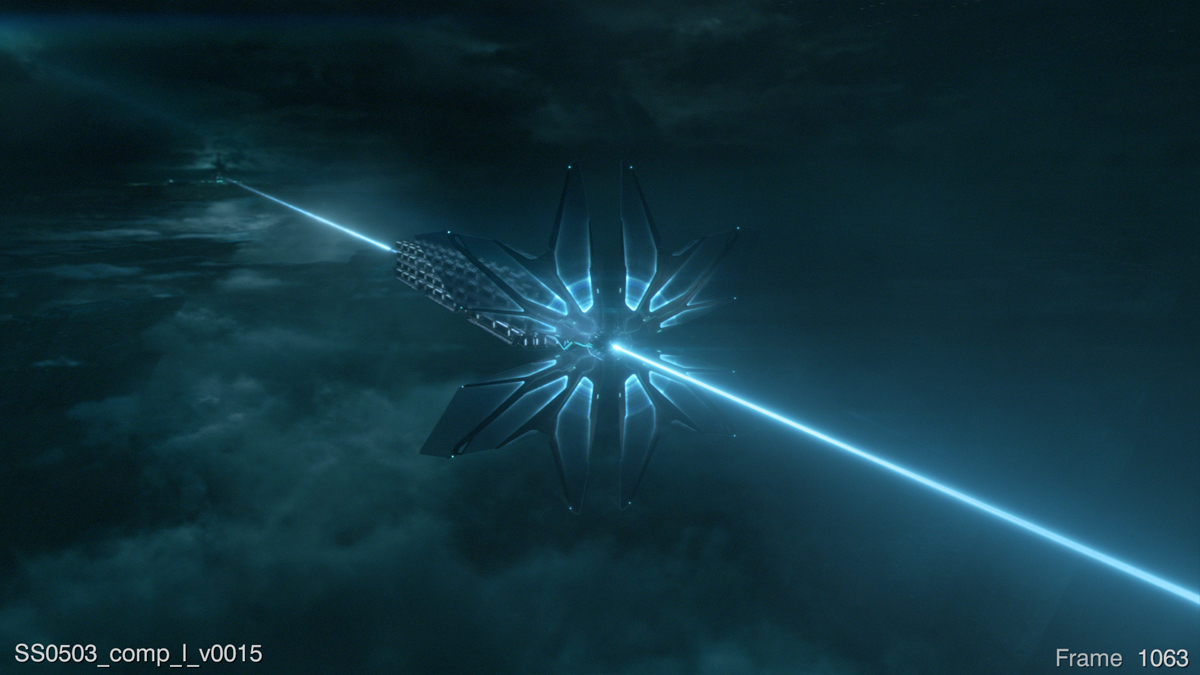

The alien hovercraft was another element which our team generated. Based on original designs by Paul Gerrard, it features in the film as a gun platform for the aliens to fire from.

How was the collaboration between the different VFX vendors?

We provided all the other facilities with full 360-degree panoramas of the destroyed Los Angeles. These were created in Photoshop as fairly traditional digital matte paintings, which were composited in Nuke as a 3D environment. We also shared our Commander Alien asset with Hydraulx. We were the only UK facility on the project, and everything worked smoothly with the other facilities, which were all US-based.

What was the biggest challenge on this project?

Probably the smoke rings.

Was there a shot or a sequence that prevented you from sleeping?

Not really, to be honest. The whole production for us went very smoothly.

How long did you work on this film?

We worked on the project for 10 months, including six weeks on set in Baton Rouge, Louisiana.

How many shots have you made and what was the size of your team?

We completed around 110 shots, and the team grew to about 34 people at the peak of the project.

What do you keep from this experience?

On the project I’m currently working on, we are going back to our work on BATTLE: LOS ANGELES as a reference for smoke and fire. We’re still using our proprietary in-house smoke tools on other shows.

We’re very proud of the work we did on BATTLE: LOS ANGELES, and it has been well received by the visual effects community, which is always a bonus.

What is your next project?

I’m currently working on the Disney/Pixar film JOHN CARTER OF MARS, which is due for release in 2012.

What are the four movies that gave you your passion for cinema?

STAR TREK: THE WRATH OF KHAN, JAWS, BLADE RUNNER and TIME BANDITS.

A big thanks for your time.

// WANT TO KNOW MORE ?

– Cinesite: Official website of Cinesite.

– fxguide: Complete article about BATTLE LOS ANGELES on fxguide.

© Vincent Frei – The Art of VFX – 2011