After beginning his career at Digital Film and MPC, Paul Franklin helped to found Double Negative in 1998. Since then he has supervised projects such as THE LEAGUE OF EXTRAORDINARY GENTLEMEN and HARRY POTTER AND THE ORDER OF THE PHOENIX, and he has supervised every Christopher Nolan film since BATMAN BEGINS. In the following interview, he talks to us about his work on INCEPTION.

What is your background?

I originally studied sculpture at university in the 80s, which is where I first started experimenting with computer graphics. I combined this with the student theatre and magazine work I was doing at the time, which then led me into filmmaking and animation. I worked in video games for a while as an animator/designer and then moved into film and television in the early 90s. In 1998 I helped to set up Double Negative VFX.

How was your collaboration with Christopher Nolan, with whom you had already worked on BATMAN BEGINS and THE DARK KNIGHT?

Chris is a fantastic director to work with – he is very demanding, always pushing you to raise the bar in every area, but he also gives you a lot of feedback and involves you in the creative discussion, which makes you feel part of the whole filmmaking process. Chris told me at the beginning of INCEPTION that it would be an all-consuming experience, and he was right!

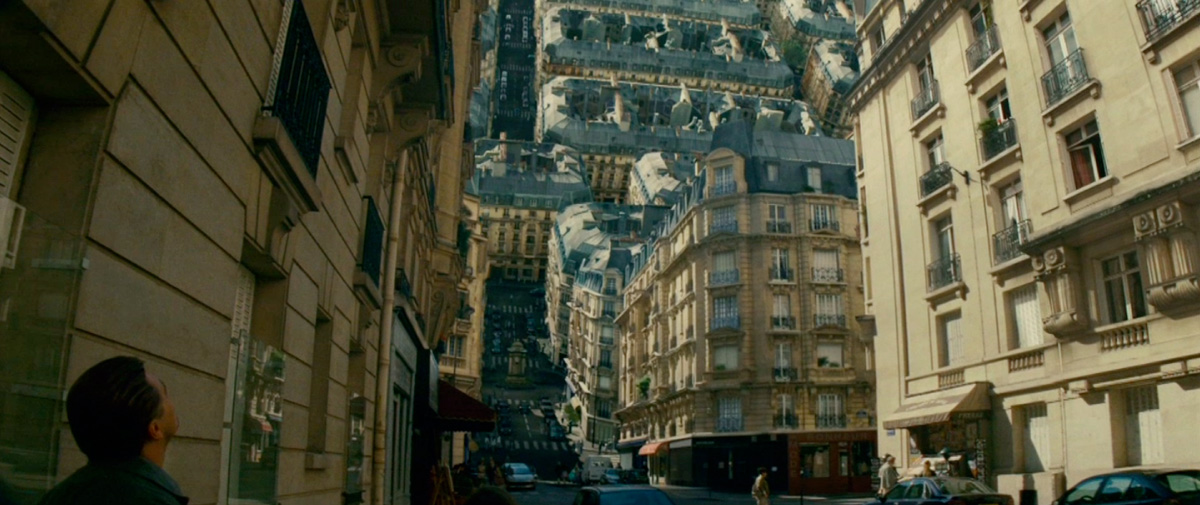

Can you explain how you created the sequence in which Paris is folded over itself?

Returning to the Paris environment, Ariadne, played by Ellen Page, demonstrates her new-found ability to control the dreamworld by folding the streets in on themselves to form a giant “cube city”.

The Dneg VFX team spent a week documenting the Paris location where the main unit was scheduled to shoot. Seattle-based Lidar VFX Services did a great job scanning all the buildings and then delivering highly detailed data from which Double Negative built a series of Parisian apartment blocks. It wasn’t possible to get above the buildings, so the Dneg VFX modellers sourced photographs of typical Paris rooftops to fill in the missing areas. We implemented the new Ptex texture mapping techniques in RenderMan, which allowed the CG team to avoid the laborious UV coordinate mapping usually associated with models of this type. The final folded streets featured fully animated cars and people – anything that’s not on the flat in the final images is CG.
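Per-face texture mapping (Ptex, developed at Walt Disney Animation Studios) stores a separate texture for every face of a mesh and addresses it through that face's own parameter space, which is why no UV layout is needed. The sketch below illustrates only that lookup idea in plain Python. The class names are hypothetical, it ignores the cross-face filtering a real implementation performs, and it is neither the Ptex library API nor Double Negative's RenderMan setup.

```python
from dataclasses import dataclass


@dataclass
class FaceTexture:
    """Texels for one quad face, addressed by that face's own (u, v) in [0, 1]."""
    width: int
    height: int
    texels: list  # row-major, length width * height

    def lookup(self, u: float, v: float):
        # Nearest-texel lookup; clamp so u == 1.0 or v == 1.0 stays in range.
        x = min(int(u * self.width), self.width - 1)
        y = min(int(v * self.height), self.height - 1)
        return self.texels[y * self.width + x]


class PerFaceTextureMap:
    """Hypothetical container: one independently sized texture per face id."""

    def __init__(self) -> None:
        self.faces: dict[int, FaceTexture] = {}

    def set_face(self, face_id: int, texture: FaceTexture) -> None:
        self.faces[face_id] = texture

    def lookup(self, face_id: int, u: float, v: float):
        # No global UV atlas: the face id selects the texture, (u, v) is local to that face.
        return self.faces[face_id].lookup(u, v)


# Usage: a detailed facade face and a low-resolution rooftop face on the same model.
tex_map = PerFaceTextureMap()
tex_map.set_face(0, FaceTexture(2, 1, ["window", "stone"]))
tex_map.set_face(1, FaceTexture(1, 1, ["zinc"]))
print(tex_map.lookup(0, 0.75, 0.5))  # stone
print(tex_map.lookup(1, 0.3, 0.9))   # zinc
```

Because each face carries its own resolution, a heavily detailed facade and a plain rooftop can coexist on one model without anyone packing them into a shared UV space.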

How did you create the impressive scene at the cafe in Paris?

Early on in INCEPTION, Ariadne is taken into a dreamworld version of Paris by Cobb, played by Leonardo DiCaprio. When Ariadne realises that she is actually dreaming she panics and the fabric of the dream starts to unravel, disintegrating violently and flying apart in all directions.

Special Effects Supervisor Chris Corbould created a series of in-camera explosions using air mortars to blast lightweight debris into the Paris street location. Whilst giving an extremely dynamic and violent effect on film, the system was safe enough that Leo and Ellen were able to sit in the middle of the blasts as the cameras rolled. Director of Photography Wally Pfister used a combination of high-speed film and digital cameras to capture the blasts at up to 1,000 frames per second, which made the turbulent debris look as if it were suspended in zero gravity, giving the impression that the very physics of the dreamworld were failing.

Starting with a rough cut of the live action, the Double Negative VFX animation team used the in-house Dynamite dynamics toolset to extend the destruction to encompass the whole street. The compositors retimed the high-speed photography to create speed ramps, so that every explosive event started in real time before ramping down to slow motion, which further extended the idea of abnormal physics. As the destruction became more widespread, the team added secondary interaction within the dense clouds of debris to sell the idea of everything being suspended in a strange, weightless fluid medium.
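A speed ramp can be thought of as a retime curve that maps each output frame to a position in the captured footage. The minimal Python sketch below assumes material shot at 1,000 fps (the top speed quoted above) for playback at 24 fps; the function names, frame counts and smoothstep easing are illustrative assumptions, not Double Negative's compositing setup. At real speed each output frame jumps about 41.7 captured frames, then the step eases down to one captured frame per output frame, which is full slow motion.

```python
def smoothstep(t: float) -> float:
    """Ease-in/ease-out curve, clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def speed_ramp(source_fps: float, playback_fps: float,
               realtime_frames: int, ramp_frames: int,
               total_frames: int) -> list[float]:
    """Return the (possibly fractional) source-frame position for each output frame."""
    realtime_step = source_fps / playback_fps  # captured frames per output frame at real speed
    slowmo_step = 1.0                          # every captured frame shown: full slow motion
    positions: list[float] = []
    pos = 0.0
    for i in range(total_frames):
        positions.append(pos)
        if i < realtime_frames:
            step = realtime_step
        else:
            # Ease the step size from real time down to full slow motion.
            t = (i - realtime_frames + 1) / ramp_frames if ramp_frames > 0 else 1.0
            step = realtime_step + (slowmo_step - realtime_step) * smoothstep(t)
        pos += step
    return positions


curve = speed_ramp(source_fps=1000, playback_fps=24,
                   realtime_frames=6, ramp_frames=12, total_frames=48)
print(round(curve[1], 2))               # 41.67 captured frames per output frame
print(round(curve[-1] - curve[-2], 2))  # 1.0 captured frame per output frame
```

During the ramp the positions fall between captured frames, so a compositor would still need some form of frame interpolation to render them.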

What did you do in the scene in which Ellen Page turns large mirrors on a bridge in Paris?

Ariadne continues her exploration of the limits of the dreamworld by creating a bridge out of the echoing reflections between two huge mirrors.

We had scouted a bridge over the River Seine in Paris (the Bir-Hakeim bridge, which had previously featured in LAST TANGO IN PARIS) that had a really interesting structure: a Metro rail deck overhead with a pedestrian walkway underneath, framed by a series of cast-iron arches. Chris wanted the bridge to be revealed in an interesting way as part of Ariadne’s playful exploration of her new-found ability to control the dreamworld. During pre-production we worked up various concept animations of the bridge assembling itself in a blur of stop-frame construction, but it always ended up looking slightly twee and overly magical – Chris was interested in something elegant that, whilst simple in concept, would defy easy analysis by the viewer.

In an early discussion I mentioned that from certain angles the arches resembled the infinite reflections generated by two opposed mirrors. Chris thought this was an interesting idea and eventually asked the question “what could you do on set with a really big mirror?”. I got together with Special Effects Supervisor Chris Corbould, who got his team to build an eight-foot by sixteen-foot mirror that could be swung shut on a hinge, effectively forming a huge reflecting door. Dneg then got to work on a series of animations that explored the range of what we might be able to get in camera with this rig, and we arrived at a series of camera setups which then formed the basis for Chris and Wally’s shooting plan.

This gave us a great start in VFX, but as big as the mirrored door was (its size was limited mainly by weight, as it was already up to 800 lbs) we still needed to do a lot of work. The compositing team set about removing the support rig and crew reflections and then adding in the infinite secondary reflections as well as the surrounding environment. The result is a series of shots so subtle in their execution that you’re not really aware of any digital intervention until the very last moments of the sequence. In fact, most of what you’re looking at is digital, with only the actors being real – even their reflections are digital doubles in many cases.

Were you involved in the extreme slow-motion shots, and what did you do on them?

We shot slow motion using both the Photosonics 4ER (which uses standard 35mm film) and the Phantom digital camera. Slow-motion photography involves a trade-off between speed and quality – the faster the camera runs (and thus the slower the resulting image), the lower the quality of the picture. Our solution was to shoot at as high a frame rate as possible whilst still maintaining quality, and then slow the footage down even further in post using various respeed tools inside Dneg’s in-house version of Shake. Some things were impossible to shoot in slow motion, such as the falling rain in the wide shots of the van coming off the bridge, so instead we created all of the falling rain as VFX animation.
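The arithmetic behind this approach is straightforward: footage captured at a frame rate f_c and played back at f_p runs f_c / f_p times slower than life, so 1,000 fps material (the ceiling mentioned for the cafe sequence) shown at 24 fps is already about 41.7 times slower, and any extra slowdown added in post must be synthesised from frames that were never captured. The Python sketch below shows the crudest way of doing that, linear frame blending between neighbouring frames, on tiny "frames" represented as lists of pixel values. It is an illustrative assumption only: production respeed tools generally rely on motion-estimated (optical-flow) interpolation, and nothing here describes Dneg's in-house Shake tools.

```python
import math


def slowdown_factor(capture_fps: float, playback_fps: float) -> float:
    """How many times slower than real time the footage plays back."""
    return capture_fps / playback_fps


def blend(frame_a: list[float], frame_b: list[float], t: float) -> list[float]:
    """Linear cross-dissolve: t = 0 gives frame_a, t = 1 gives frame_b."""
    return [(1.0 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def retime(frames: list[list[float]], extra_slowdown: float) -> list[list[float]]:
    """Stretch a clip by `extra_slowdown`, synthesising in-betweens by frame blending."""
    last = len(frames) - 1
    out_count = math.floor(last * extra_slowdown) + 1
    out = []
    for i in range(out_count):
        src = i / extra_slowdown         # fractional source position
        lo = min(math.floor(src), last)  # captured frame before it
        hi = min(lo + 1, last)           # captured frame after it
        out.append(blend(frames[lo], frames[hi], src - lo))
    return out


base = slowdown_factor(1000, 24)
print(round(base, 1))      # 41.7x from the high-speed camera alone
print(round(base * 2, 1))  # 83.3x after a further 2x respeed in post

clip = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]  # three tiny two-pixel "frames"
print(retime(clip, 2))  # [[0.0, 0.0], [0.5, 1.0], [1.0, 2.0], [1.5, 3.0], [2.0, 4.0]]
```

Blending produces ghosted double images on fast motion, which is one reason to capture as high a frame rate as quality allows before resorting to synthesised frames.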

Can you explain to us how the train that attacks the heroes was shot? What did you do on this sequence?

The train is pretty much all in camera; in other words, we really had a full-size train on the street crashing through the cars. The special effects and art departments built the shell of the train on a truck body – Double Negative then removed the truck’s wheels and added metal train wheels. The fractured road surface was created in CG, and additional compositing work added shadows to the building facades to enhance the overcast, rainy-day look.

How was the amazing corridor fight shot, and how did you create this sequence?

The “spinning corridor and hotel room” sequence was all in camera. Chris Corbould’s special effects team built a huge rotating set to create the effect of Arthur (Joseph Gordon-Levitt) and the sub-security guards running over the walls and ceilings. The same principle applied to the scene inside the spinning hotel room. The only VFX work was a simple removal of a camera rig from the background of the final shot in the sequence.

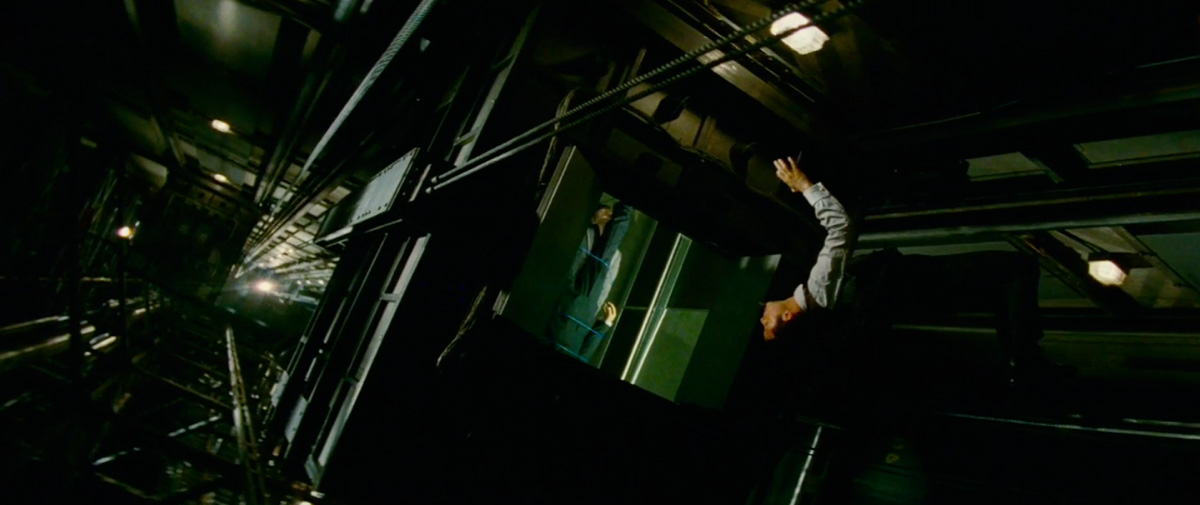

How did you create the zero-gravity effect?

The zero-g look was achieved through cleverly designed stunt and special effects rigs, which were then removed digitally by Double Negative in post. For the zero-g fight, where Arthur grapples with the security agent, a vertical version of the hotel corridor set was built and the performers were dropped into it on wires, with the camera filming them from the bottom end of the set. For the most part the actors hid their own wires, but where the wires became visible they were painted out, with CG set extensions used to fill any gaps left behind. Much of the rest of the zero-g work, such as the elevator shaft scenes, was achieved on a horizontal set with Joseph Gordon-Levitt held in a special “see-saw” rig or suspended from a small crane. Once again, Dneg removed all the rigs and repaired the backgrounds where necessary.

Did you create digital doubles for the corridor and zero-gravity sequences?

The only digital double work in the zero-g sequences is a brief moment when Arthur is tying up the sleeping dreamers, where we replaced the heads of two of the stunt actors with CG heads of Cillian Murphy (Fischer) and Ken Watanabe (Saito). Everything else is done with real people!

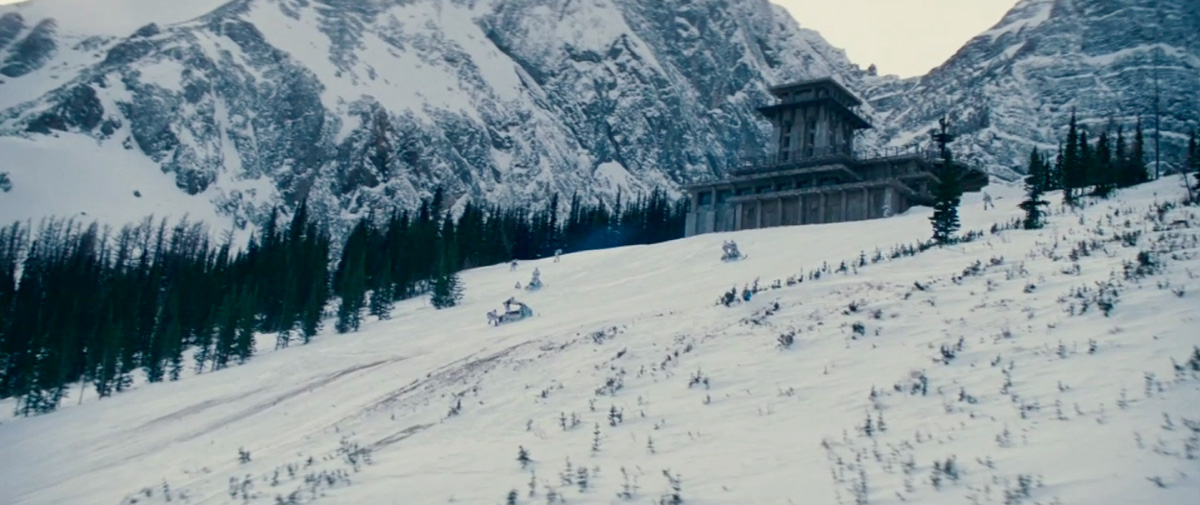

In the mountain sequence, was the landscape real or is it all CG?

The landscapes are all real, save for a small bit of terrain at the base of the Fortress in the wide shots. The location of the snow scenes (Kananaskis Country in Alberta, Canada) was absolutely spectacular – the only thing we had to do was add the digital Fortress in the wide shots and paint out the odd building in the background.

How did you create the avalanche?

The avalanche is real. The special effects team collaborated with the local mountain patrol to trigger avalanches with strategically placed dynamite charges. We added the Fortress in the background and the little falling figures on the cliff face, but otherwise it’s all the real deal.

How was the collaboration with New Deal Studios?

New Deal are a great bunch of guys. I’ve worked with them directly before, on THE LEAGUE OF EXTRAORDINARY GENTLEMEN and of course THE DARK KNIGHT, and Double Negative’s relationship with New Deal goes right back to PITCH BLACK, our first movie, in 1998. Ian Hunter, New Deal’s VFX supervisor, did a fantastic job with his team, creating a sixth-scale version of the central section of the Fortress and then rigging it for a dynamic collapse and pyrotechnic destruction.

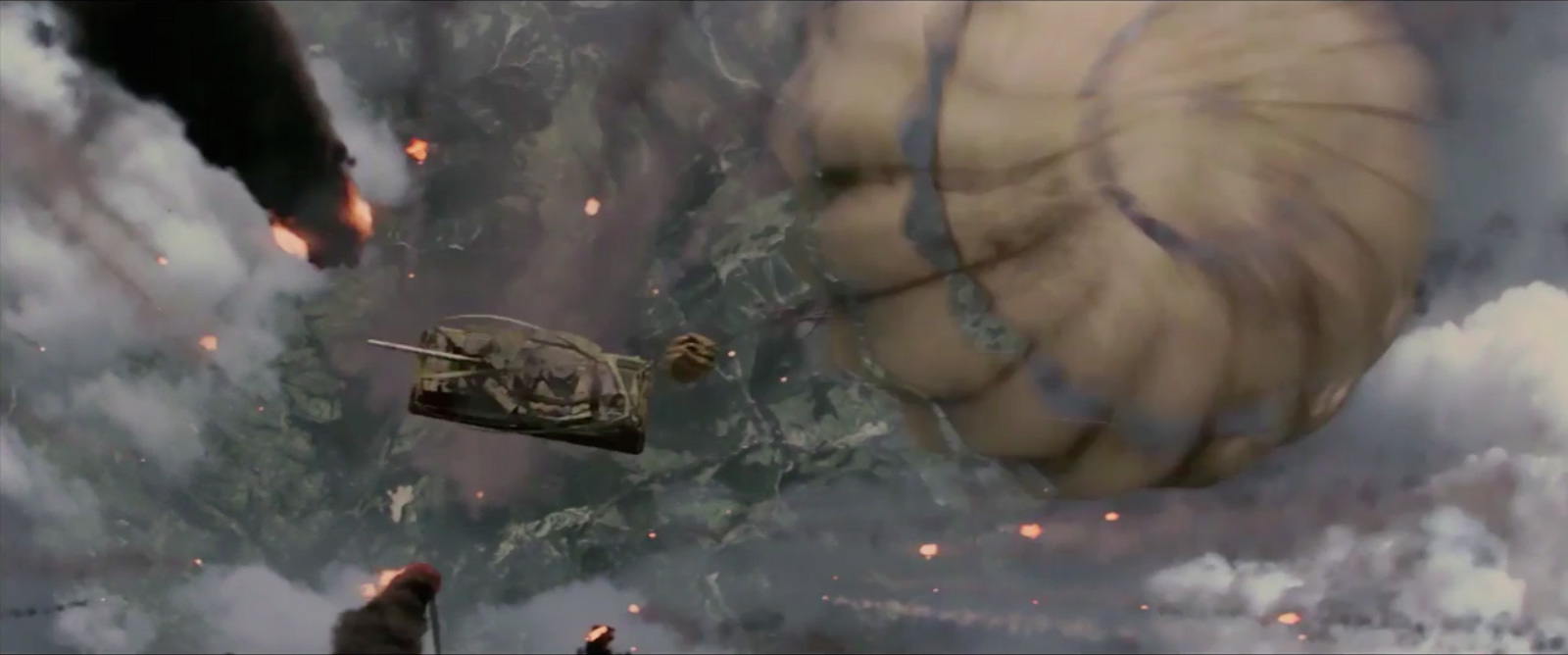

Can you tell us about the sequence at the edge of the ocean? How did you create this city that is falling apart?

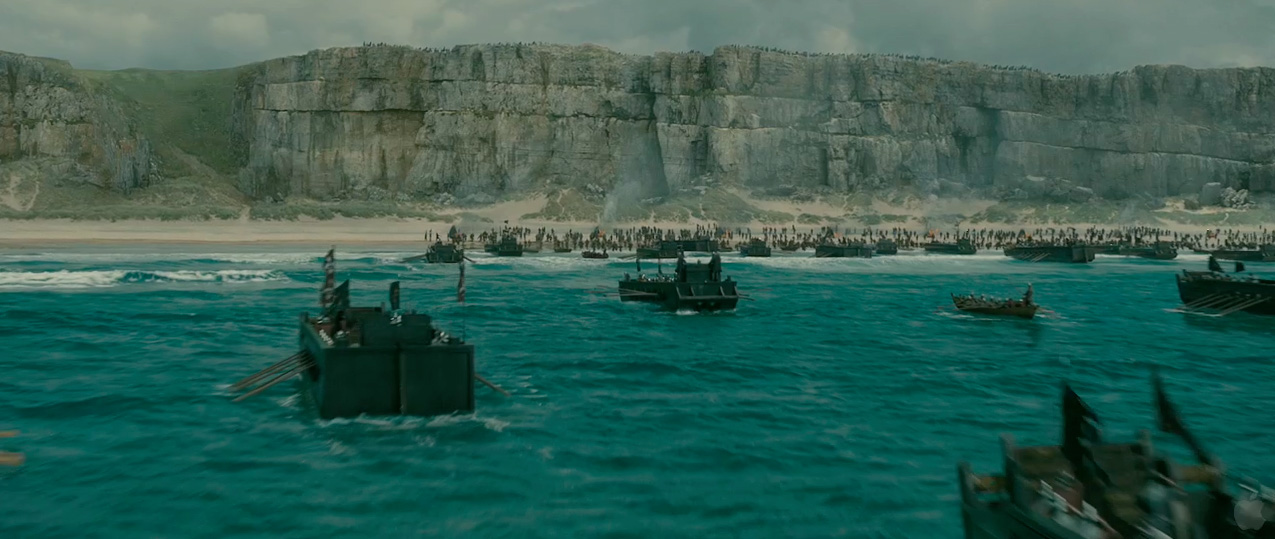

The Limbo City shoreline is, perhaps, the scene that has the most obvious symbolism of any of the dream environments. The sea represents Cobb’s subconscious mind and the city is the mental construct that he built within it – having once been beautiful and pristine, the city is now mutating and crumbling back into the subconscious sea, symbolising Cobb’s state of mental collapse. Chris wanted the city to take on the aspect of a glacier, slowly sliding out into the sea with giant architectural “icebergs” splitting off and drifting away in the water.

During pre-production both the Art Department and VFX worked on concept designs for Limbo City, devoting particular attention to the decaying shoreline. However, even after several weeks’ work we weren’t getting anything that Chris felt happy about – everything was just a little bit too literal. We discussed the idea of Limbo having started out as an idealistic modernist city that has begun to collapse back into the sea of Cobb’s subconscious. We started with the basic concepts: a city of modern buildings and a glacier. We took a simple polygonal model of the glacier, built from photographic reference, and developed a Maya-based space-filling routine that populated the interior with basic architectural blocks, with the height of each block determined by the elevation of the glacier at that point. We then began to develop a series of increasingly complex rules that added street divisions, varied the scale of the buildings or added damage, all determined by samples taken from the glacial model. After each new rule was added, we reviewed the resulting structure and then refined the process.
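The space-filling idea can be sketched outside Maya too. The Python below is a minimal illustration that assumes the glacier is represented as a 2D grid of elevation samples (a heightmap) rather than the polygonal model described above; the block and street sizes, the `Block` type and the two example rules (`scale_rule`, `damage_rule`) are hypothetical. It tiles the footprint with blocks separated by streets, sets each block's height from the elevation sampled at its centre, and then applies a list of rules in order, echoing the add-a-rule-and-review loop described here.

```python
from dataclasses import dataclass


@dataclass
class Block:
    x: int          # grid column of the block's corner
    y: int          # grid row of the block's corner
    size: int       # footprint edge length in grid cells
    height: float   # building height
    damaged: bool = False


def sample(heightmap: list[list[float]], x: int, y: int) -> float:
    """Elevation of the glacier surface at grid cell (x, y)."""
    return heightmap[y][x]


def fill_with_blocks(heightmap, block_size: int, street_width: int) -> list[Block]:
    """Space-filling pass: tile the footprint with blocks separated by streets."""
    rows, cols = len(heightmap), len(heightmap[0])
    pitch = block_size + street_width  # a street separates neighbouring blocks
    blocks = []
    for y in range(0, rows - block_size + 1, pitch):
        for x in range(0, cols - block_size + 1, pitch):
            h = sample(heightmap, x + block_size // 2, y + block_size // 2)
            if h > 0.0:  # zero elevation = outside the glacier, leave empty
                blocks.append(Block(x, y, block_size, h))
    return blocks


def scale_rule(factor: float):
    """Hypothetical rule: exaggerate building heights by a constant factor."""
    def apply(block: Block, heightmap) -> Block:
        block.height *= factor
        return block
    return apply


def damage_rule(threshold: float):
    """Hypothetical rule: flag blocks on low ground (near the sea) as damaged."""
    def apply(block: Block, heightmap) -> Block:
        centre = sample(heightmap, block.x + block.size // 2, block.y + block.size // 2)
        block.damaged = centre < threshold
        return block
    return apply


def build_city(heightmap, block_size, street_width, rules) -> list[Block]:
    blocks = fill_with_blocks(heightmap, block_size, street_width)
    for rule in rules:  # each added rule refines the previous result
        blocks = [rule(b, heightmap) for b in blocks]
    return blocks


# Toy 6x6 heightmap that rises away from one corner.
terrain = [[float(x + y) for x in range(6)] for y in range(6)]
city = build_city(terrain, block_size=2, street_width=1,
                  rules=[scale_rule(10.0), damage_rule(3.0)])
for b in city:
    print(b.x, b.y, b.height, b.damaged)
```

Keeping the rules as an ordered list of small functions makes it cheap to add one, re-run the whole pass and review the result, which is the kind of iteration a procedural layout relies on.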

Once we had reached a certain level of complexity, our VFX art director developed a series of paintings from the CG renders provided by the procedural system, and these then fed back into the development of the rules. In this way we arrived at a city layout that had familiar features such as squares, streets and intersections, but which had a totally unique structure that felt more like a natural landform – a cliff being washed into the waves, with architectural “icebergs” floating out to sea. The VFX animation team then used Houdini to create the collapsing architecture, which was referenced primarily from natural history footage of glaciers rather than from building demolitions, and added giant splashes with Dneg’s proprietary Squirt fluids system. The hero shot from the sequence, featured in many of the online trailers, was developed from a helicopter plate that we shot with the INCEPTION aerial unit in Morocco – that’s actually Leo and Ellen walking through the waves. For the final look of the city shoreline we drew on lots of reference of derelict housing developments, as well as bomb-damaged buildings in Iraq and other war zones.

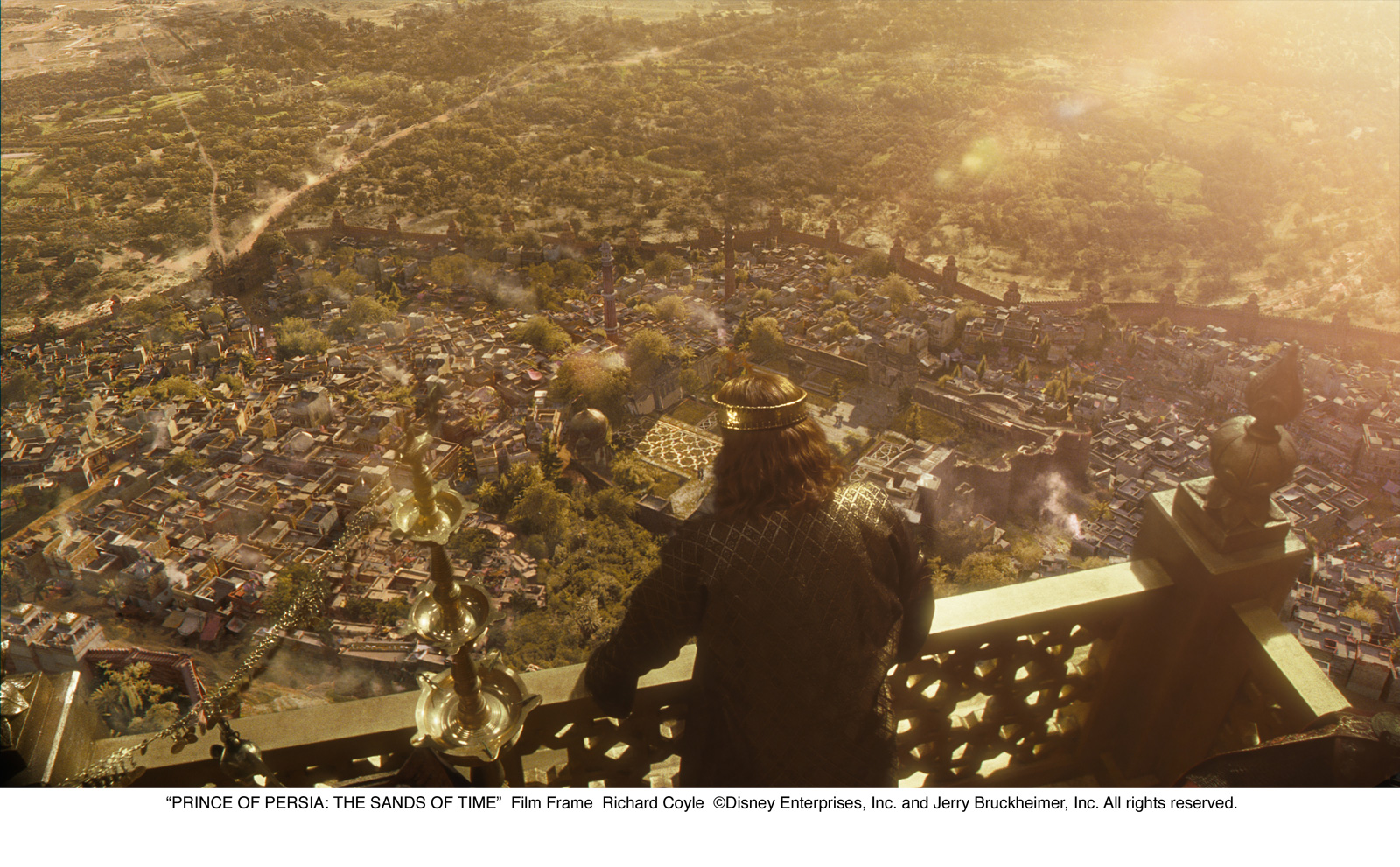

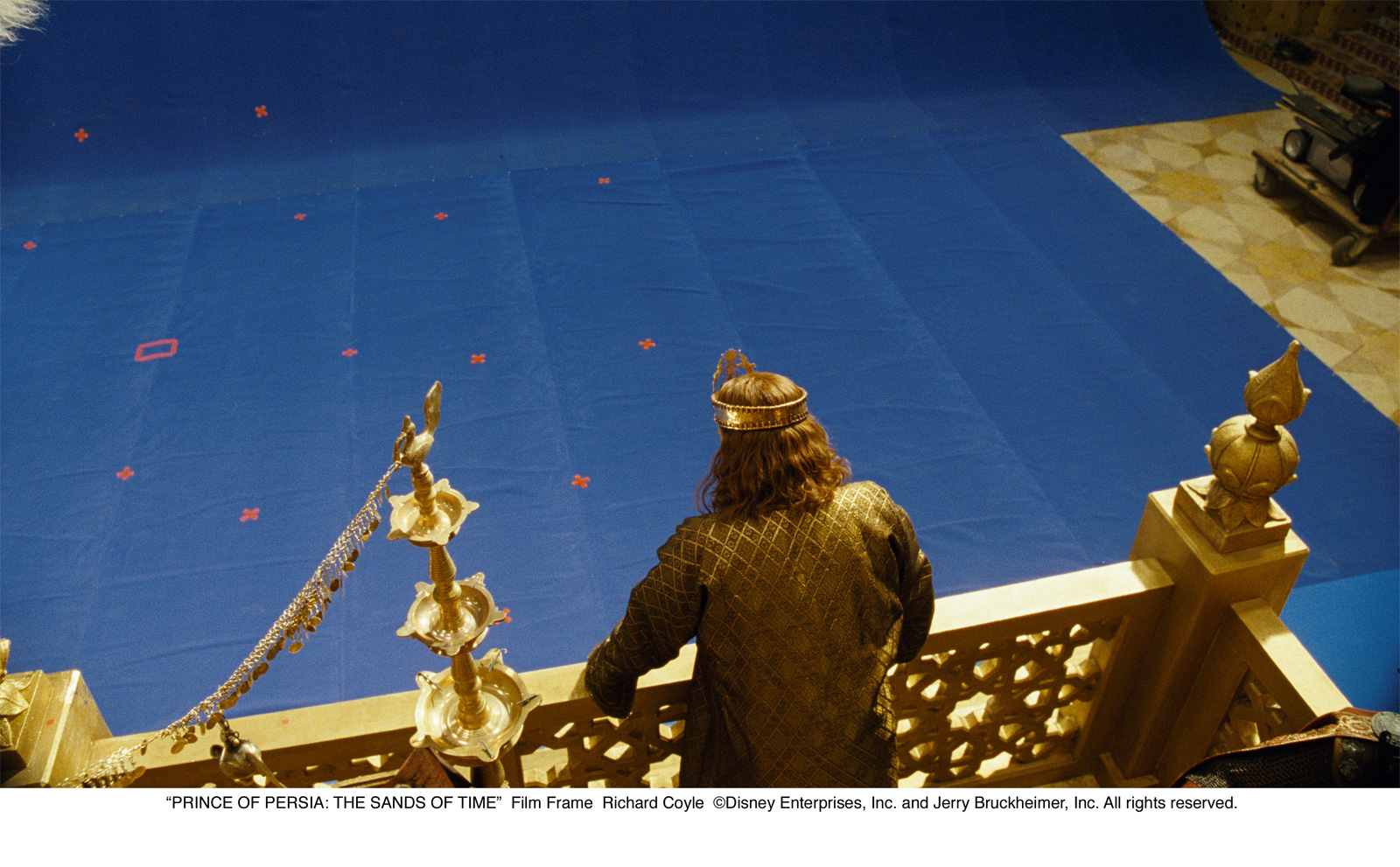

Can you explain the final sequence and its gigantic city that mixes old houses and skyscrapers? Was it all shot in front of a greenscreen?

We shot inside the actual house (an early 20th-century Craftsman house in the San Gabriel Valley in Pasadena, California) and used the location for both the scenes in Cobb’s memory and those in Limbo. For the Limbo shots we built a large greenscreen, supported on a platform, outside the windows. The cityscape was created from the same CG setup used for the scenes of Cobb and Ariadne walking through the deserted city. Great attention was paid to the compositing, with a lot of time spent on getting the depth of field and exposure right.

How was the top shot? Was it CG or real?

The top was shot for real; there was no CG for it.

How long did you work on that show?

I first read the script in February 2009 and then started on the show properly in April of that year. Our final delivery was at the end of May 2010 – so, in all, about 13 or 14 months.

How many shots did you do, and what was the size of your team?

We worked on 560 shots of which 500 are in the final film. In total we had about 230 people working on the visual effects over the duration of the show.

What is your next project?

Right now I’m taking a bit of a break – we’ll see what comes later this year!

What are the four films that gave you your passion for cinema?

My favourite films are not necessarily visual effects films, but they all feature visual innovation and take a rigorous approach to storytelling. I love David Lynch’s films, in particular THE STRAIGHT STORY, which I think is a powerfully emotional film about a very singular man’s journey across rural America. My favourite film is Alexander Korda’s 1940 version of THE THIEF OF BAGDAD, which features some of the earliest uses of bluescreen – I love its totally consistent sense of fantasy and powerful drama, and it also looks absolutely incredible. That film, perhaps more than any other, is what got me interested in making films and visual effects myself.

A big thanks for your time.

// WANT TO KNOW MORE?

– Meet the Filmmakers: Podcast of Paul Franklin at the Apple Store in London.

– Double Negative: Dedicated INCEPTION page on the Double Negative website.

– fxguide: Paul Franklin’s podcast and New Deal Studios’ work on the fxguide website.

© Vincent Frei – The Art of VFX – 2010