Luma Pictures is back on The Art of VFX to tell us about their work on THE AVENGERS. Vincent Cirelli and his team explain in detail what they did on this show.

How was the collaboration with director Joss Whedon and Production VFX Supervisor Janek Sirrs?

Vincent Cirelli, VFX Supervisor // We worked directly with VFX Supervisor Janek Sirrs. He was our accomplice on the client side and it was an absolute pleasure to collaborate with him. Janek is a consummate professional and very enjoyable to work with. He goes out of his way to clearly articulate notes and, in spite of a busy schedule, makes himself readily available for discussions. Janek is not only extremely technically knowledgeable in visual effects, but also has great aesthetic sensibilities.

What has Luma Pictures done on this show?

Payam Shohadai, Executive VFX Supervisor and Luma Co-Founder // A majority of the work was on the Helicarrier Bridge, which involved CG set extensions, multiple exterior cloudscapes, and glass-panel monitor replacements. We also executed shots of Thor conjuring his ‘Thornado’ storm with lightning and tornado simulations, as well as a few miscellaneous effects such as Hawkeye’s arrows and a CG desert environment.

What was the real size of the set for the Helicarrier and especially for the Bridge?

Richard Sutherland, CG Supervisor // The entire Helicarrier is basically the size of an aircraft carrier and this ambitious scale runs through all parts, including the bridge. The interior space of the bridge is a large circular room over 30 feet high and almost 100 feet in diameter. The front of the bridge features 160 degrees of windows which are over 20 feet tall.

Can you tell us more about the tracking challenges for the set extensions?

Richard Sutherland, CG Supervisor // The main challenge was achieving a perfect track over the length of each shot and over the entire frame. When adding CG to a plate, it normally only interacts with one section. For instance, a creature standing on the ground allows you to focus your tracking resources on that one spot. Since the bridge extensions mated with the practical bridge build-out along the entire top edge, the track had to be perfect across the entire frame. Our tracking team did a great job dealing with lens distortion, sweeping camera moves, and lots of moving people to get solid tracks for every shot.

How did you design and create the CG set extensions?

Richard Sutherland, CG Supervisor // We were very fortunate to be able to work from the incredible practical set that was built and filmed. Production provided a scan and blueprints of what they built, along with an incredible amount of reference photos. These photos showed the different materials that comprised the bridge and many of the details and fittings they used on set. We used these to turn some of the concept art provided into a few rough models which we could present for a design discussion. There were some details that were not really evident in the 2D paintings, as well as others that did not seem substantial enough to fit in with the massive scale of the Helicarrier. We worked with the director and VFX supervisor to come up with a design which worked structurally, matched the feel of the practical set, and satisfied the aesthetic of the film.

Did you receive any assets from other vendors?

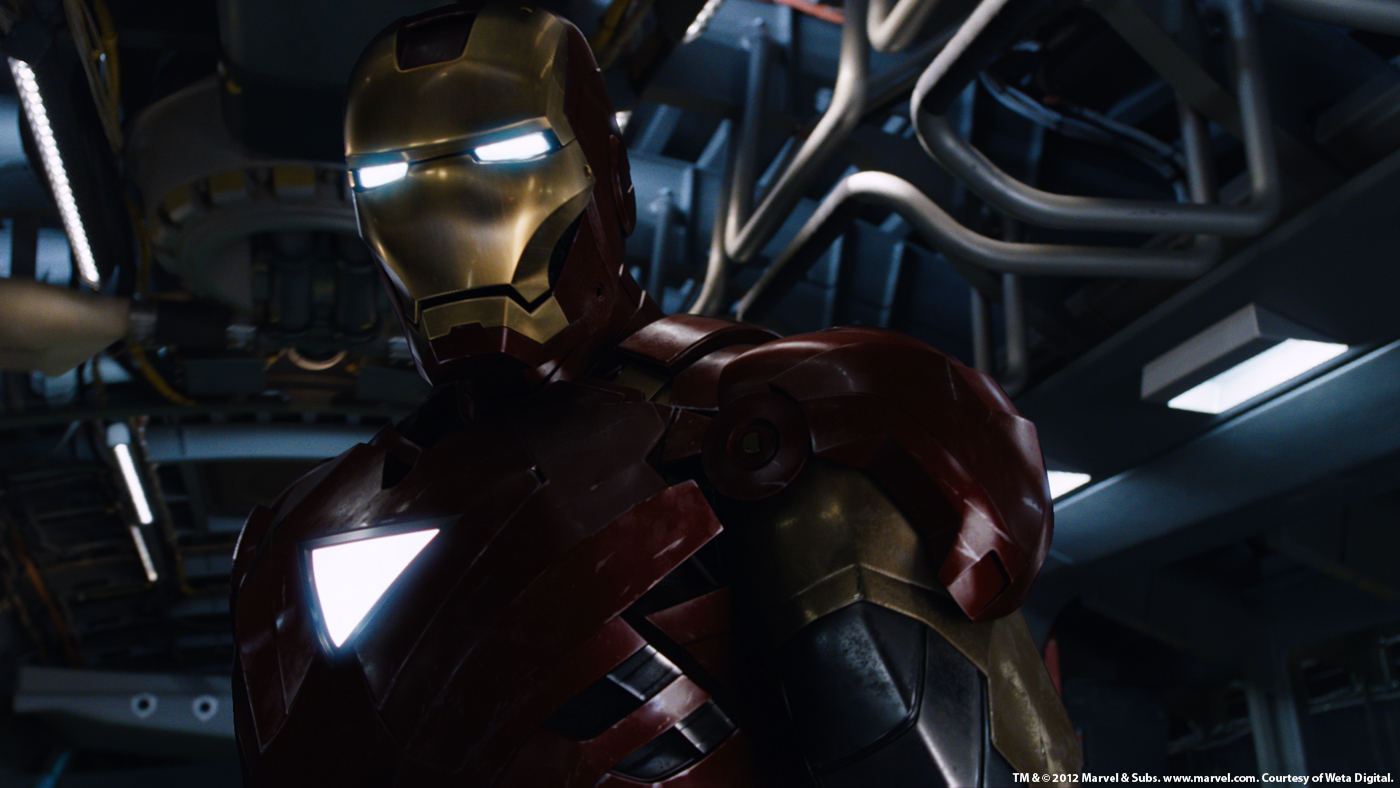

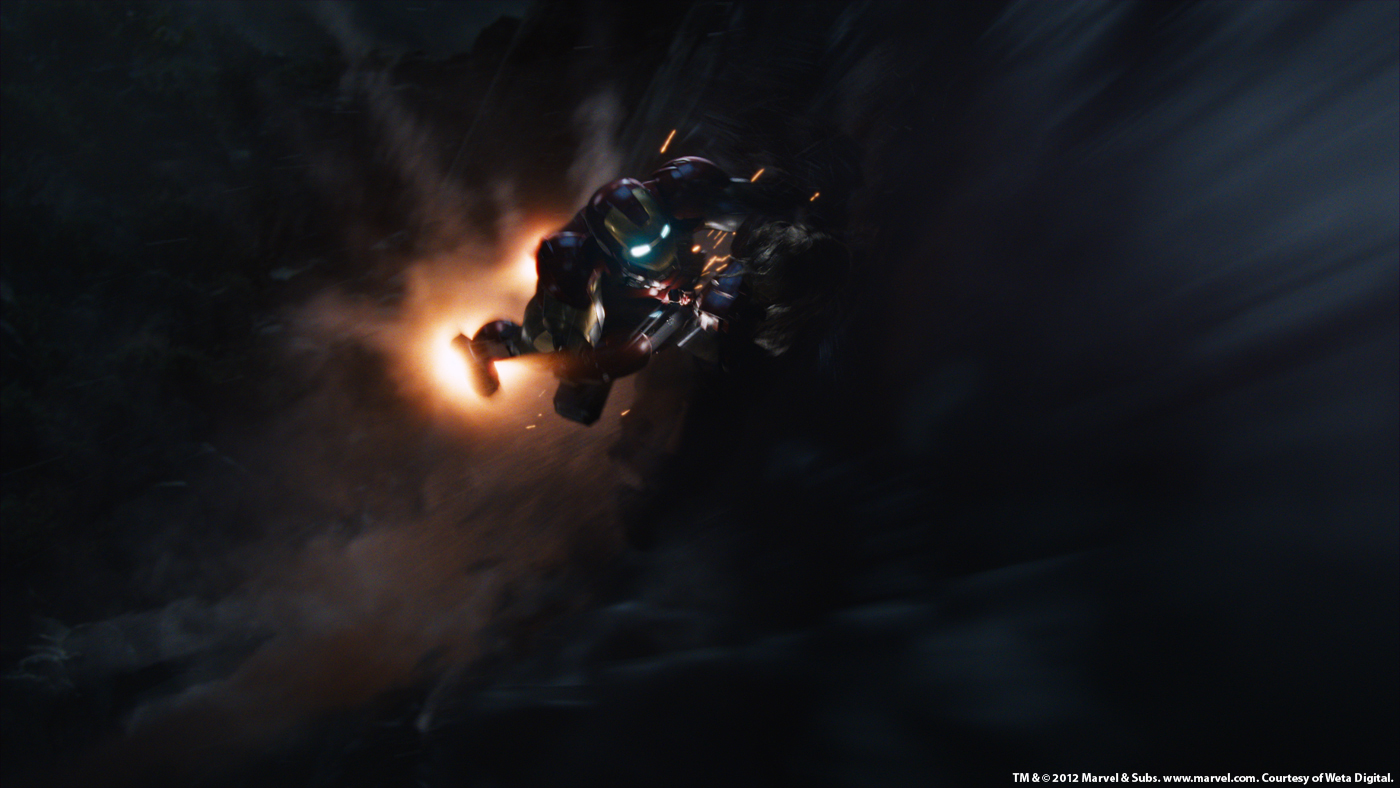

Payam Shohadai, Executive VFX Supervisor // We received some of the Hawkeye arrows from ILM, which formed the basis for our particular design and creation of the special ‘virus’ arrow. We also had the opportunity to animate, light, and composite a shot of Iron Man flying with a QuinJet hot on his trail, two additional assets provided by ILM.

Can you tell us more about the various skies and clouds for the background interior views? Did you use real footage for the skies or were they all CG?

Vincent Cirelli, VFX Supervisor // We completed five different cloud environments in total across various sequences, four of which were daytime and two nighttime. All the skies were CG and each one required various amounts of motion as the Helicarrier traveled through the cloud layers. Since a number of shots needed to be altered as the edit progressed, and to maintain continuity, we wanted to put as much control in the hands of our compositors as possible.

Richard Sutherland, CG Supervisor // Each environment started with a 2.5D Nuke sky gizmo we created using rendered cloud elements. We generated all of our skies in various CG packages, starting with a partial skydome rendered in Vue for the daytime skies. We then broke it up and reassembled it in Nuke with additional cloud elements, tweaking details and adjusting elevation based on the mood and needs of each scene. We added more controls so each compositor could then further refine the Helicarrier’s apparent elevation, forward speed, and placement within the clouds, based on the shot. This allowed us to block out the sequence quickly and respond to editorial changes.

Vincent Cirelli, VFX Supervisor // Depending on the amount of apparent motion through the clouds, we added full volumetric clouds as needed. We did quite a bit of cloud development in various packages, including Houdini, Maya and the Arnold renderer.

Richard Sutherland, CG Supervisor // For the night sequence and another scene in which we were inside the clouds, we used a combination of matte painted clouds projected onto basic shapes in Nuke and rendered volumes for added depth. As the shots neared final completion, we augmented many of them with full volumetric renders.

How did you create the lightning and tornado effects?

Raphael A. Pimentel, Animation Supervisor // Our animation team started the process by blocking out the lightning and tornado timing and placement. This information was then turned over to our FX team to produce the fluid simulations for clouds and the tornado using our updated FumeFX pipeline, which we originally set up during our work on THOR.

Richard Sutherland, CG Supervisor // The FX team also produced several additional simulations for dust, leaves and larger debris. For one of the shots, we ended up replacing the entire field of plants and grass Thor was standing in so that we could add some lighting and wind interaction. The lightning went directly to our lighting team, along with all of the FX elements, so that they could all be rendered with passes for our compositors to layer in correctly.

Have you developed specific tools for the various FX such as the smoke and sparks?

Richard Sutherland, CG Supervisor // We have a collection of rigs and scripts from past shows for sparks and the like, in addition to a pre-rendered library of elements our compositors can choose from and place in 3D space. For smoke and other fluid effects, we have been working since THOR on a FumeFX-based pipeline. For THE AVENGERS, we expanded this pipeline to include not only rendering of FumeFX in Maya on Linux, but also simulation in our standard Maya and Linux pipeline. This was a huge accomplishment from our FX pipeline developer and we were very pleased to have it ready in good time.

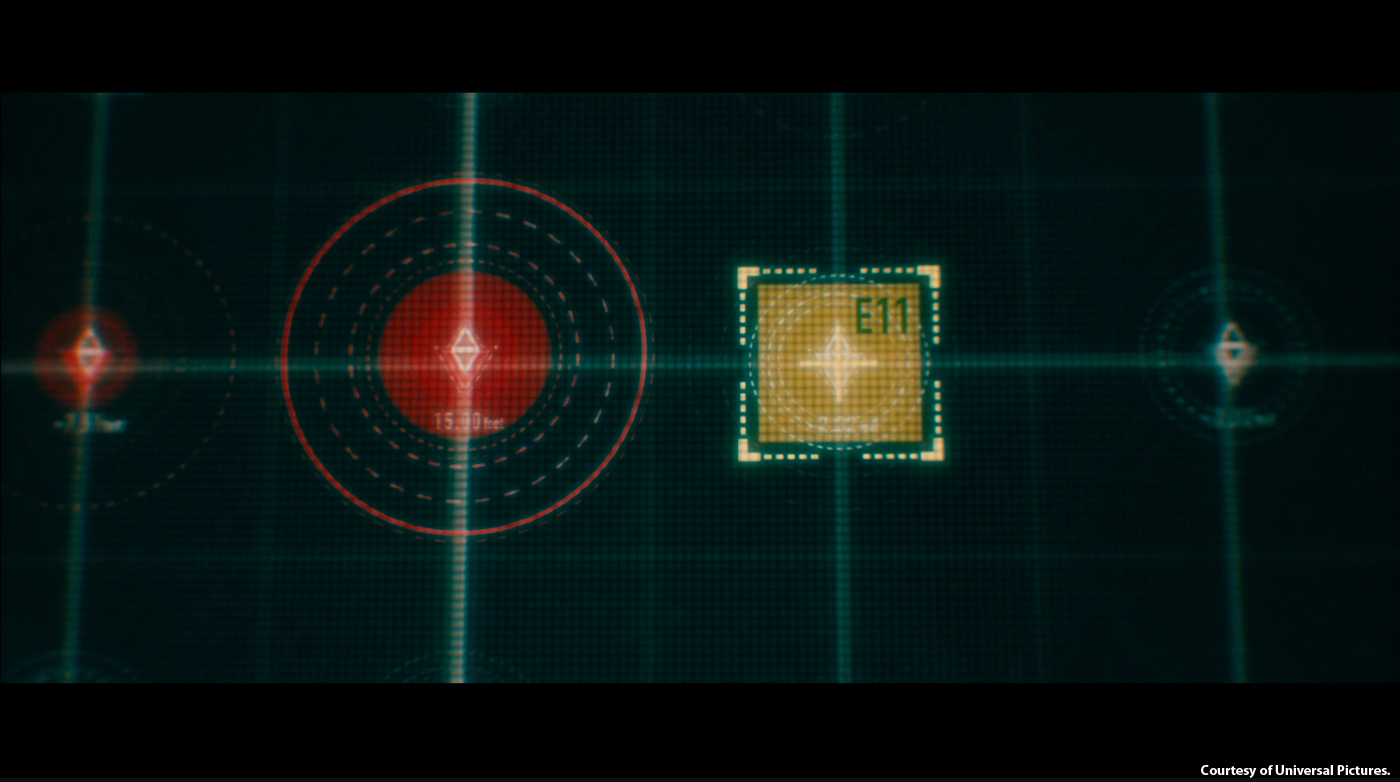

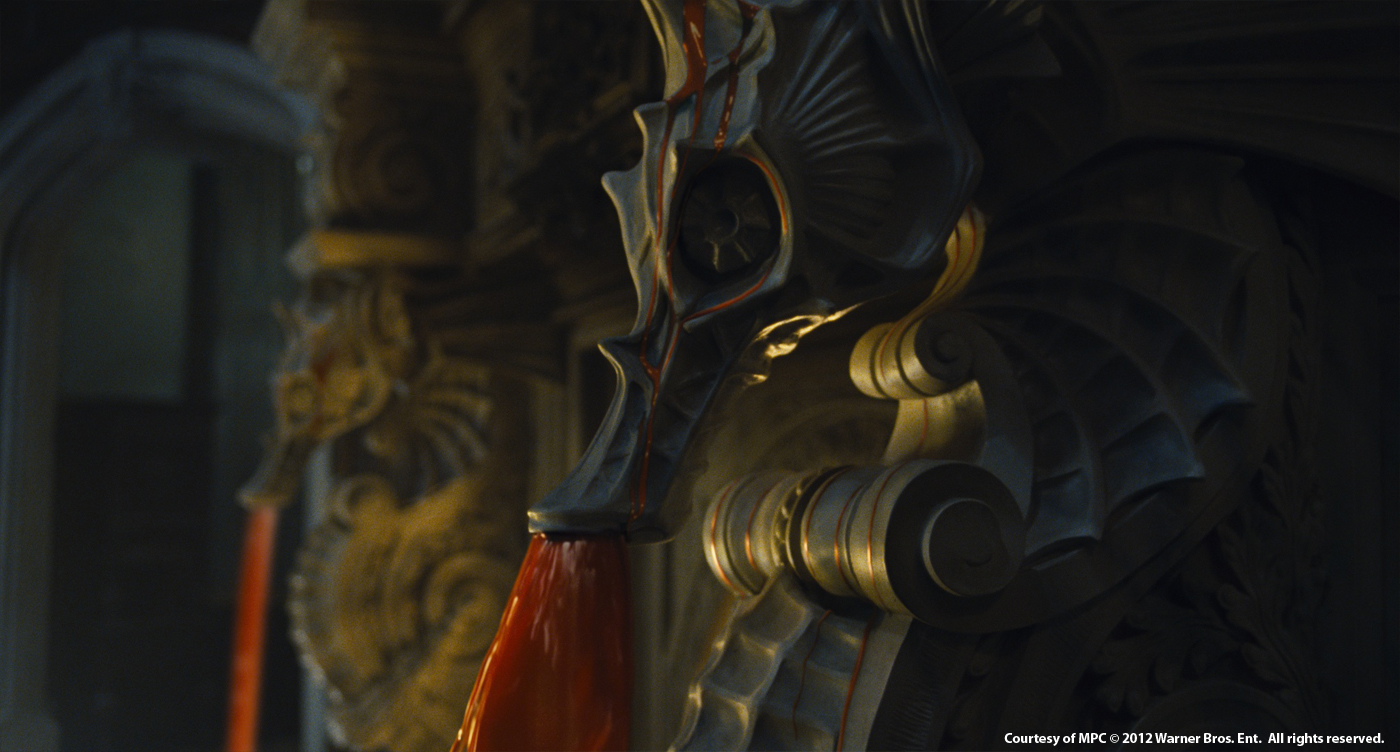

How did you create the CG arrows of Hawkeye?

Vincent Cirelli, VFX Supervisor // Luma designed the virus injection tip for an unusual arrow Hawkeye uses in a strategic attack. We began with the shaft and fletchings received from ILM, then created several tip designs that would fit into the socket that had been built on set. Each arrow tip opened in a unique way to insert prongs into the socket and enable the computer virus injection. We incorporated some specific ideas from the Marvel team into every design, such as the spring-loaded feel and electronic progress meters, but approached them in different ways in each. After a few revisions, we came up with a design which was aerodynamic when closed, but fit well into the machinery shot on set.

How did you animate the CG arrows of Hawkeye?

Raphael A. Pimentel, Animation Supervisor // Hawkeye’s arrows were animated with a main control used to translate the arrow through space. Additionally, it featured flex and bend attributes which the animators used to achieve the bow release wobble in conjunction with high frequency vibrations seen in real-world arrows once they impact a surface.
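As a rough illustration of the impact vibration described above, a decaying sine wave is one common way to model that kind of motion. This is a sketch only, not Luma's rig: the function name `impact_vibration` and the amplitude, frequency, and decay values are all assumptions chosen for the example.

```python
import math


def impact_vibration(t, amplitude=1.0, frequency_hz=10.0, decay=8.0):
    """Bend offset t seconds after impact, modeled as a decaying sine wave.

    All parameter values are hypothetical; a real rig would expose its
    own flex and bend attributes and be keyed by the animators.
    """
    if t < 0:
        # Before the arrow hits, there is no impact vibration.
        return 0.0
    envelope = amplitude * math.exp(-decay * t)  # oscillation dies out over time
    return envelope * math.sin(2.0 * math.pi * frequency_hz * t)


# Sample the offset once per frame for the first second at 24 fps.
offsets = [impact_vibration(frame / 24.0) for frame in range(24)]
```

The values in `offsets` could then drive a bend attribute frame by frame, with the decay rate controlling how quickly the arrow settles.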

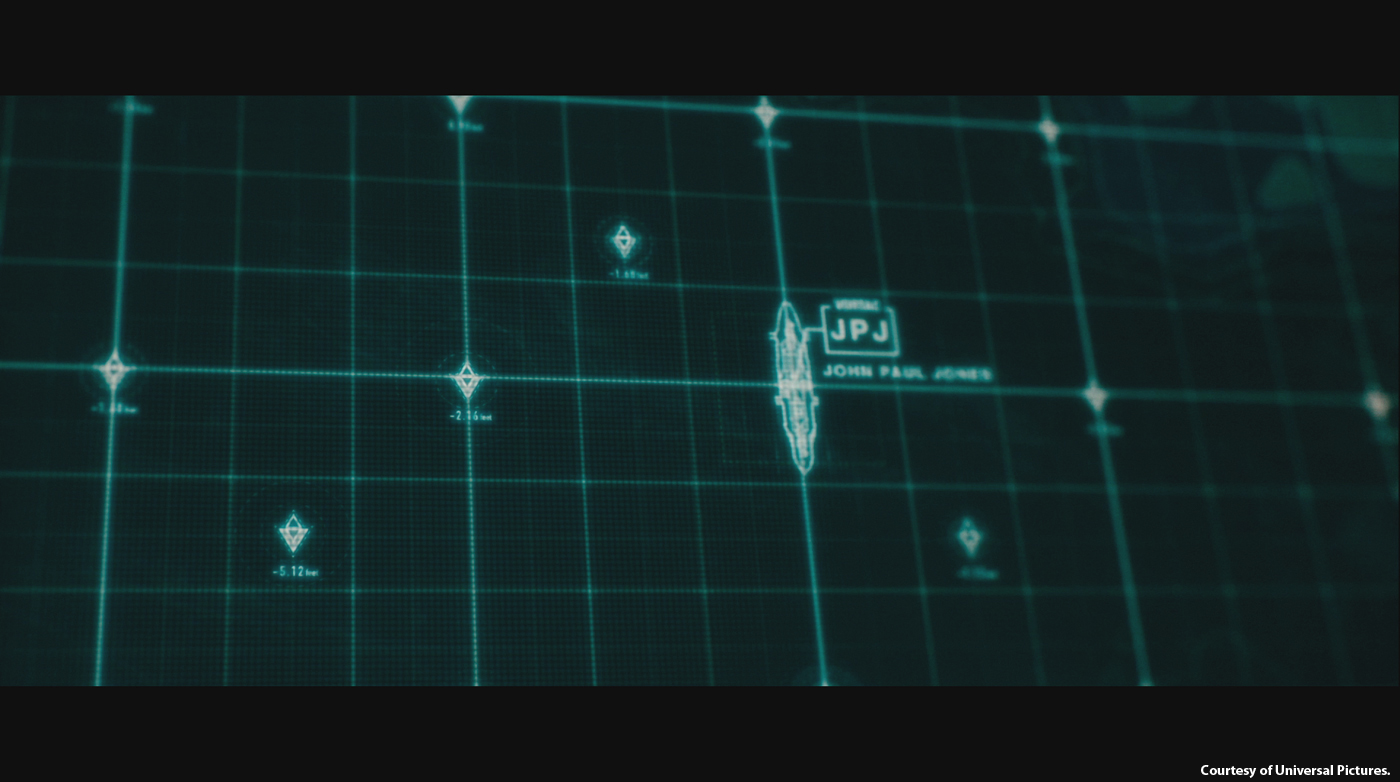

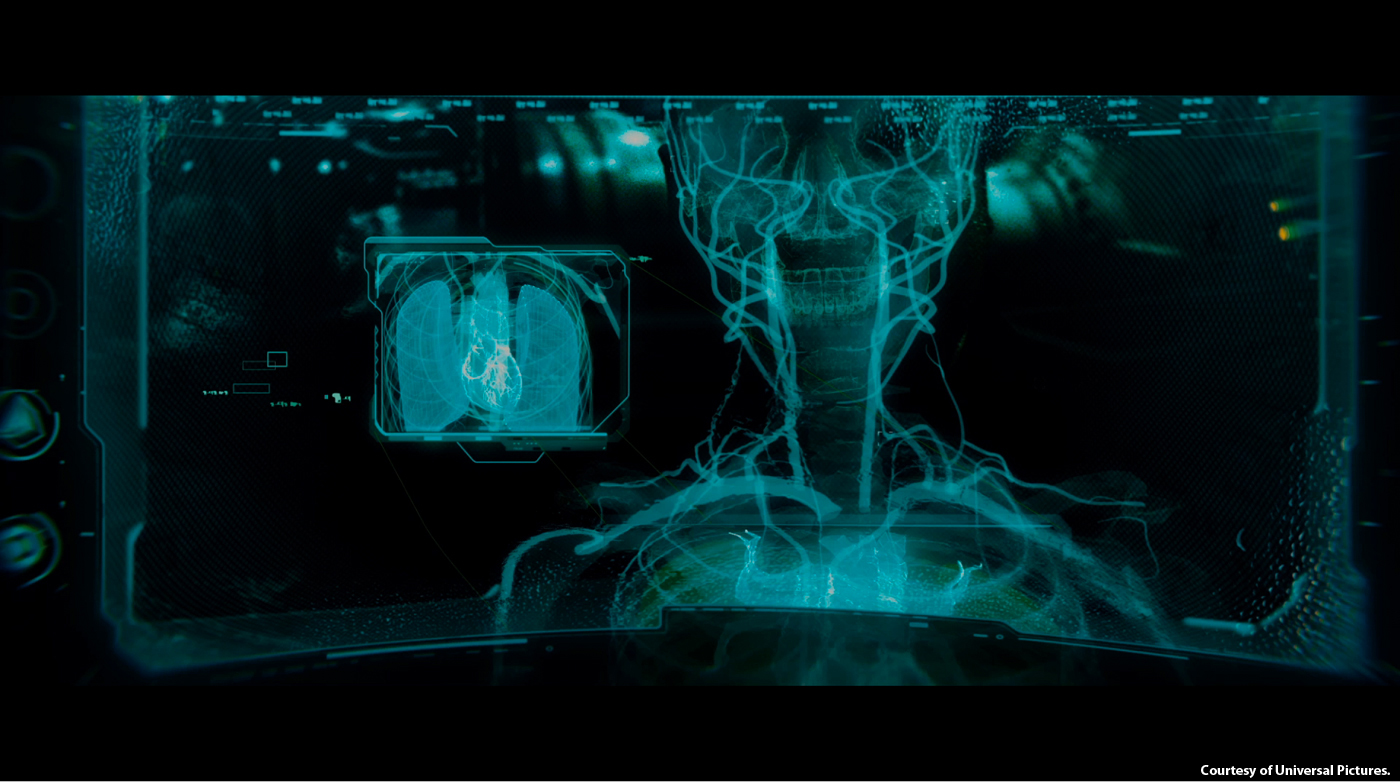

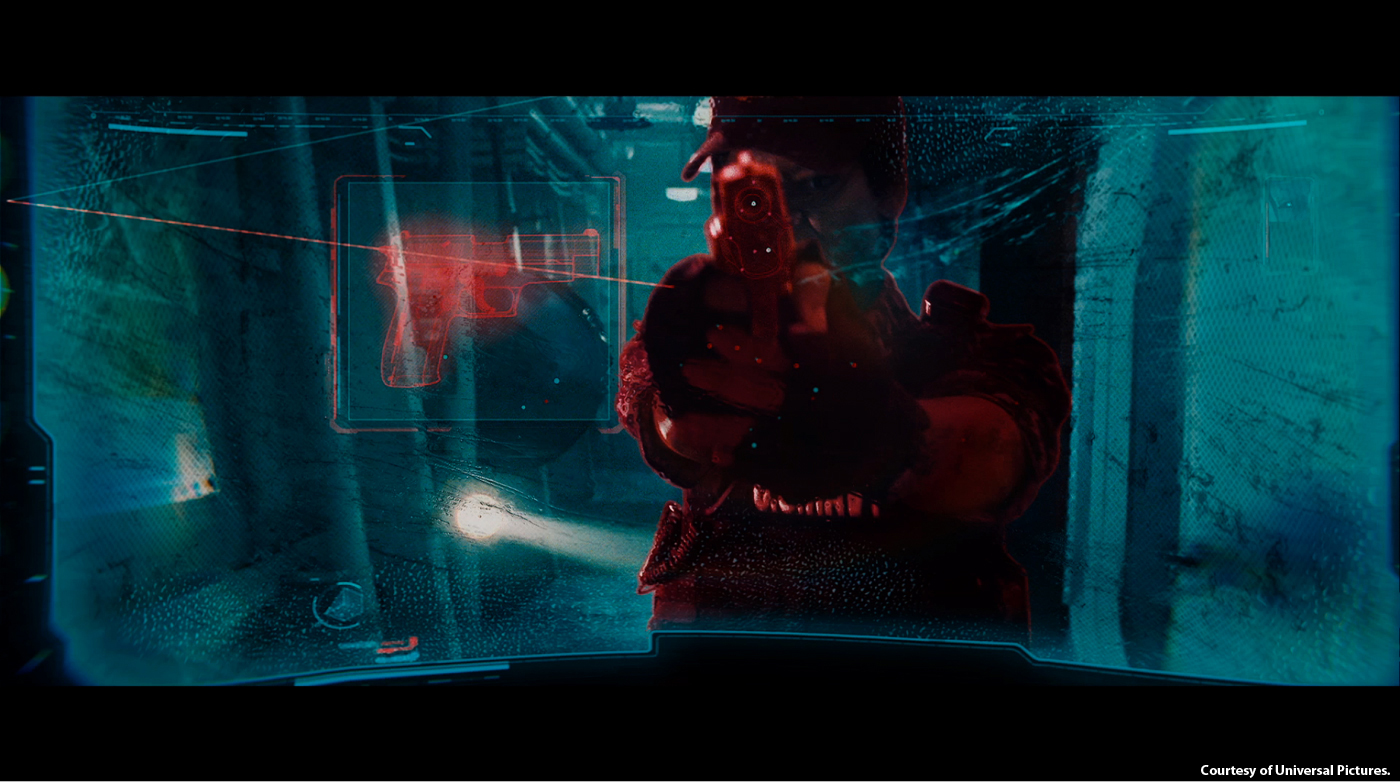

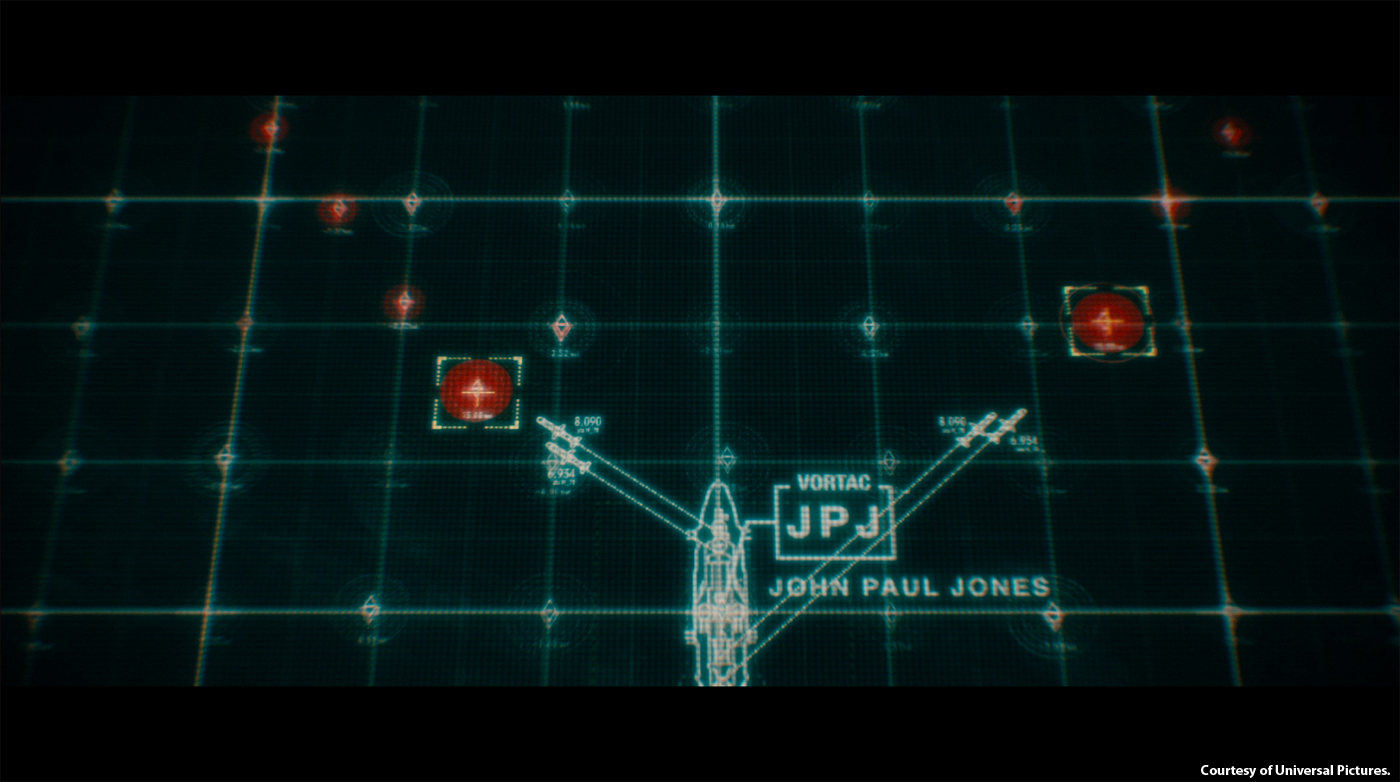

The Helicarrier Bridge features an impressive number of monitors. How did you approach this part?

Vincent Cirelli, VFX Supervisor // Organization was definitely a key to getting the monitors into the shots. Each shot had very specific footage for each monitor, and that would change from time to time as the edit was refined. We received plans and scans for the practical set early on. Using these, we built a representation of all the monitors and synced up our naming with those provided by the graphics vendor. Each shot was tracked and we loaded the camera and monitor geometry into Nuke, where we had a script set up to load the appropriate footage onto each monitor for the compositors.
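The bookkeeping described above can be sketched in plain Python. This is a hypothetical example, not Luma's actual Nuke script: the function `resolve_monitor_footage`, the monitor names, and the file paths are all made up for illustration. It assumes each monitor has a default clip and that individual shots can override it as the edit changes.

```python
from typing import Dict, List


def resolve_monitor_footage(
    monitors: List[str],
    defaults: Dict[str, str],
    shot_overrides: Dict[str, str],
) -> Dict[str, str]:
    """Return the footage path to load on each monitor for one shot.

    A per-shot override wins over the default clip. Monitors with no
    footage at all are reported together so nothing is silently skipped.
    """
    assignments: Dict[str, str] = {}
    missing: List[str] = []
    for name in monitors:
        if name in shot_overrides:
            assignments[name] = shot_overrides[name]
        elif name in defaults:
            assignments[name] = defaults[name]
        else:
            missing.append(name)
    if missing:
        raise KeyError(f"no footage for monitors: {sorted(missing)}")
    return assignments


# Hypothetical names and paths, for illustration only.
monitors = ["bridge_mon_01", "bridge_mon_02"]
defaults = {
    "bridge_mon_01": "graphics/default/mon_01.mov",
    "bridge_mon_02": "graphics/default/mon_02.mov",
}
overrides = {"bridge_mon_02": "graphics/shot_0420/mon_02_v003.mov"}
result = resolve_monitor_footage(monitors, defaults, overrides)
```

Keeping the monitor names identical to the graphics vendor's names, as described in the answer, is what makes a simple lookup like this workable.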

What was the biggest challenge on this project and how did you achieve it?

Richard Sutherland, CG Supervisor // While not technically difficult, the Helicarrier bridge sequence as a whole was a clerical challenge in that there were several dozen monitors to keep track of and propagate across different scenes and from different angles. Seamlessly integrating the CG set with the clouds plus the glass-panel monitor graphics required some elbow grease and a deceptively high level of attention to detail.

What will you take away from this experience?

Vincent Cirelli, VFX Supervisor // What we really take away from this experience is an even greater appreciation for how buttoned-up the visual effects crew is at Marvel. It’s impressive how many studios and shots they can juggle without missing a beat. It’s also a testament to the collective talent of Luma’s artists, supervisors, and managers. We take pride in our ability to quickly react to and accommodate changes, and this mission was made even easier for us thanks to our excellent ongoing relationship with Marvel.

How long did you work on this film?

Payam Shohadai, Executive VFX Supervisor // Turnovers and look dev began trickling in back in late August 2011 and we wrapped in March.

How many shots did you complete and what was the size of your team?

Payam Shohadai, Executive VFX Supervisor // We employed a team of approximately 80 at its peak (counting artists as well as production and operations staff) to complete close to 200 shots across various sequences.

What is your next project?

Luma Pictures recently completed work on PROMETHEUS (Scott Free Productions/20th Century Fox) and Sacha Baron Cohen’s THE DICTATOR (Paramount Pictures) and are currently wrapping up G.I. JOE: RETALIATION (Paramount Pictures).

A big thanks for your time.

// WANT TO KNOW MORE?

– Luma Pictures: Dedicated page about THE AVENGERS on Luma Pictures website.

© Vincent Frei – The Art of VFX – 2012