In 2017, Axel Bonami explained the work of MPC on GHOST IN THE SHELL. Today he takes us to the bottom of the ocean to talk about UNDERWATER.

How did you and MPC get involved on this show?

MPC worked on a pitch under the supervision of MPC VFX Supervisor Arundi Asregadoo. It involved setting the mood and providing tests for the dry-for-wet shoot and its requirements. The aim was to give a good idea of what we would be targeting and the challenges we would face in postproduction.

How was the collaboration with director William Eubank and VFX Supervisor Blair Clark?

Fantastic, the best collaboration I’ve ever had. When UNDERWATER was pitched to me as my next show, I had just finished working on GHOST IN THE SHELL, with its spider tanks, thermoptic suit and solograms… I am a keen scuba diver myself, so the idea of working on deep-diving visual effects was perfect for me. We were always on the same page with Blair Clark and William Eubank: same visual references, similar approaches to what we wanted to achieve. I still remember the video Blair sent me where he had rented scuba diving gear and filmed himself walking in his swimming pool. Priceless.

What were their expectations and approach for the visual effects?

To have viewers not notice they were looking at visual effects, and to bring to life a totally believable deep underwater world. Apart from the sequences where creatures are visible and clearly outside our known world, we wanted viewers to have no idea they were looking at a fully computer-generated shot, and instead to feel that the characters were really walking in deep water.

How did you organize the work with your VFX Producer?

I always work very closely with my VFX Producer. It is important to constantly be on the same page about the show’s requirements and the solutions being considered. The show clocks in at a little over 400 shots, so when we decided to fully computer-generate 300 of them, it was a call that had to balance the budgetary restrictions against getting the best visual result.

How was the work split amongst the MPC offices?

London covered the main creature assets, modeling, rigging and lookdev. Bangalore helped with secondary assets, plate clean-ups, roto-animation and compositing. Montreal handled everything else.

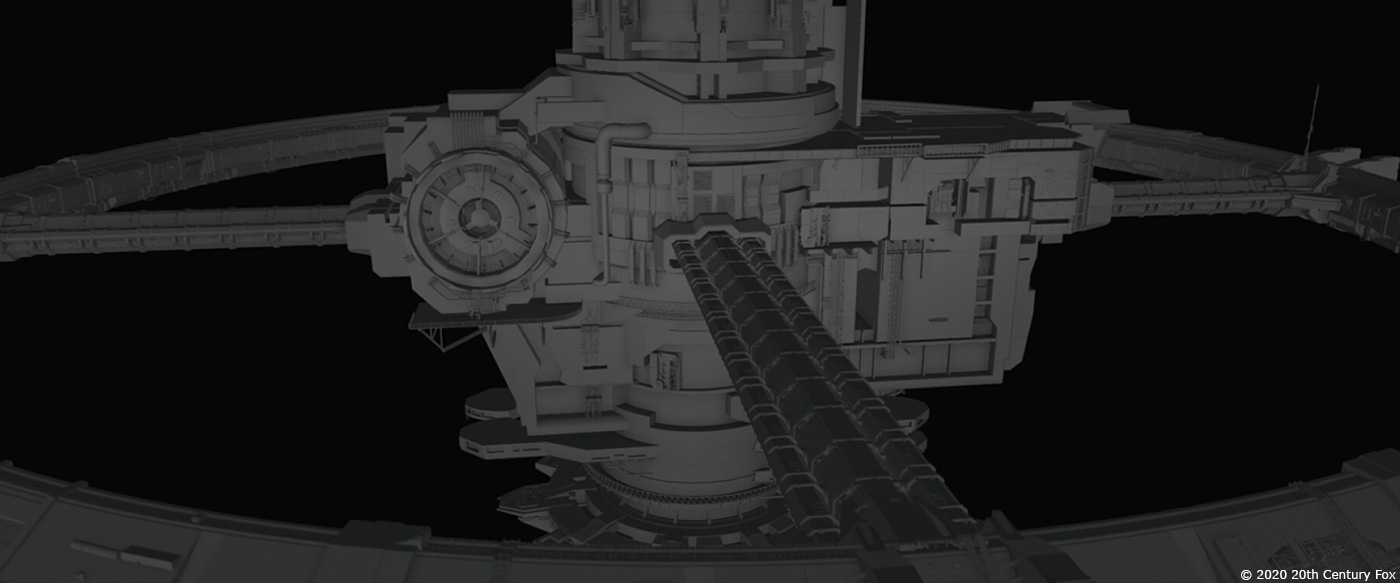

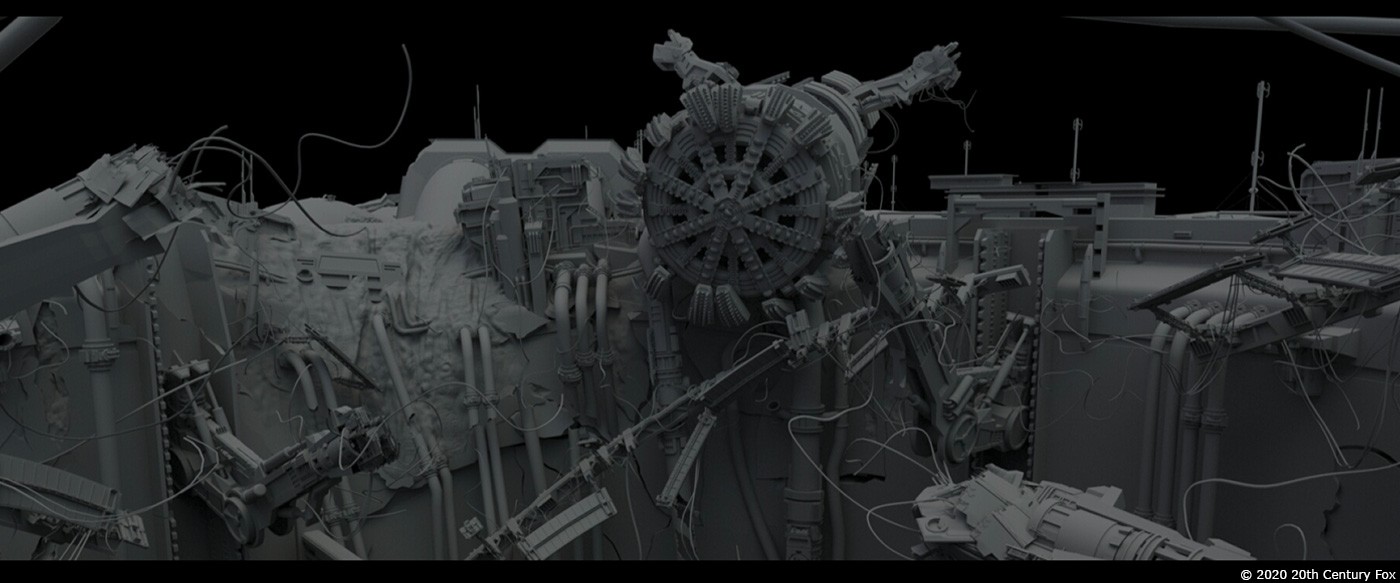

Can you elaborate on the design and creation of the Kepler Station?

The station was divided into several parts: the Kepler Station (where the movie starts), the Midway platform (the baby clinger capture), Midway Station (the run to safety as the Kepler collapses), the drill site, the ocean floor, Shepard Station and finally the Roebuck. To start with, we had sketches and some rough model ideas provided by production and Blair Clark, plus partial sets of the station platform and the ocean floor. Our Environment Supervisor, Thierry Hamel, and his team gathered references of industrial sites that would line up with Naaman Marshall’s set designs and concept art. We presented rough model updates of the different set pieces to agree on relative sizes and main features, then worked up the detailing in a second stage. Internally, we had a full 3D representation of the entire station, from the Kepler to the Roebuck, literally covering 5 miles. During post production there were talks of doing a flyover of the whole station at the beginning of the movie, so we were prepared. In the end this did not happen, but it made sense for us internally to have a clear road map.

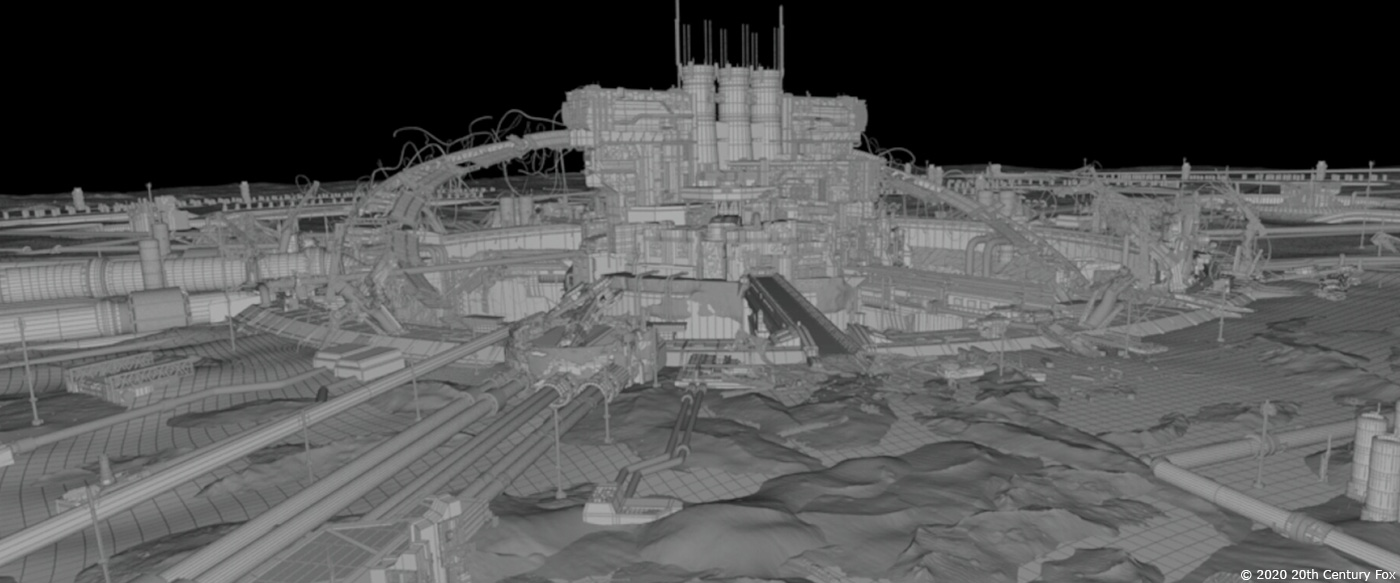

How did you destroy it due to the earthquake?

For the Kepler implosion sequence in the first part of the movie, we looked at real pressure releases on different types of tanks and barrels. William wanted to feel the pressure and the physics of underwater collapses and explosions. We built “simple” rigs for animation to time the different events, so we could all agree on the rhythm. Our Tech Anim Lead, Timothee Nolasco, and his team would then simulate actual metal compression, deformation and heavy collapses, a real challenge for such a large environment. Our FX team ran the secondaries: explosion and implosion bubbles, silt, particulates, bubble blasts… During the compositing phase, our compositing team, under the supervision of Ruslan Borysov and Sreejith Venugopolan, worked closely with the environment team on additional matte painting detail. The exterior assets were all started with some texturing and shading, but due to their huge size and number, we used matte painting for the finishing details that emphasize the scale.

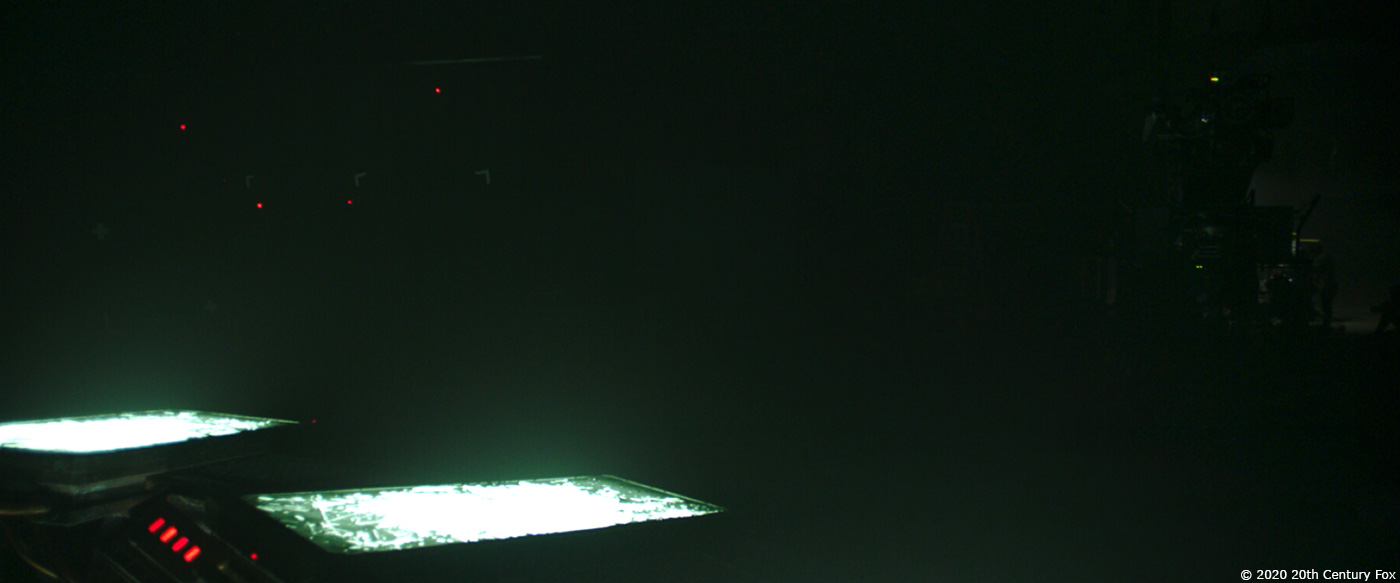

How did you create and animate the water simulations?

We started by gathering references. We used a lot of saturation diving clips showing underwater workers at depths of 150 m, installing and maintaining underwater structures such as oil rigs. This was very good reference for underwater visuals, particulates and silt behavior. We had many different types of particulates, each with specific behaviors, and it was important to nail the actual fluid behavior and how it is affected when particles get sucked into movement vortices. Our Lighting Lead, Francois De Villiers, improved our particulate shading based on modelled instances, so we could actually read the light behavior on each individual particulate, and improved the physical simulation to perfect how they spin and float, and how they absorb or diffuse light. Our FX Lead, Francis St-Denis, one of his key artists, Bryce Vallee, and their team created hundreds of generic caches for each type of particulate, each with a specific behavior. Thanks to our software team, who developed and improved our FX cache-management pipeline, we would first set up a shot using generic caches, controlling their start frames and spatial positions. This made sure we could generate the very high volume of particles needed. We would then upgrade the caches where required to better match the action. Finally, compositing would add, here and there, what the Director called the magic trick: a few specific bubbles or a swirl of bigger particulates to create the final wet feel.
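To make the idea of reusing generic caches more concrete, here is a minimal Python sketch of re-timing and re-placing one pre-simulated cache several times in a shot. The class and function names, the per-frame list-of-positions layout and the looping behavior are all hypothetical illustrations; MPC’s actual cache-management pipeline is proprietary and not described at this level of detail.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GenericCache:
    """Hypothetical pre-simulated particle cache: one list of positions per frame."""
    name: str
    frames: List[List[Vec3]]  # frames[i] holds every particle position at cache frame i


@dataclass
class CacheInstance:
    """One placement of a generic cache inside a shot (hypothetical layout)."""
    cache: GenericCache
    start_frame: int   # shot frame on which cache frame 0 plays
    offset: Vec3       # world-space translation applied to every particle
    loop: bool = True  # wrap around when the shot outlasts the cache

    def positions_at(self, shot_frame: int) -> List[Vec3]:
        local = shot_frame - self.start_frame
        n = len(self.cache.frames)
        if local < 0:
            return []  # this instance has not started yet
        if local >= n:
            if not self.loop:
                return []  # cache has run out and does not loop
            local %= n
        ox, oy, oz = self.offset
        return [(x + ox, y + oy, z + oz) for x, y, z in self.cache.frames[local]]


def gather_shot_particles(instances: List[CacheInstance], shot_frame: int) -> List[Vec3]:
    """Merge the particles of every instance for one frame of the shot."""
    out: List[Vec3] = []
    for inst in instances:
        out.extend(inst.positions_at(shot_frame))
    return out


# A tiny two-frame "silt" cache reused twice with different timing and placement.
silt = GenericCache("silt_drift", frames=[[(0.0, 0.0, 0.0)], [(0.0, 0.1, 0.0)]])
shot = [
    CacheInstance(silt, start_frame=1001, offset=(0.0, 0.0, 0.0)),
    CacheInstance(silt, start_frame=1000, offset=(5.0, 0.0, -2.0)),
]
print(gather_shot_particles(shot, 1001))  # [(0.0, 0.0, 0.0), (5.0, 0.1, -2.0)]
```

The point of the design is that the expensive simulation happens once per cache, while each shot only stores cheap per-instance parameters (start frame and placement); a cache would only be re-simulated when it needs to react to the specific action.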

Can you tell us more about the shooting of the sequence outside the station?

For all the open-water scenes, following the pitch and some early discussions with Blair, we opted for a dry-for-wet shoot. That way we would get the best and most realistic performances in camera. Partial sets were built, with ocean floor, underwater junk structures and parts of platforms where the actors would play their scenes. As there was no thin cloth floating and no hair sticking out of the suits, we also decided not to use any green screen. It never actually covers the right area, and in a slightly smoky environment it always ends up flooding the whole set with green spill.

We wanted to keep the actors and their performances in a contained, moody environment. The Director of Photography, Bojan Bazelli, had no constraints when setting up how he wanted the scene to look. One of our requests was to expose the shots 1 to 2 stops brighter, to get better visibility in the raw plates; a grade in post would then bring the plates back down. We shot at different frame rates depending on the action, so we could keep some underwater resistance feel in certain types of actions, and we sometimes used cable rigs to add some float to the actors’ performances.
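The two numbers behind this paragraph are simple to work out. The sketch below shows the apparent speed when footage shot at a higher frame rate plays back at a standard rate, and the linear light factor of a change in stops (each stop doubles or halves the light). The function names and the 36 fps and 1.5-stop values are hypothetical examples, not the frame rates or exposures actually used on the show, and the grade factor assumes scene-linear images.

```python
def playback_speed(capture_fps: float, playback_fps: float = 24.0) -> float:
    """Apparent speed of the action when footage captured at capture_fps
    plays back at playback_fps. Values below 1.0 mean slow motion (overcranking)."""
    return playback_fps / capture_fps


def exposure_gain(stops: float) -> float:
    """Linear light multiplier for a change of `stops` (one stop = factor of 2)."""
    return 2.0 ** stops


# Hypothetical example values; the interview does not list the actual settings.
print(playback_speed(36.0))  # about 0.667: action plays at two thirds of real speed
print(exposure_gain(1.5))    # plate captured 1.5 stops brighter holds about 2.83x the light
print(exposure_gain(-1.5))   # linear grade multiplier bringing it back down (about 0.35)
```

Overcranking gives the slightly slowed, "heavier" motion that reads as water resistance, while the brighter exposure keeps more signal in the dark plates before the grade pulls them back.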

Sets were dressed with hundreds of LEDs to help the matchmove tracking. Even though everything was computer generated in post, we would not have had the same performance, nor the same moody intention, had it been created afterwards. All of the actors’ real fear, sweat, tiredness and tears were carried over into the CG body performances through precise roto-animation and helmet inserts.

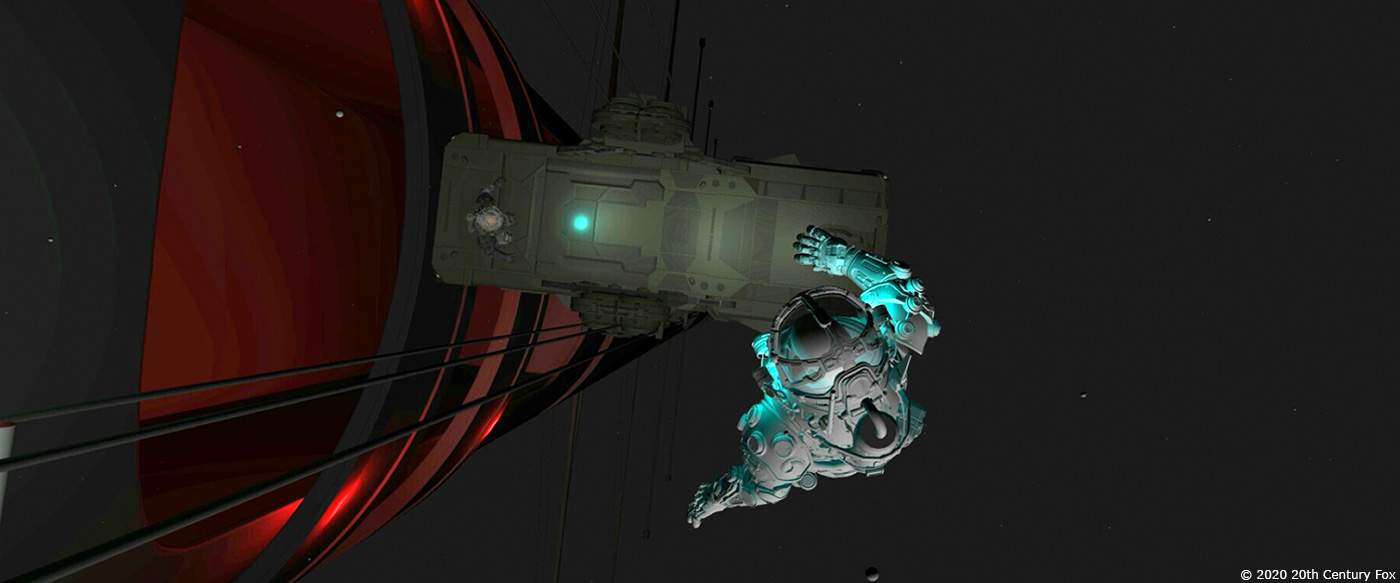

Can you explain the suit creation in detail?

The suits were a combination of design work by Ash Thorp and Calum Alexander Watt, then fully built as practical suits by Legacy. Legacy built different versions of the suits, including some functioning underwater helmets for a couple of underwater tank scenes. They were pretty impressive designs and props. We created matching digital versions of them. We knew we had several full CG shots where we would use the digital version, which had to match the real suits perfectly. In the end, we used these assets even more, to immerse viewers deeper into this underwater venture.

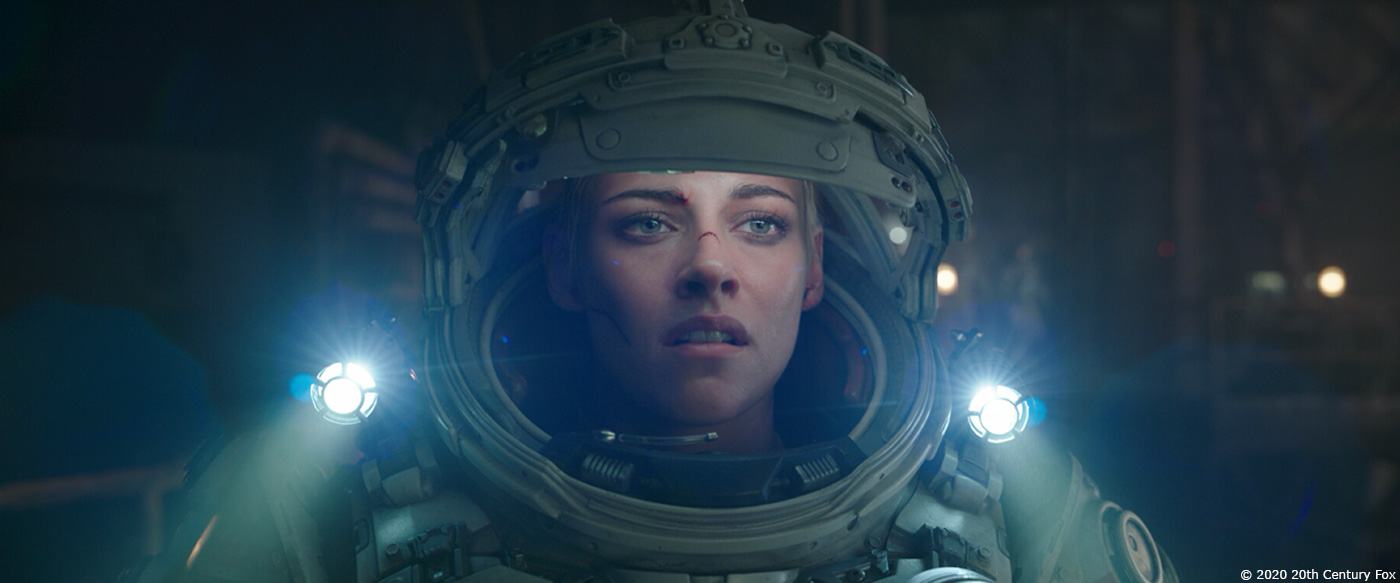

How did you handle the visor challenge?

It is common nowadays to add visors in post to control their extreme reflectivity. This technique was used a lot on THE MARTIAN. It is more comfortable for the actors, and it is a great help when we need to add the reflection of a world that does not exist at the time of shooting. The challenge came from refraction in an underwater environment. When light passes between the water and the air inside a mask, it is refracted, which optically distorts the image. When snorkeling, you will notice that things appear bigger and closer than they really are. People are familiar with this phenomenon, at least visually, from underwater documentaries. With a spherical helmet, it becomes a huge challenge, as it is like looking into a crystal ball. As there were some real underwater shots with the actors in the helmets, we had great reference of the effect, except that with such a helmet, everyone’s head looked very small, as if seen through a fisheye lens.

In post we were already running tests on refraction. Starting from real water refraction, we found it was too much to sustain over so much of the main actors’ screen time, so we looked into reducing the index of refraction from the natural value of roughly 1.33 to slightly under 1.1. We then had to reduce the refraction in the principal photography footage as well, so it would match the agreed look. In the end the illusion worked: principal photography shots with reduced refraction were mixed with fully computer-generated shots using post-refracted face plates. On top of that, our Compositing Supervisor, Ruslan, designed a HUD floating in the glass with basic vitals, like any diving computer would have, to enhance the suit visors.
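Why a spherical helmet shrinks heads, and why lowering the index helps, can be estimated with the textbook paraxial formula for a single spherical refracting surface, n1/s_o + n2/s_i = (n2 - n1)/R. The Python sketch below is a simplified model under stated assumptions: the thin glass shell is ignored, only paraxial rays are considered, and the function names and the 0.25 m helmet radius are hypothetical. It uses water’s refractive index of roughly 1.33 and the reduced value of about 1.1 mentioned in the interview. It is not MPC’s refraction setup.

```python
import math


def refract_at_sphere(n1: float, n2: float, radius: float, object_distance: float):
    """Paraxial imaging by one spherical refracting surface (Cartesian sign convention):
        n1 / s_o + n2 / s_i = (n2 - n1) / R
    s_o > 0 for a real object on the incoming side, s_i > 0 for a real image on the
    outgoing side, R > 0 when the centre of curvature lies on the outgoing side.
    Returns (image_distance, lateral_magnification)."""
    power = (n2 - n1) / radius  # becomes 0.0 for a flat surface (radius = math.inf)
    denominator = power - n1 / object_distance
    if denominator == 0.0:
        raise ValueError("object at the focal point: image at infinity")
    image_distance = n2 / denominator
    magnification = -(n1 * image_distance) / (n2 * object_distance)
    return image_distance, magnification


def helmet_face_magnification(n_water: float, helmet_radius: float, face_to_glass: float) -> float:
    """Apparent size of a face seen from the water through a spherical air-filled helmet.
    Light leaves the air (n = 1.0) and enters the water; the helmet's centre of curvature
    is inside, on the incoming side, so R is negative. The glass shell is ignored."""
    _, m = refract_at_sphere(1.0, n_water, -helmet_radius, face_to_glass)
    return m


for n in (1.33, 1.1):
    # Face roughly at the centre of a hypothetical 0.25 m radius helmet.
    print(n, round(helmet_face_magnification(n, 0.25, 0.25), 3))  # 0.752, then 0.909

# Flat mask, object 2 m away in the water, viewer in air:
# the (virtual, hence negative) image sits about 1.5 m away, with magnification 1.0.
print(refract_at_sphere(1.33, 1.0, math.inf, 2.0))
```

With the face near the centre of the sphere the magnification reduces to 1/n, so real water makes the head look about 25% smaller, while an index of 1.1 shrinks it by only about 9%. The flat-mask case reproduces the snorkeling effect: objects appear roughly a quarter closer, and therefore larger.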

How did you work with the SFX and stunt teams?

By letting them do what they’re best at. I always appreciate practical special effects, and I consider the best visual effects to always be a combination of both. For a scene like the corridor explosions and compression, we worked on the full CG shot of the corridor with the workers trying to escape, but used some of the clean plates with water sprays, added practical sparks taken from other plates, and used the practical SFX as reference. When looking at the sequence, you cannot tell what is practical and what is computer generated, and for us that’s the real deal. The same went for the stunts, where the stunt doubles were rigged in their suits to perform, whether being blown out of the elevator, when Norah is attacked by the clinger, or when she is thrown back by the blast. For these particular actions, the performances were roto-animated down to the fingers and toes, so we kept the exact performance of the stunt double in our fully computer-generated images.

Which stunt was the most complicated to enhance?

The huge bubble blast that throws Norah back when the Behemoth hits the bridge. Not so much because of the stunt itself, but because of the nature of the blast shockwave, which goes into super slow motion in one shot, then blasts again before getting sucked back in as Norah lands. Apart from the visor plate in the close-up, everything was computer generated: based on the stunt performance in the first shot, on Norah’s roto-animated performance in the close-up, and fully CG animated in the third shot. In the end, the whole cut and event worked pretty well.

The movie introduces terrifying creatures. How did you work with the art department for their design?

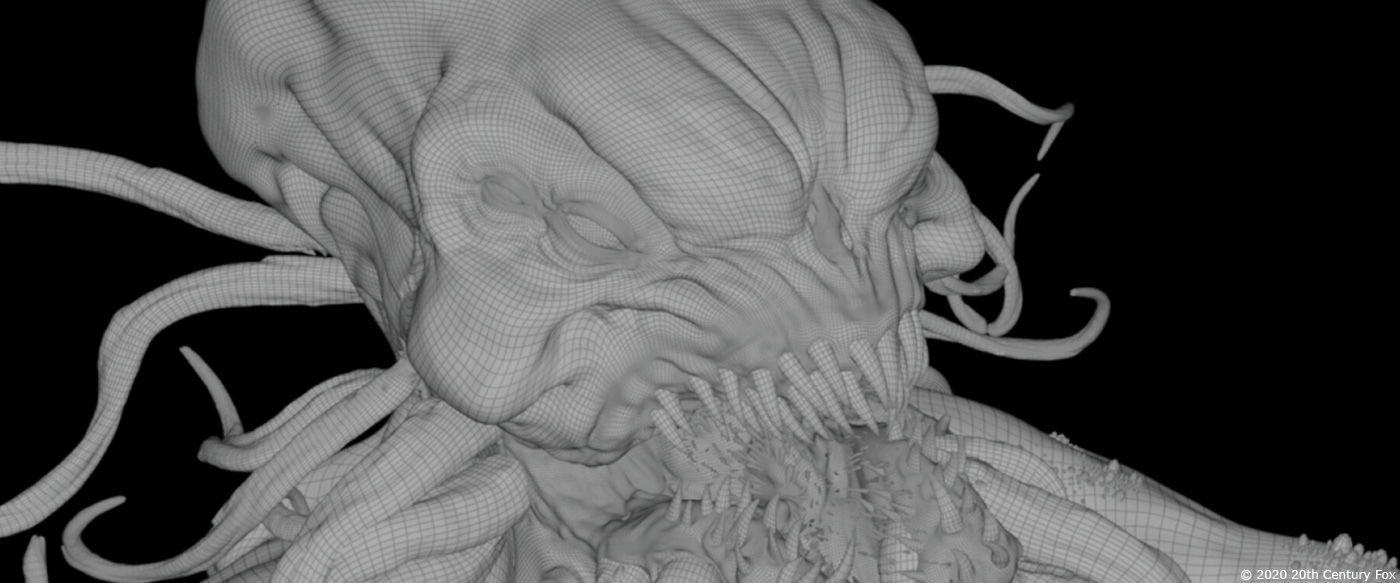

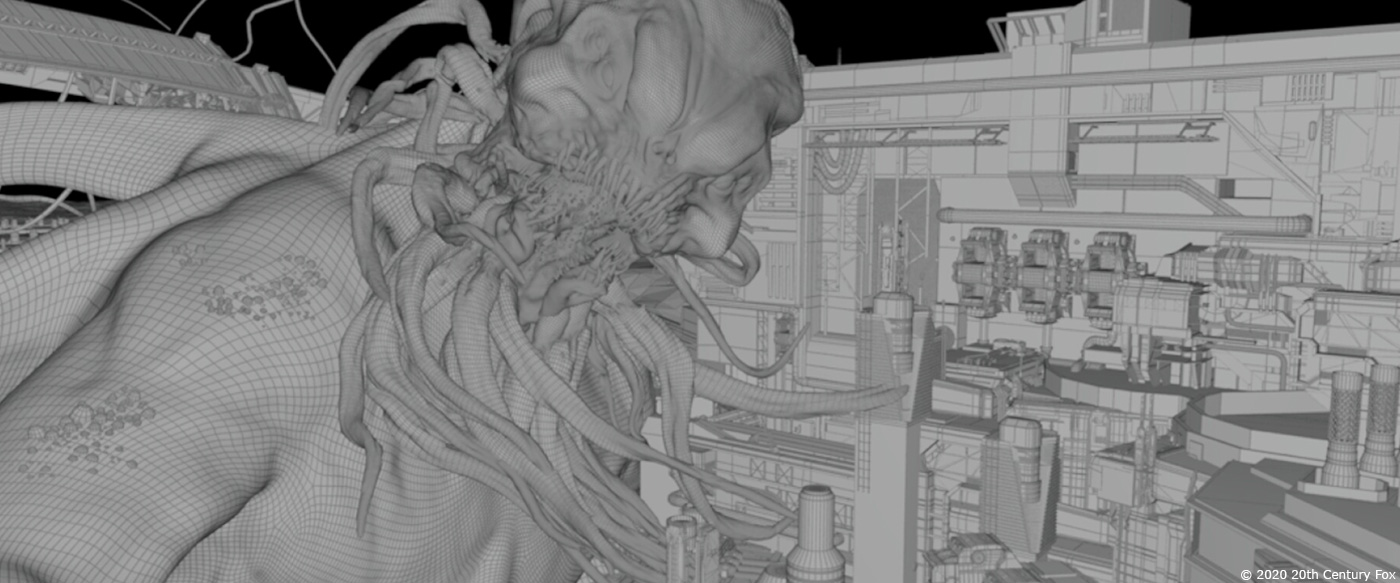

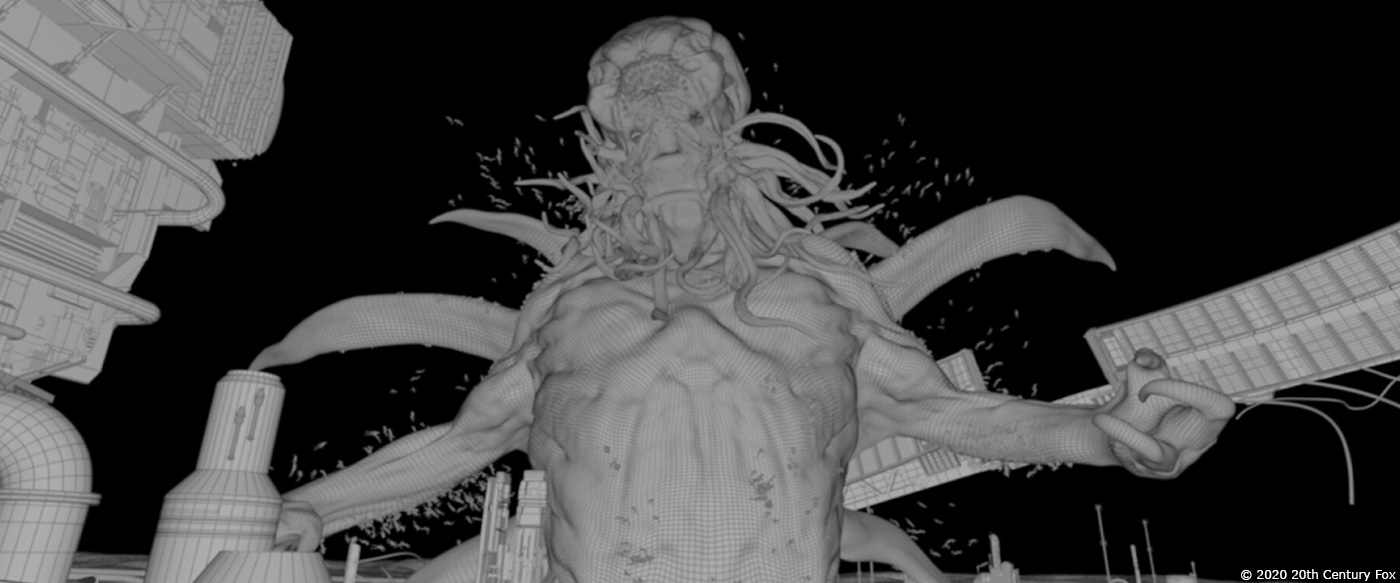

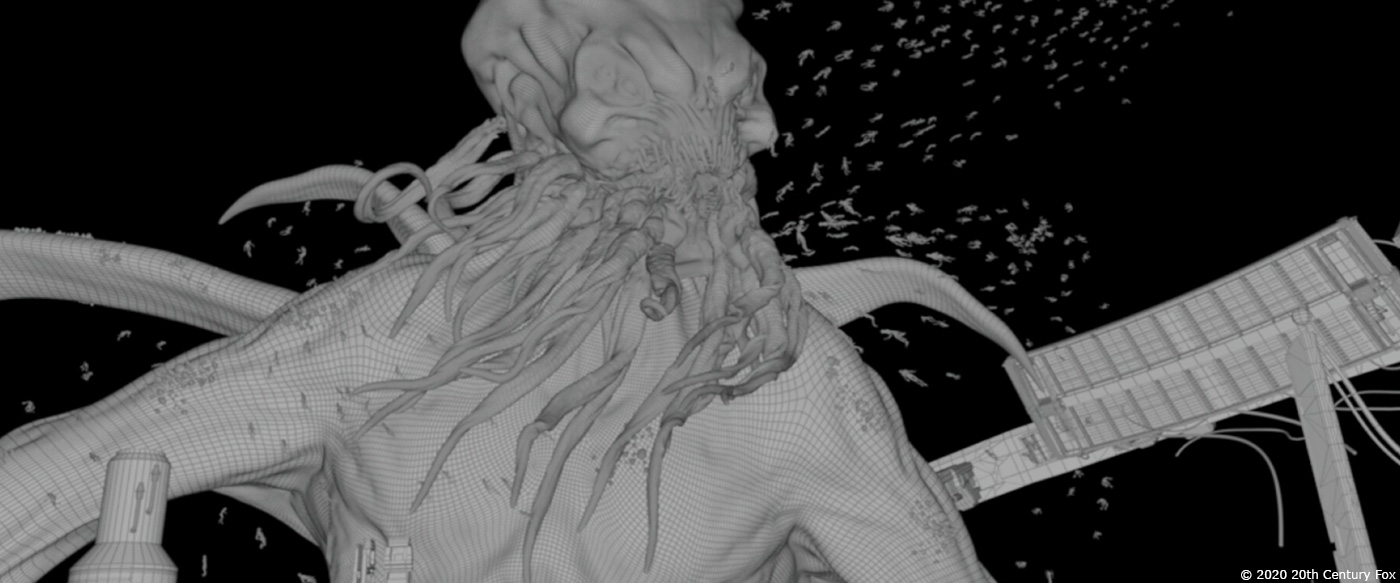

The creature work went through many different phases and designs during post. We changed the main design quite drastically when we had almost finished the existing clinger asset. Clinger v1.0 is actually used for a couple of shots near the end, when Norah is lost on her own. It had more squid- and octopus-like features, with huge claws along the tentacles. William felt it looked too much like what you would expect, so he wanted to bring an extra dimension to what the characters would be facing. The design changed into a tall, biped-like creature, which became our clinger v2.0. The Behemoth shifted to more of a “Call of Cthulhu” type. A lot of the final designs were handled by Leandre Lagrange, one of our senior concept modellers, and provided to us as sketches as well as a sculpted 3D model, which is very practical to start with.

Can you explain their creation in detail?

Once a concept is approved, our asset team, under the supervision of Christoper Antoniou and Anelia Asparuhova, picks up the concept model and starts the referencing stage to refine the actual model. We look at real-life references of different animals and surface qualities, so we can refine the anatomy and the model details. Once that is approved, we refine the actual mesh during the topology process, then move it to the texturing stage. For clinger v2.0, we looked at deep-sea creatures, jellyfish and frogs for the surface quality as well as the pigmentation layout. This carried over into the look when working on the skin properties and translucency, both inside and outside the body, as at some point Norah ends up in the clinger’s stomach.

The Behemoth was a real challenge, being roughly 700 meters long. You end up juggling a couple of assets depending on which body parts are featured. The idea was that the clingers would be like parasites sitting in its skin pores, based on the female Surinam toad, a frog whose young develop embedded in the skin of its back and then pop out. Some people find it gross because of trypophobia, an aversion to clusters of small holes on a surface, like barnacles or lotus seed pods… Other skin features, such as scars, were based on big whales. Strings of algae of different sizes were added to its face and arms to add surface complexity and maintain scale. Most of the time we had to cheat the actual water attenuation properties to fit its scale; otherwise, in the real world, it would be impossible to see more than a small part of its body.

Can you tell us more about their rigging and animation?

Clinger v1.0 was a real rigging challenge with its 15 tentacles, yet it ended up with limited screen time. With clinger v2.0’s humanoid shape, the rigging was straightforward. Its animation, on the other hand, was a challenge because of the underwater environment. Our Animation Supervisor, Michael Langford, took his team to a swimming pool to study and record reference of the movements it takes to walk underwater, stand and change position. This was combined with sea lion, seal, octopus and squid references for speed and ease. We wanted it to feel aquatic but awkward at the same time, to deliver the level of scare William was seeking.

For the Behemoth, the challenge was again its scale. At that size, it is difficult to keep the physics real, because you would barely see it move in any given shot. As it rips into the Roebuck with its beard tentacles, for instance, we had to cheat the actual animation speed and find the sweet spot that keeps its sense of scale. However, increasing the speed creates challenges for the FX department, as you have to adapt your simulations and bend real-world physics. So it takes a few iterations to get it right, or to make it feel right.

How does the deep sea affect your animation and lighting work?

Animation-wise, we had to get familiar with the density of water and its incompressible nature: compared with air, which can compress, propagation and fluid motion behave completely differently in a liquid. We shot the actors’ performances at different frame rates, based on the action or the framing of the shot. Based on the rehearsals, Blair and I would suggest a more appropriate frame rate for a particular camera and shot, and we used cable rigs to constrain the performances, as if adding resistance to the actors’ runs. In fully animated shots, the team focused on details taken from real-life references, like pushing your hand through the water to rotate your body.

The lighting work for UNDERWATER was a big turning point in our postproduction. William and Bojan Bazelli, the director of photography, were targeting a photorealistic feel for the lighting, therefore very source-based and with a limited number of light sources. Following the pitch, though, we had agreed to cheat and maintain a level of ambience that you usually only get closer to the surface, so we could play a little more with distance and silhouettes in the dark. As we were adding the water feel through particulates and silt, we were not reaching the realism we all wanted; it wasn’t “wet enough”. Our CG supervisors, Manolo Mantero, Lazlo Mates and Nick Hamilton, together with our compositing supervisors, looked into altering the shading, introducing more self-bounce, much more diffuse light and softer speculars, because underwater, light bounces off surfaces more than it does in air. The test results were very promising and convinced everyone of the underwater “wet” look. Our process already required roto-animation of the performances for all the FX interactions, and we would normally then rotoscope all the characters to split them in depth. So we shifted the rotoscoping effort into refining the roto-animation instead. This let us render the suits digitally, looking like the real ones, while gaining the bounce and shading control, plus fully precise depth attenuation, of the full CG approach.

The process would then match the actual plate performances and lighting, render additional shading properties for the full environment and suits, and combine everything in compositing using in-house underwater shading templates developed by our team. We then had full control over how far you see, how much ambience there is, and so on.
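A common, generic way to control "how far you see" and "how much ambience" in a depth-based composite is Beer-Lambert transmittance, T = exp(-sigma * d), blending each pixel towards a water colour as distance grows. The Python sketch below shows that standard model only; it is not MPC’s in-house template, and the function names, attenuation coefficients and water colour are illustrative assumptions, not production values.

```python
import math
from typing import Tuple

RGB = Tuple[float, float, float]


def underwater_attenuate(color: RGB, distance: float, sigma: RGB, ambience: RGB) -> RGB:
    """Blend a surface colour towards the water colour with distance, per channel:
    out = color * T + ambience * (1 - T), with transmittance T = exp(-sigma * distance)."""
    out = []
    for c, s, a in zip(color, sigma, ambience):
        t = math.exp(-s * distance)
        out.append(c * t + a * (1.0 - t))
    return (out[0], out[1], out[2])


def visibility_distance(sigma: float, threshold: float = 0.05) -> float:
    """Distance at which transmittance drops to `threshold` (5% by default)."""
    return -math.log(threshold) / sigma


# Illustrative coefficients only (per metre); red is absorbed fastest in water.
sigma = (0.45, 0.12, 0.08)
murk = (0.0, 0.03, 0.05)  # hypothetical ambient water colour
print(underwater_attenuate((0.8, 0.7, 0.6), 10.0, sigma, murk))

# "Cheating" for scale: dividing sigma by k stretches the visibility distance by k.
print(visibility_distance(0.08), visibility_distance(0.08 / 20))
```

Because visibility scales inversely with the coefficients, a single scale factor on sigma is one simple way to let a very large subject such as a 700-meter creature stay readable, at the cost of physical accuracy.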

Which sequence or shot was the most challenging?

As a sequence, the run to safety, when the Kepler explodes while they are in the cargo lift and it crashes to the ground. The sequence introduced explosions, fire, large crashing debris, POV shots inside the helmet, multiple characters running, and wide shots. All of these work against you when you want to sell a real underwater feel, as none of them are realistically possible. But in the end, what a sequence! I think it worked pretty well.

Is there something specific that gave you some really long nights?

We started the show hoping that James Cameron would say he believed it looked real underwater. THE ABYSS is a landmark of underwater cinematography. The bar was set really high, and it did indeed take long hours to get there.

What is your favorite shot or sequence?

Two shots come to mind. The first is when Norah walks on the platform away from the elevator where Rodrigo has just exploded, and a piece of his jaw hits her helmet visor. That set the tone.

The second one is not long after, when Norah jumps onto the cargo lift and the camera looks down into the abyss beyond. It gives me goosebumps every time.

What is your best memory on this show?

Being a part of it. We had such a blast.

How long have you worked on this show?

A little over a year.

What’s the VFX shots count?

A little north of 400 shots.

What was the size of your team?

Around 400 artists across all sites.

What is your next project?

I’m VFX Supervisor on ARTEMIS FOWL, out in May 2020.

As for the next one, I really can’t wait to talk about it because it is going to be really fun…

A big thanks for your time.

WANT TO KNOW MORE?

MPC: Dedicated page about UNDERWATER on MPC website.

© Vincent Frei – The Art of VFX – 2020