To kick off 2019, Bryan Hirota explained in detail the work of Scanline VFX on Aquaman. He then worked on Gemini Man. Today he talks about the opportunity to bring to life the vision of director Zack Snyder on Zack Snyder’s Justice League.

Last summer, Julius Lechner told us about Scanline VFX’s work on Spider-Man: Far from Home. He then went to work on Hubie Halloween.

How did you feel being back on Justice League?

Bryan // A bit surreal to be revisiting Justice League so many years after the theatrical version. It was a mixture of familiarity having spent so much time on it the first go around and confusion trying to remember the specifics of how things were left back in 2017.

Julius // It was definitely a unique project. You usually don’t get to work on a movie twice. A lot of the old shots had to be approached completely differently compared to how you would on a regular production, because we had to dissect what needed to be replaced or changed and what could be kept from back then.

How was this new collaboration with director Zack Snyder and VFX Supervisor John ‘D.J.’ DesJardin?

Bryan // I’ve worked with both of them numerous times and as such, have developed a really efficient working relationship with both of them.

Julius // I had worked on some of their projects previously, but it was the first time with them for me as a VFX Supervisor. It was a really pleasant experience and I think it’s amazing how creatively in sync Zack, D.J. and Bryan are.

Can you tell us what Scanline VFX did on this show?

Scanline delivered north of 1,000 shots over 22 sequences for this version of the film. The biggest sequences (numerically) were the Tunnel Battle, Park Battle, Russian Melee and Underwater Fight. There were numerous smaller sequences that I’d like to specifically highlight, including the opening of the film and the Beach Torture and Martian Manhunter sequences.

It’s really unique to be able to work again on the same shots and improve them. What did you do differently thanks to your experience on the 2017 version?

Bryan // Our biggest advantage was that most of the lead personnel on our team had worked on the theatrical version, so there was a great familiarity with the work. Also, the shots that didn’t require some kind of large creative overhaul, for example those with small content changes or those that only had to be made to work for the new 1.33 aspect ratio, were more of a technical exercise in completion.

Julius // For the old shots, it helped tremendously having solved either the creative or the technical challenges once before. Also, our experience on the 2017 version drove a lot of the new workflows, development and setups over the last couple of years, spearheaded by our CG Director/VFX Supervisor Harry Mukhopadhyay, who was a key figure on the show back then. It was great to re-apply them back to where they came from and see how much easier certain things were this time around.

How did you manage the huge task of restoring the previous work and updating the assets?

We had a name for it – “Indiana Jones-ing.” In order to plan the show, it was crucial for us to know which parts of the old data we needed to bring back, what still made sense for us to use and what would be better to recreate. Not everything was still valid, because our technology and pipeline have changed so much. Once we had everything we needed back online, we had to make sure that it still worked.

Our render engine version had changed, all the software versions had changed, our in-house simulation software Flowline had had countless updates since then, and so on. We had to make sure everything still looked the same and worked with today’s technology. Since we had over a thousand assets back then, we had to figure out a way of knowing which assets would actually be needed in this new version of the film. We wanted to focus only on the assets that would be used in the sequences that we were going to be working on and not spend time on things that would not be used. But there was no real way for an artist to know where and how each asset was used back then. Our database, however, keeps track of everything that’s used anywhere. That helped us narrow the list down. Once we had that list, our philosophy was that if we made sure that every asset and setup worked by itself, we could be confident that we wouldn’t get any bad surprises down the road. Some things, like hero characters and simulation setups, we checked manually, while other things, like assets used in environments, were checked by automated turntable setups.
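To make the narrowing-down step concrete, here is a minimal Python sketch of the idea: filter usage records down to the sequences being worked on, then route each remaining asset to a manual or automated check. The record layout, asset names, asset types and the `plan_asset_checks` helper are all hypothetical and chosen for illustration; they are not Scanline's actual database schema or tooling.

```python
# Hypothetical usage records: (asset_name, asset_type, sequence).
# A real production database would hold far richer information.
USAGE = [
    ("cyborg", "hero_character", "Tunnel Battle"),
    ("steppenwolf", "hero_character", "Underwater Fight"),
    ("street_lamp", "environment", "Park Battle"),
    ("old_prop", "environment", "Unused Sequence"),
    ("spike_sim", "simulation_setup", "Russian Melee"),
]

# Assumption: these asset types are checked by hand, the rest via turntables.
MANUAL_TYPES = {"hero_character", "simulation_setup"}


def plan_asset_checks(usage, active_sequences):
    """Keep only assets used in the active sequences and assign a check type."""
    needed = {}
    for name, asset_type, sequence in usage:
        if sequence in active_sequences:
            needed[name] = asset_type

    plan = {"manual": [], "turntable": []}
    for name in sorted(needed):
        bucket = "manual" if needed[name] in MANUAL_TYPES else "turntable"
        plan[bucket].append(name)
    return plan


if __name__ == "__main__":
    active = {"Tunnel Battle", "Park Battle", "Russian Melee"}
    print(plan_asset_checks(USAGE, active))
```

Assets that only appear in sequences outside the active set (here `old_prop` and `steppenwolf`) drop out of the plan entirely, which mirrors the goal of not spending time on things that would not be used.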

It can be pretty tricky to backtrack on shots that were completed years ago and try to ascertain why things were done a certain way back then. What made this show really unique compared to others is that every shot had to be adjusted on a case-by-case basis. Changes that you needed to make on one shot might not be required on others, so it was important to have the whole team completely in sync about every single one of them.

After 4 years, your pipeline has changed. How did you adjust or rebuild the assets for it?

One thing that changed quite a bit was our rigging pipeline, partly because Maya is pretty different under the hood now compared to 2016/2017, so our rigs from back then simply didn’t work anymore. However, we were able to retain certain parts of the rigs, like the skinning for example. We used the fact that we had to rebuild the rigs as an opportunity to make them more efficient and improve any areas that caused us headaches back then. The character models themselves remained relatively unchanged, although we did have to upgrade some assets that had previously played a smaller role in our sequences and were now used more extensively, like Batman’s tactical suit. As we didn’t work on the final battle in the 2017 version, we had only used this version of his suit in a single shot where it didn’t cover a large screen area. For this film, we used it extensively in full CG shots during the Russian Melee sequence.

Which asset was the most challenging to enhance or rebuild?

I would say Flash and Cyborg were probably the most work. Cyborg is of course tricky because the model itself was complicated whereas Flash had a more sophisticated rig than other characters because all of his armor plates are tied together with wires and he had to hold up and move correctly right in front of the camera.

How does the 1.33 aspect affect your work?

From a shot production point of view, honestly the only change that we really needed to make was to render/composite more vertical area than we would for a wider aspect ratio. We were still carrying the same horizontal aperture, just extending the top and bottom.
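As a rough illustration of what "same horizontal aperture, more vertical area" means in pixels, here is a small Python sketch. The 2048-pixel width and the 1.85 comparison ratio are assumptions picked for the example, not figures from the production.

```python
def frame_height(width_px: int, aspect_ratio: float) -> int:
    """Height in pixels for a given width and width:height aspect ratio."""
    return round(width_px / aspect_ratio)


def extra_rows(width_px: int, wide_aspect: float, tall_aspect: float) -> int:
    """Additional pixel rows needed when keeping the width but using a taller ratio."""
    return frame_height(width_px, tall_aspect) - frame_height(width_px, wide_aspect)


if __name__ == "__main__":
    width = 2048  # assumed working width, for illustration only
    print(frame_height(width, 1.85))      # height at the assumed wider ratio
    print(frame_height(width, 1.33))      # height at 1.33
    print(extra_rows(width, 1.85, 1.33))  # rows added, split between top and bottom
```

With these assumed numbers the frame grows from 1107 to 1540 rows, so roughly 39% more image has to be rendered and composited per frame while the horizontal framing stays the same.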

The schedule was short and the amount of work really huge. How did you organize your teams with your VFX Producer?

We split the large show into three smaller shows of similar shot count to help manage it:

- Team Russia – Chris Mulcaster (CG Supervisor), Curtis Carlson (Compositing Supervisor), Jonathan Freisler (FX Supervisor)

Russian Annex, Russia Beginning, Russian Melee, Opening, Glass House, Martian Manhunter

- Team Park – Mathew Praveen/Jordan Alaeddine (CG Supervisors), Miles Lauridsen (Compositing Supervisor)

Gotham Police HQ Rooftop, Hero Tribute, Park Battle, Containment Facility, Cyborg Learns, Aftermath, Underwater Reveal, Underwater Fight, Boat Save, Beach Torture, Unity Creation, Containment Prep, Batarang

- Team Tunnel – Ioan Boieriu (CG Supervisor), Andy Chang (Compositing Supervisor)

Tunnel Battle

Our Animation Supervisor Clement Yip and his team worked as one unit across all sequences.

How did you use your experience on Aquaman for the new underwater sequences?

We originally developed the underwater techniques we used on Aquaman during Justice League. We further refined the techniques on Aquaman and then brought them back full circle to this.

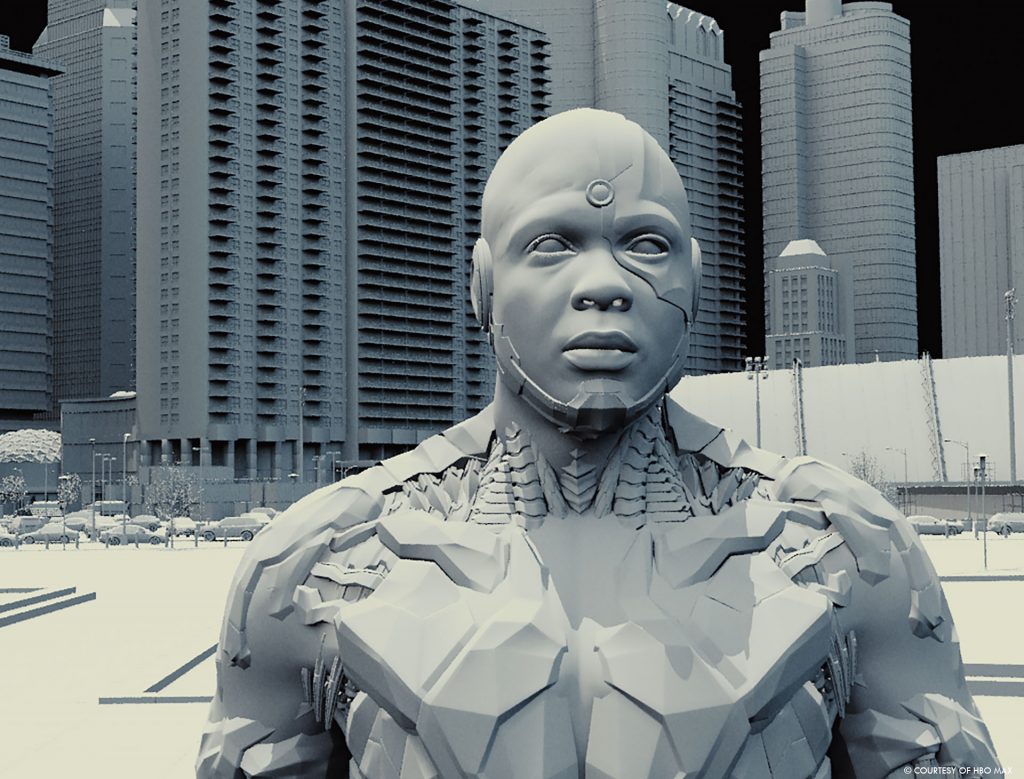

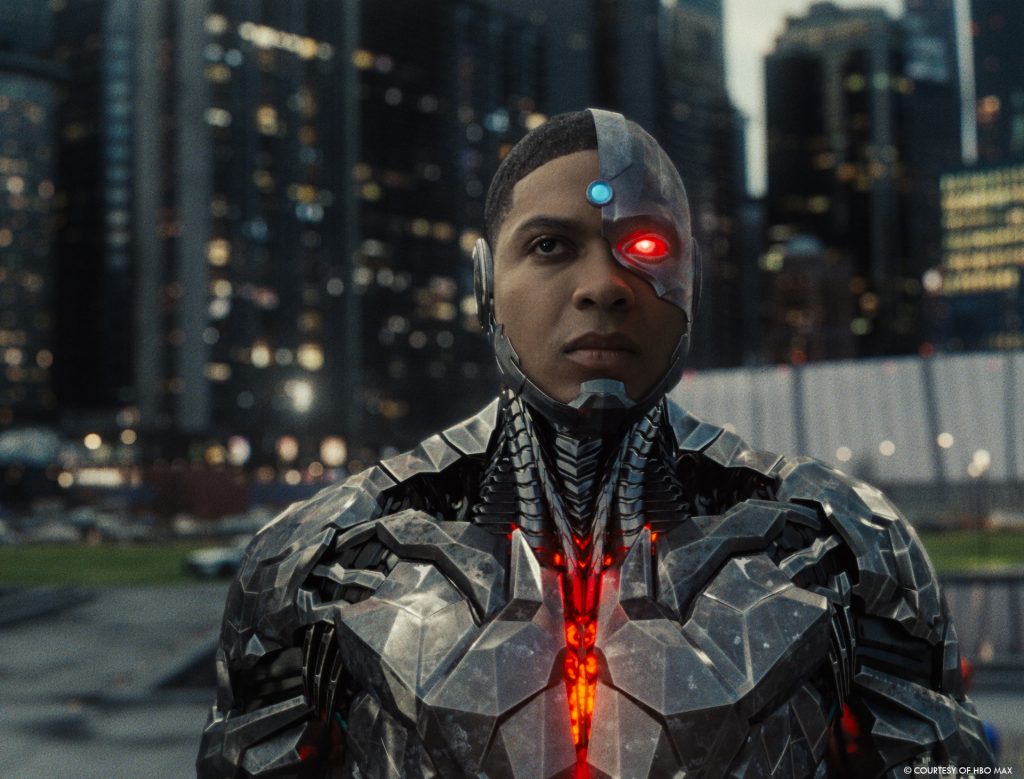

Can you explain in detail about the creation of Cyborg?

We received the model for Cyborg back in 2016/2017 from another vendor, so we were not directly involved in his creation. However, we did create some of his gadgets, like his quad arms for example. Since his geometry is intricate and, while staying rigid, still had to allow for a reasonable range of motion, his character rigging was definitely a challenge. For the shots where we had to use his model, it was crucial to have a tight lock on his head motion to fit his metal skull and face piece onto his performance. On close-up shots with lots of dialogue, it could be quite tricky because of all the soft tissue moving around, which in reality would be pinned down by the metal.

How did you animate his armor during his flight mode and during the fight?

Cyborg has hidden engines that can unfold from underneath his armored plates. We always tweaked their direction and intensity during the animation stage and passed on the values to our simulation department, so the exhausts made sense with his motion. Often, we diverged substantially from the original plate, if there was one available, and either used a CG face or mixed it with camera projections of Ray Fisher’s performance.

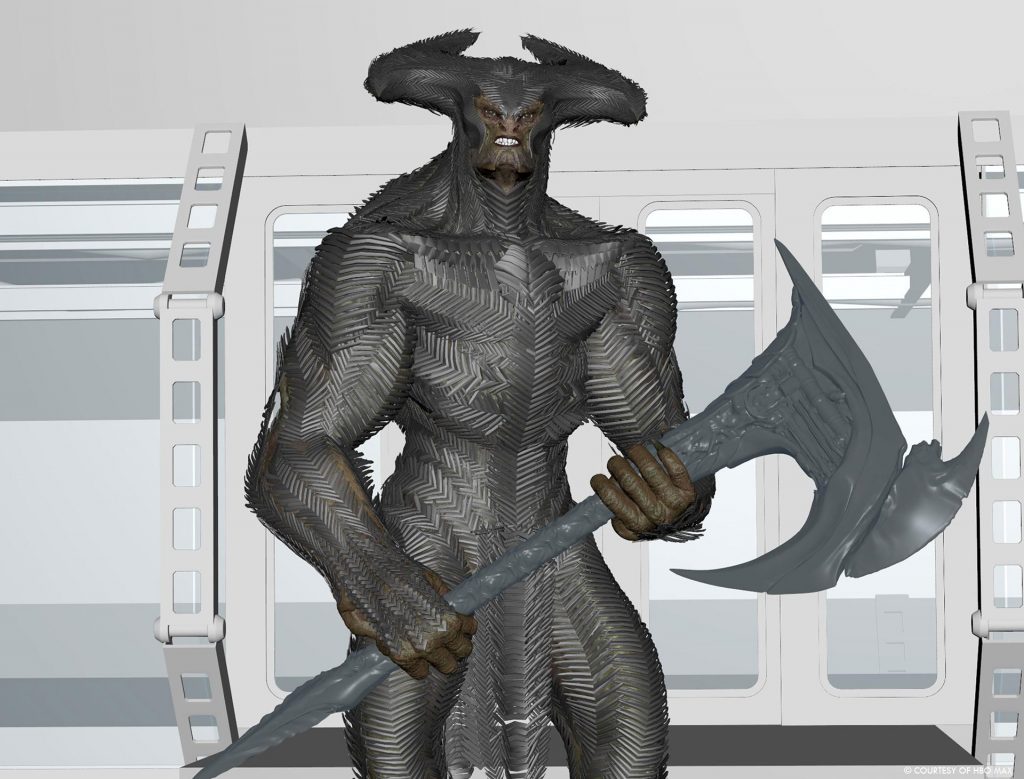

The bad guy, Steppenwolf, has a new improved look. Can you explain in detail about his creation?

Steppenwolf’s current design can actually be seen in Batman v Superman, when Lex Luthor is in the genesis chamber at the end of the movie. So we did know about this design before. Weta Digital built his model and also shared their simulation setup for his spikes with us. The heavy geometry was definitely a challenge for our rigging and simulation departments initially, but we were eventually able to build very efficient rigs and setups for it.

Can you elaborate about his animation, especially his face?

On our sequences, most of Steppenwolf’s dialogue, performed by Ciarán Hinds, was recorded back in 2016/2017. We also had footage from a head mounted camera to go along with it. We used facial motion capture software to track Ciarán’s movements and re-targeted them to Steppenwolf’s face. Since he is an alien creature and his facial features are different from Ciarán’s, the performance needed to be adjusted to fit Steppenwolf’s face. However, we did try to retain as much of Ciarán’s acting as we could and keep his take on the character as recognizable as possible.

Steppenwolf’s armor structure moves. Did you use procedural tools for this?

We used Houdini to simulate the movement in Steppenwolf’s armor. Weta Digital shared their setup with us, which gave us a good head start. However, we did have to adjust it to handle certain things that only showed up in our shots, for example the absorption of Atlantean blaster hits or Cyborg’s sonic cannon. Other special behaviors we added were shock ripples when Steppenwolf either throws hard punches or hits a wall. Those were riding on top of his regular body simulations and would propagate like a wave through his armor spikes. In the underwater fight with Aquaman and Mera, the armor needed to be affected and damaged by Mera’s powers and then rebuilt after Steppenwolf recovers.

How did you handle the lighting challenges on your CG characters?

Each of the characters had their own challenges due to their individual designs. For example, due to Steppenwolf and Cyborg’s metallic armor, it was really important to get the reflections and highlights right as it can make or break a shot. In particular, Steppenwolf’s face was interesting in regards to how much his character and the mood he portrays changes simply with light direction. We often made use of the fact that he has the armor on his forehead, to have his eye sockets fall into darkness, giving him a menacing look.

A new CG character appears in this version, the Martian Manhunter. Can you explain in detail about his design and creation?

Martian Manhunter was a new addition that did not exist at all in 2017. We based his model on a concept design that we received from Zack, but we were also able to incorporate our own ideas. Zack wanted to have his face look alien but still recognizable as Harry Lennix, who also plays General Swanwick in the movie. Because this character was a new addition, and due to the restrictions surrounding COVID, his creation was a bit unusual. As we could not use a character scan as a starting point like we usually would, we had to sculpt his face based on photo references. For his performance capture, we also had to get creative. Usually we would use footage from a head mounted camera but since that was not possible given the circumstances, we relied on regular cameras filming Harry’s head as he was recording his dialogue. We then used facial motion capture software to track his performance and apply it to Martian Manhunter’s face.

As in every movie by Zack Snyder, there are tons of slow-motion shots. How does that affect your FX work?

There are different cases of slow motion that we approach with different methodologies. Shots that are just slower overall are relatively straightforward, as we just work with a different time step in all of our simulation software packages. The ones that have creative re-times, for example slowing down or speeding up an action over the course of the shot, can be trickier to deal with. Especially for high-complexity shots, with tons of effects and character simulations, we have to make sure everything is in sync. Once Zack likes a re-time, he usually sticks to it, which is great. We would either provide him animations or effects blocking simulations to work out the timing with editorial. But to be prepared for eventual changes and to make it easier for the different departments to sync up and deal with motion blur correctly, we developed a system that lets us work on the shots independent of the re-time. This allows us to apply any re-time curve from editorial to our animations and simulations, without having to do things over again.
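The idea of working independently of the re-time and applying the curve afterwards can be sketched in a few lines of Python. This is a simplified illustration, not Scanline's system: it assumes a re-time curve given as hypothetical `(output_frame, source_frame)` keys with linear interpolation between them, and a cache holding one scalar value per source frame as a stand-in for animation or simulation data. Motion blur handling, which the answer above mentions, is not covered.

```python
from bisect import bisect_right


def source_time(retime_keys, out_frame):
    """Map an output frame to a (possibly fractional) source frame.

    retime_keys: list of (output_frame, source_frame), sorted by output_frame.
    Values outside the keyed range are clamped to the first/last key.
    """
    outs = [key[0] for key in retime_keys]
    if out_frame <= outs[0]:
        return float(retime_keys[0][1])
    if out_frame >= outs[-1]:
        return float(retime_keys[-1][1])
    i = bisect_right(outs, out_frame) - 1
    (o0, s0), (o1, s1) = retime_keys[i], retime_keys[i + 1]
    t = (out_frame - o0) / (o1 - o0)
    return s0 + t * (s1 - s0)


def sample_cache(cache, src):
    """Linearly interpolate cached per-frame values at a fractional source frame."""
    lo = int(src)  # source frames are assumed non-negative
    hi = min(lo + 1, len(cache) - 1)
    frac = src - lo
    return cache[lo] * (1 - frac) + cache[hi] * frac


def apply_retime(cache, retime_keys, out_frames):
    """Resample a source-time cache onto output frames using the re-time curve."""
    return [sample_cache(cache, source_time(retime_keys, f)) for f in out_frames]


if __name__ == "__main__":
    cache = [0.0, 10.0, 20.0, 30.0, 40.0]  # one value per source frame
    keys = [(0, 0), (4, 2), (6, 4)]         # half speed, then back to full speed
    print(apply_retime(cache, keys, range(7)))
```

Because the cache is authored once in source time, a revised curve from editorial only changes `keys`; nothing upstream has to be redone. In this sketch a curve whose source frames decrease, such as `[(0, 4), (4, 0)]`, plays the same cache in reverse.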

Close to the end there is a beautiful sequence with Flash recreating the Universe. What kind of references and influences did you receive for this?

We knew that we really wanted to top the moments in the Speed Force that had been seen in the film prior to this event. There was some previs that outlined the basics of the action, and Zack had a strong vision that when the Flash was running and rebuilding the world, each of his footfalls would create a mini big bang, with galaxies forming under his feet.

His inclusion of a couple of these shots in the original Hallelujah trailer really helped drive innovation. We referenced many things including Hubble space telescope imagery, nuclear explosion tests in Nevada and an assortment of shots we’d done involving the Speed Force.

Can you explain in detail about the creation of this sequence?

This sequence had some of the heaviest FX shots out of our sequences, and especially with the short timeframe, good planning was crucial. As mentioned above, the fact that some of the shots were already in the first trailer made us push hard early on for the creative aspects and the setups that we needed. The destruction of the environment was done with Houdini as well as 3ds Max and thinkingParticles, while all of the atmospherics were done with Flowline. The general logic for a lot of those sims was to work on them as if everything happened with time moving forward and then basically play the caches backwards. The blue explosion, for the part where the Russian city gets obliterated, was done in Flowline, and the later part in space was done with particle systems within Nuke. This gave us a lot of flexibility to adjust the look as we wanted with quick turnarounds. For Flash himself, we usually replaced everything with CG, apart from his face, in order to get proper lighting interaction with all the electricity around him. The lightning bolts were a combination of procedural simulations and a lot of custom-painted hero bolts to get the exact timing and look we wanted.

How did you create the various environments and especially the Russian location for the final battle?

For the scenes we had previously worked on, we could use the environments we built back then for the most part. Sometimes we needed to do upgrades when certain bits of the action changed or if we were going to see things that we hadn’t before. An example of one of the areas we had to upgrade was for the underwater fight, as some shots in the opening sequence shared the same environment. We had to build a large area surrounding the Redoubt, the building where the Atlantean mother box is kept.

An environment that we had to create from scratch was for the opening of the movie when Superman dies. We had worked on Batman v Superman, but not on this sequence. So we had to build something that plausibly fit into the finale of the old film. We could however use our matte paintings from back then as a base for the far background.

Although we didn’t work on the final battle in the 2017 instalment of the movie, we had a head start on the Russia environment, thanks to Warner delivering us the assets that were used back then. The action in the sequence had changed a lot though, so areas that were previously not that important now needed more detail. Also, we needed more buildings that could be destroyed in FX, and some assets, like the big Parademon cannons, didn’t exist at all back then. So, we did have to create lots of new assets and adjust the existing assets to our needs. Any vegetation scattering had to be done from scratch to work within our pipeline.

Which location was the most complicated to create and why?

I wouldn’t say that one location was necessarily more complicated than others. All of the big environments were of similar complexity and had unique challenges in how their build had to be planned.

What is your favorite shot or sequence?

Bryan // I’m quite proud of all of the work that the various teams at Scanline completed for the film, but if there’s one moment that’s closest to my heart, it’s when the unity is achieved, the world is destroyed, and it’s up to Barry to turn back time to save the world. Internally we referred to it as the “cosmic rewind.”

Julius // There are a number of highlights for me across all sequences, for example: the cosmic rewind, the Parademon tackling the Batmobile, Steppenwolf in the machine room, Steppenwolf when he leaves the underwater fight with the mother box in his hand, and Cyborg stopping the flipping Humvee. That one was one of my personal arch enemies in 2017 and it was eventually omitted from the movie, so it was great to get back to it and be able to finish it for Zack’s version.

What is your best memory on this show?

Bryan // Being able to help Zack realize his version of the film is the most rewarding memory that I’ll take away from this project.

Julius // It was great seeing all the amazing work come together after the incredible effort and all the passion that the whole team put into it. I’m also very proud that we created over 80 minutes of the movie.

How long have you worked on this show?

Seven months overall. The first month was primarily de-archiving and setting up to bring the dormant show back to life. So, six months of active shot production.

What was the size of your team?

We had in the neighborhood of 400 artists working on the show worldwide.

What is your next project?

Bryan // I am working on an exciting project that I am not able to share yet, but stay tuned!

Julius // I am currently working on Matt Reeves’ The Batman, which I’m very excited about.

A big thanks for your time.

WANT TO KNOW MORE?

Scanline VFX: Official website of Scanline VFX.

HBO Max: You can watch Zack Snyder’s Justice League on HBO Max now.

© Vincent Frei – The Art of VFX – 2021